Upgrade the database to 4x

Describes steps to convert a database from 3.x to 4.x in Optimizely Configured Commerce.

The database for Optimizely Configured Commerce 4.x has significant differences as compared to 3.x databases. The basic steps will include:

- Upgrade the database to the most current 3.71 release

- Use SQL Compare or other tool to compare it against the "gold standard" 3.71 database (Insite.Commerce.Golden371) to get it synched up with the expected look of the 3.71 database

- Apply the PreBatch script (4.0_Master_Prebatch) - should execute without failure

- Apply the Batch Synch script (4.0_Master_BatchSynch) - this should execute without failure but may need some "help"

- Apply the PostBatch script (4.0_Master_PostBatch) - this should execute without failures

- Set any application settings as needed, especially if you are going to use the db as a pilot/test db

NoteThe scripts mentioned in the above steps can be found under the folders called \InsiteCommerceDatabase\4xUpgrade within the 4.2 SDK, located within the Optimizely support portal.

Before you start

- You will likely run through this process at least twice - the first time to convert a live site to a new pilot/test version and the second time to take the site live.

- You will most likely want to get a local copy of the database for the initial conversion

- Make good notes on the problems with the data and save the compare script (.scp file) from SQLCompare so that it will be easier to upgrade the site the next time

- You may consider cleaning up the errant data on the production site prior to converting to live as well

- Make sure you have a good, current backup of your database in case of problems during the process it may be best to roll back and start over

- Make sure you have plenty of disk space - because many of the tasks are bounded in a single transaction, the log file will grow very large. This allows the scripts to be rerun in case there is a problem but it does take longer and consumes log space.

- Know that duplicate records is the largest problem to resolve during conversion.

Step 1 - Upgrade the db to 3.71

For the batch sync process (which is over 20,000 lines of SQL), the preferred way to handle this process is to start with a "good" 3.71 db that conforms to our standards such that the upgrade to 4.0 will be seamless.

-

Start with pulling in the target database to a server you can access locally

-

Start up a project that us using the most current 3.71 DLLs

-

Run the project which will automatically apply the db scripts through all previous versions

-

Modify the connectionStrings.config file in the InsiteCommerce.Web project to point to the db to be upgrade

-

Reset IIS if the project has ever been run to take the config changes

-

If running locally, if Code Contracts are not loaded, you can ignore the error messages

-

You will likely get an error indicating that localhost is not a valid website - this is fine and expected - the db changes would have been applied - you can tell by looking at a refreshed list of files and look for table ContentItem which did not exist prior to 3.71

-

Validate that the scripts all ran by looking in the ApplicationLog either through the M/C or by using the following:

SELECT \* from ApplicationLog where Source = 'LoadDbChanges' and LogDate \>= '2015-07-05'to get a list of any scripts that did not get applied and remediate to ensure that all scripts get applied

-

Step 2 - SQL compare to conform the db to the "golden" db

The instructions that follow are for using SQL Compare to get the 3.7 upgraded database to conform to the expected, "golden" version. One has to take great care to review things and make sure we are not dropping custom extensions. The intent is that by comparing the actual 3.71 db to the golden version, there should be very limited changes.

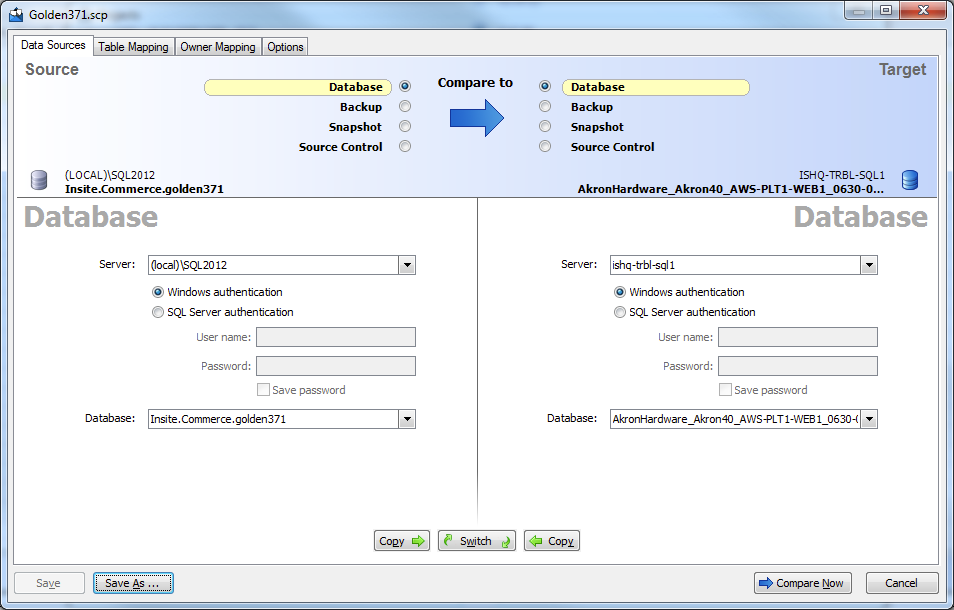

- You can start with the existing Golden371.scp file or set up your own project

- The compare is FROM the golden 371 db TO the db you want to upgrade (conforming the customer db to the target format)

- In terms of Options, we have the following checked on:

- Behavior

- Decrypt encrypted objects

- Ignore

- Permissions

- Whitespace

- User's permissions and role memberships

- Statistics

- Fill factor and index padding

- Filegroups, partition schemes and partition functions

- User properties

- WITH element order

- Database and server name in synonyms

- Behavior

Select the correct database as the TO database (the one you are working with)

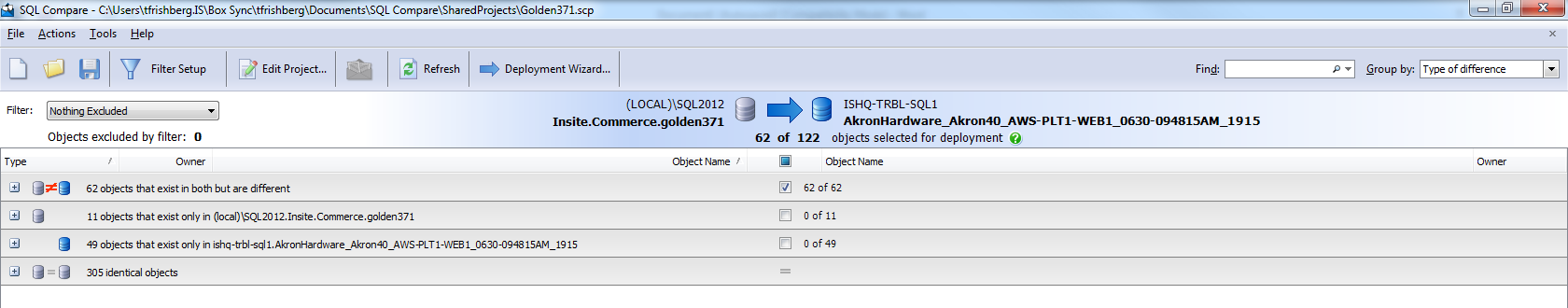

Click Compare Now - you will get something that looks like the following:

You will want to open up each of the item groups to determine its proper disposition

Different objects

- Most of the differences will likely be constraints and constraint names - in general you should be able to apply all changes in the objects that are different to ensure that the db conforms to the standard/golden 3.71 and will convert up. To the extent that there is something custom in the db, that should NOT be conformed or it will be lost.

- There is no particularly easy way to do this - go through these one at a time. Even indexes may not be valid any longer if, for example, you are going from a site using SQL faceting to Lucene.

- Some of the differences may need to be checked in the db - for example, I ran into a db where Company.WebSiteId was set as NULLable and in the golden db it is not nullable - I manually checked that the script would not error out because the data had nulls in the field.

- Another example was a field called Customer.CustomLandingPage which is not in the golden version - I checked to ensure there was no valid value other than NULL and empty string.

- Custom tables such as "batch" integration tables can come over as is and cleaned up after the upgrade or they can be removed prior to - this is up to the person conducting the transition.

- Record questions that may arise in a document to evaluate with the implementation and/or integration specialist. This will apply mostly to custom fields added to standard tables which is NOT a recommended process.

- Make sure NOT to select Users to synch or they will be deleted - only tables, stored procedures, functions, and schema

Objects that exist only in to DB

- For any tables, stored procedures, or functions, make sure that these are applied - these are the new things that were missed in the upgrade - there should be very few

- Do NOT transfer users from the golden db to the live db

Objects that exist only in from DB

- Scan each one and for tables, check to see if they have any data

- If they don't have any data, it is a good candidate to mark for synch which will effectively delete them. Remember that if something exists only in the FROM db, marking it to synch will effectively remove it.

- Stores Procedures

- Most likely to leave these alone and let them come over - it would be good practice to remove them once you've confirmed they are no longer used in custom code

- Users

- Mostly leave the users unchecked and let them come over - if there are obsolete users, now is a good time to eliminate them by checking them on for synch

Click the deployment wizard

-

Create a deployment script

- Back up the target if you wish or do it manually

- Check the "deploy all dependencies" option

- Click Next

- Review the warnings to see if you missed anything and make changes as needed - this set should tell you the possible problems

- Click the Deployment Script table and copy script to the clipboard

- Paste the script into the SQL Management Studio

-

Run the Script

-

Make sure you are selected to the correct db

-

Make sure you have a good backup of the database

-

Run the script

- If there are errors, the way the script is written, you should be able to simply fix the script and re-run it

- An example may be that there is some code that is not pertinent to the current site such as a stored procedure for another ERP

- You may run into unique indexes being added that show up duplicate records - these must be fixed up in order for the system to run - this will be a much bigger issue moving into the 4x db since we've added a lot more unique constraints to tables with natural keys. You can delete and/or locate these duplicates using scripts such as the following:

DELETE FROM ApplicationMessage WHERE applicationmessageId IN ( SELECT applicationmessageId FROM ( SELECT applicationmessageId ,ROW_NUMBER() OVER (PARTITION BY Name ORDER BY applicationmessageId) AS [ItemNumber] FROM ApplicationMessage ) a WHERE ItemNumber > 1 -- Keep only the first unique item ) -

You can either just select the SELECT section to find them or select the entire thing to delete. An even easier method is to wrapper it in a BEGIN TRANSACTION/ROLLBACK until you are satisfied and then eliminate it or change ROLLBACK to COMMIT

-

Steps 3-5

- Apply the PreBatch script (4.0_Master_Prebatch)

- If you receive any errors, you must identify what they are and get the data cleaned up to avoid them. The script was generated to try to avoid these problems and remove duplicate records where we found common issues but these may crop up again in the BatchSynch script.

- You may get a list of duplicate AttributeValues (showing FilterValueId, FilterSectionId, and Value) - these are a bit nasty to fix, but they need to be fixed prior to running the BatchSynch script.

- Essentially you need to query the db for anything with the same sectionID and Value and determine which items are associated to each - generally you will find that one of them is assigned and the other isn't so you can simply delete the one with nothing associated with it.

- If, however, you find products associated with multiple filter section/value combinations, you will need to pick one as the 'master' and update them to the new FilterValueId before you delete the filter section value.

- Because the master synch will do everything in a single transaction, the transaction log will get very large for this operation. You may want to go in an adjust the file settings to allow it to grow by bigger increments than normal for this process.

- Apply the Batch Synch script (4.0_Master_BatchSynch) - this should execute without failure but may need some "help"

- We may not have synched up all the constraints - this script tries to drop all constraints it wants to rename so if it tries to drop a constraint that is not valid it will error out. The easiest way to fix it is to find it and delete the ALTER TABLE code along with the IF @@Error<>0 SET NOEXEC ON code

- The other problem you may encounter are duplicate records on tables where we have added unique index constraints. If you encounter this, you can either handle each record as it comes or use the scriptlet from above to identify and remove duplicates.

- Continue down this path until the full script executes

- Apply the PostBatch script (4.0_Master_PostBatch) - this should execute without failures

- There really should be no problems in

- Optionally you may go in and shrink the database back down and return the log file growth size to its original size to free up the large amount of space taken during this upgrade process.

Step 6 - Application/website configuration settings

The following are application settings or website configurations you should review to ensure they conform well to 4x. Some settings are important to change for pilot/testing as noted

Type | Setting | Value |

|---|---|---|

App Setting | AutoApplyPromotions | s/b set to False in 4x - the code will call the recalculate as needed |

App Setting | AutoCalculateTax | s/b set to False in 4x - the code will call the recalculate as needed |

App Setting | Configured Commerce Mode | Set to Development for testing (I believe this is now obsolete) |

App Setting | PaymentGateway | s/b set to Dummy for pilot/testing |

App Setting | PriceCalculator | Review to see what it should be set to for the ERP/custom to be used |

App Setting | ProductSearchProvider | Probably should be Lucene if upgrading from an older site |

App Setting | StoreEncryptedCC | Should always be set to FALSE |

App Setting | TaxCalculator | Likely to set to Generic for pilot but may set to a live tax service |

Website | PricingService | Determine correct value if changing moving to 4.0 |

Finalize

- It is wise to shrink the logfile back down before you take a backup of the db and move it back into a production environment or just shrink it down in general.

- Right-click the database in the Databases list in the SQL Management Studio's object explorer

- Select Tasks | Shrink | Files

- Select the FileType as Log, shrink action is defaulted to Release unused space

- Say ok - this will clear out and reset the log file

- Back up the database, zip it up and move it to the targeted location, unzip it and restore it

- You may have to manually change the WebSite.DomainName if the pilot name of the site has changed

- If you are restoring to a new named instance, you will have another issue with the user since the one in the db is from the old database, you will have to set up a new security user with the new name and membership of dbo to match up so that you can access the new site.

- Once you can successfully get into the site, check the application logs to ensure that any additional 4x scripts ran successfully.

Updated 6 months ago