Analyze results

How to analyze the results of your experiments using the Reports page for Optimizely Feature Experimentation.

The Reports page helps you interpret experiment metrics using Optimizely's Stats Engine, a unique approach to statistics for the digital age developed with leading researchers at Stanford University. Stats Engine combines sequential testing with false discovery rate control to deliver speed and accuracy for businesses making decisions based on real-time data.

Note

Previous versions of Feature Experimentation (named Full Stack), Optimizely Web Experimentation, and Optimizely Performance Edge refer to the Reports page as the Results page. Both pages show your experiment results, but the Optimizely Feature Experimentation Reports page shows results for all experiments across all environments.

When you run an experiment, explore the Reports page to learn how users respond to it.

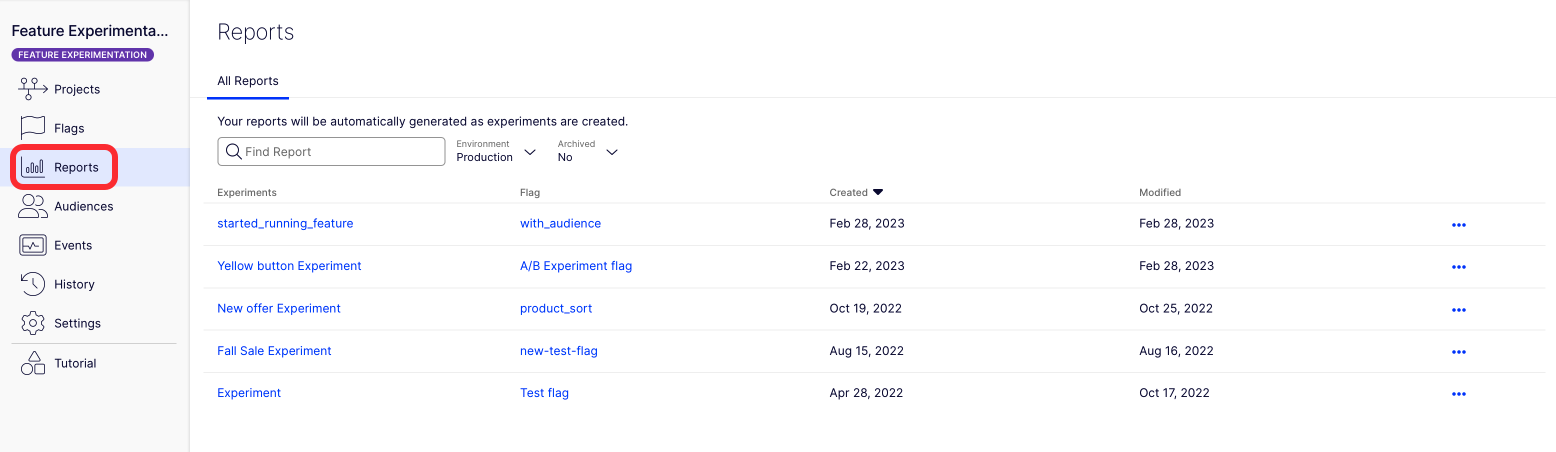

The Reports page

For more information, see the following:

- The Experiment Results page.

- How Optimizely calculates results.

- Statistical Significance at Optimizely Experimentation.

- To get to meaningful stats sooner, use Stats Accelerator.

Optimizely Feature Experimentation A/B tests do not currently support retroactive results calculation.

Note

When you use deliveries, Optimizely Feature Experimentation does not send impressions to the Reports page, because deliveries are for rolling out flags that have already been tested in experiments. To measure the impact of a feature flag, run an experiment instead.

Segment results

Segment your results to see how different groups of users behave compared to users overall.

By default, Optimizely Feature Experimentation shows results for all users who enter your experiment. However, not all users behave like your average users. Optimizely Feature Experimentation lets you filter your results so you can see if certain groups of users behave differently from your users overall. This is called segmentation.

For example, imagine you run an experiment with a pop-up promotional offer. This generates a positive lift overall, but when you segment for users on mobile devices, it is a statistically significant loss. Maybe the pop-up is disruptive or difficult to close on a mobile device. When you implement the change or run a similar experiment in the future, you might exclude mobile users based on what you have learned from segmenting.
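To make the pop-up example concrete, here is a minimal Python sketch that computes a naive relative lift overall and for each segment. It is not how Stats Engine calculates results. It ignores statistical significance entirely and only shows how a pooled, positive lift can hide a negative lift within one segment. The `Counts` type and the `relative_lift` and `lift_by_segment` functions are hypothetical names. Pooling also assumes that each user has exactly one value of the segmenting attribute (for example, a single device type), so segments do not overlap.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Visitors and conversions for one variation within one segment."""
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_lift(control: Counts, treatment: Counts) -> float:
    """Relative improvement of treatment over control (0.05 means +5%)."""
    if control.rate == 0:
        raise ValueError("control conversion rate is zero; lift is undefined")
    return (treatment.rate - control.rate) / control.rate


def lift_by_segment(
    data: dict[str, dict[str, Counts]],
) -> tuple[float, dict[str, float]]:
    """Return (overall lift, lift per segment value).

    `data` maps a segment value (hypothetical, e.g. "mobile") to
    {"control": Counts, "treatment": Counts}. The overall figure pools
    all segments, which is only valid if segments do not overlap.
    """
    per_segment = {
        seg: relative_lift(arms["control"], arms["treatment"])
        for seg, arms in data.items()
    }
    pooled = {
        arm: Counts(
            visitors=sum(arms[arm].visitors for arms in data.values()),
            conversions=sum(arms[arm].conversions for arms in data.values()),
        )
        for arm in ("control", "treatment")
    }
    overall = relative_lift(pooled["control"], pooled["treatment"])
    return overall, per_segment
```

A positive `overall` value alongside a negative value for one segment is the pattern described above. Use a statistically sound analysis, such as the Reports page, before acting on it.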

Segmenting results is one of the best ways to gain deeper insight beyond the average user's behavior. It is a powerful way to step up your experimentation program.

Note

Use segments and filters only for data exploration, not for making decisions.

Experiments do not include "out-of-the-box" attributes such as browser, device, or location (these attributes are included in Optimizely Web Experimentation). Optimizely Feature Experimentation's SDKs are platform-agnostic: Optimizely does not assume which attributes are available in your application or what format they use. In experiments, all segmentation is based on the custom attributes that you create.

Here is how to set attributes:

- Create the custom attributes that you want to use for Reports page segmentation.

- Target audiences based on your custom attributes.

- Pass the custom attributes into the user context for your experiment or app.
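Because Feature Experimentation has no built-in browser or device attributes, your application decides what to send. The following minimal Python sketch derives attribute values from data the app already has. The attribute keys `device_type` and `plan` and the function `build_user_attributes` are hypothetical. Your keys must match the custom attributes you created in your Optimizely project, and the user-agent check is only a crude illustration.

```python
def build_user_attributes(user_agent: str, plan: str) -> dict[str, object]:
    """Build a dictionary of custom attributes for one user.

    The keys "device_type" and "plan" are hypothetical examples; use the
    attribute keys defined in your own Optimizely project.
    """
    ua = user_agent.lower()
    # Crude heuristic for illustration only; real device detection is harder.
    is_mobile = any(token in ua for token in ("mobile", "android", "iphone"))
    return {
        "device_type": "mobile" if is_mobile else "desktop",
        "plan": plan,
    }
```

Pass the resulting dictionary as the attributes when you create the user context. See the Create User Context topic for your SDK for the exact call.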

For an example, see the Create User Context topic in your language's SDK reference documentation:

- Android SDK

- C# SDK

- Flutter SDK

- Go SDK

- Java SDK

- JavaScript SDK – SDK versions 6.0.0 and later.

- JavaScript (Browser) SDK – SDK versions 5.3.5 and earlier.

- JavaScript (Node) SDK – SDK versions 5.3.5 and earlier.

- PHP SDK

- React SDK

- React Native SDK

- Ruby SDK

- Swift SDK

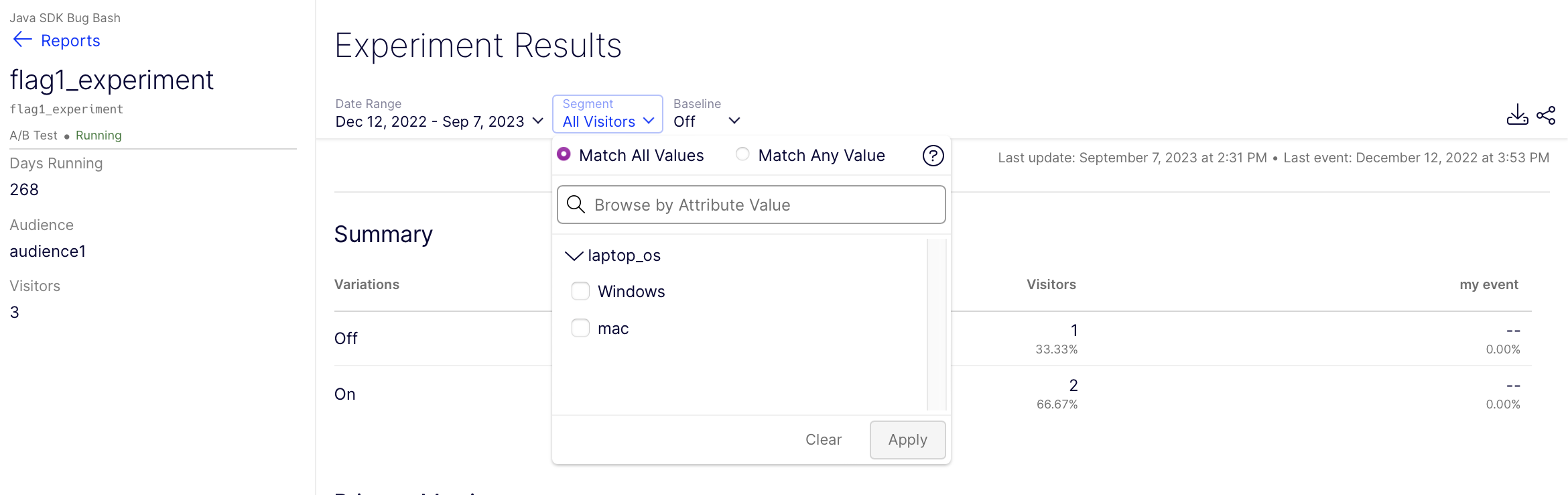

After you define custom attributes and pass them in the SDK, they become available as segmentation options in the drop-down menu at the top of the Reports page.

Interpret the reports page

You can segment your entire Reports page or the results for an individual metric. Segmenting results helps you get more out of your data by revealing how different groups of users behave.

- Navigate to your Reports page.

- Click Segment and select an attribute from the drop-down menu.

Note

For Optimizely Feature Experimentation to use an attribute for segmentation, the attribute must be defined in the datafile and included in the user context. However, the attribute does not have to be used in an audience for the experiment.
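The two conditions in the note can be expressed as a set intersection. This minimal Python sketch assumes the datafile has been parsed into a dictionary that lists attributes under an `attributes` array of objects with a `key` field. The function name `segmentable_attributes` is hypothetical. Whether an audience uses the attribute does not affect the result.

```python
def segmentable_attributes(
    datafile: dict, user_attributes: dict[str, object]
) -> set[str]:
    """Return attribute keys that can be used for segmentation.

    A key qualifies only if it is defined in the datafile (assumed to be
    under "attributes", each with a "key" field) AND present in the user
    context's attributes. Audience usage is deliberately not checked.
    """
    defined = {attr["key"] for attr in datafile.get("attributes", [])}
    return defined & set(user_attributes)
```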

When you segment results, a user who belongs to more than one segment is counted in every segment they belong to. However, if a user has more than one value for a single segment (attribute), the user is counted only under the last value seen for them in the session.
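The following minimal Python sketch models these counting rules. It assumes a time-ordered list of `(user_id, attribute_key, value)` observations from one session. `count_users_by_segment` is a hypothetical helper for reasoning about the rules, not Optimizely's implementation. The support article on how visitors and conversions are counted in segments remains authoritative.

```python
from collections import defaultdict


def count_users_by_segment(
    events: list[tuple[str, str, str]],
) -> dict[str, dict[str, int]]:
    """Count users per attribute value.

    A user is counted under every attribute they have, but for each
    attribute only their last-seen value in the session counts.
    """
    last_seen: dict[tuple[str, str], str] = {}
    for user_id, key, value in events:
        # Later observations overwrite earlier ones for the same user and key.
        last_seen[(user_id, key)] = value

    counts: defaultdict[str, defaultdict[str, int]] = defaultdict(
        lambda: defaultdict(int)
    )
    for (_user_id, key), value in last_seen.items():
        counts[key][value] += 1
    return {key: dict(values) for key, values in counts.items()}
```

For example, a user first seen as `mobile` and later as `desktop` counts once, under `desktop`. If that user also has a `plan` value, they are counted under that value too.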

See our support article on how visitors and conversions are counted in segments.


See the following documentation for more information: