Optimizely Agent

Overview of Optimizely Feature Experimentation Agent.

Optimizely Agent is a standalone, open-source and highly available microservice that provides major benefits over using Optimizely Feature Experimentation SDKs in certain use cases. The Agent REST API Config Endpoint offers consolidated and simplified endpoints for accessing all the functionality of the Optimizely Feature Experimentation SDKs.

Example implementation

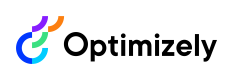

A typical production installation of Optimizely Agent runs two or more instances of the service behind a load balancer or proxy. You can run the service in a Docker container, on Kubernetes through Helm, or install it from source. See Configure Optimizely Agent for instructions on how to run Optimizely Agent.


Reasons to use Optimizely Agent

Here are some of the top reasons to consider using Optimizely Agent instead of a Feature Experimentation SDK:

Service-oriented architecture (SOA)

If you already separate some of your logic into services that might need to access the Optimizely decision APIs, Optimizely Agent is a good solution.

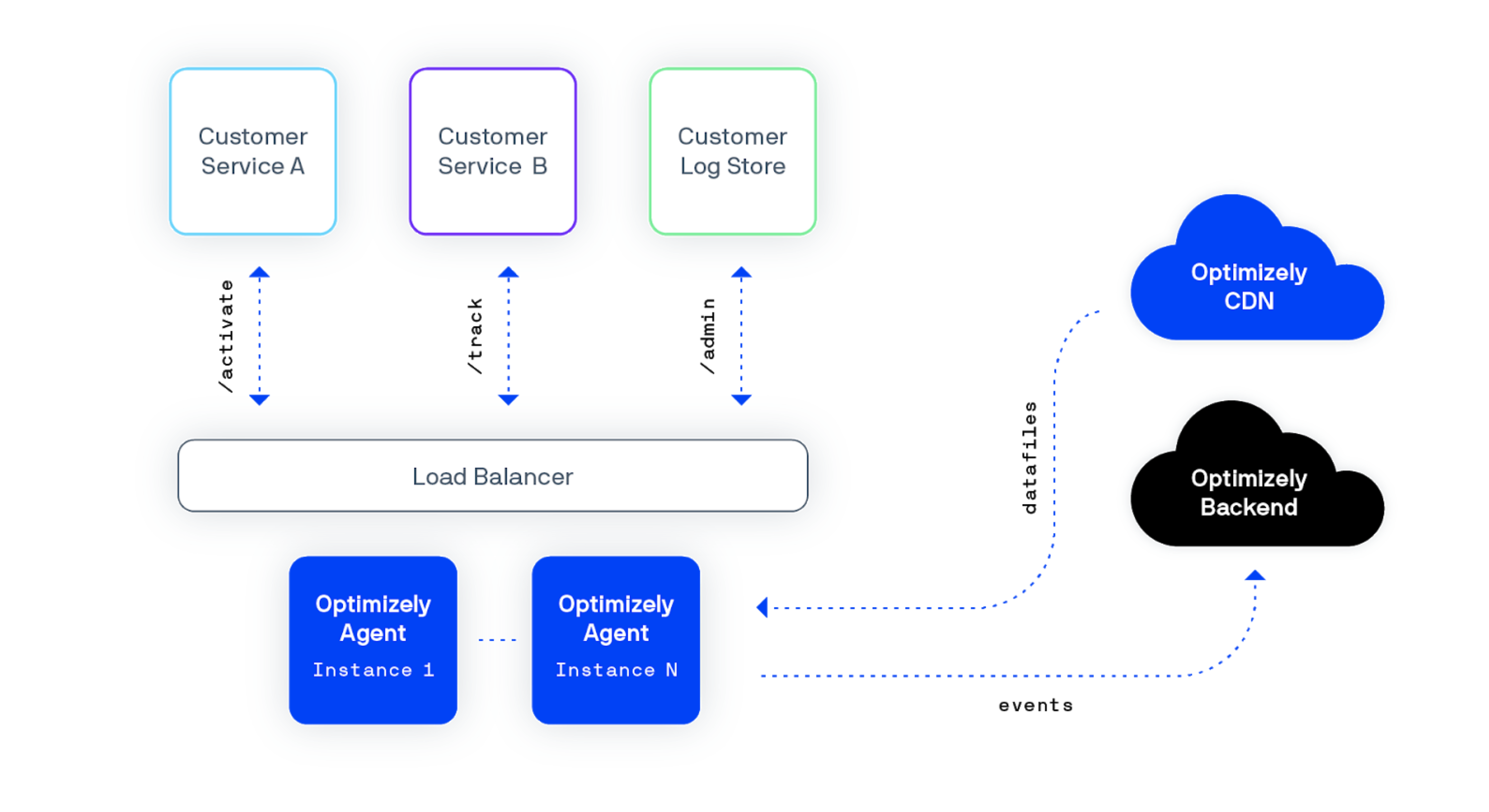

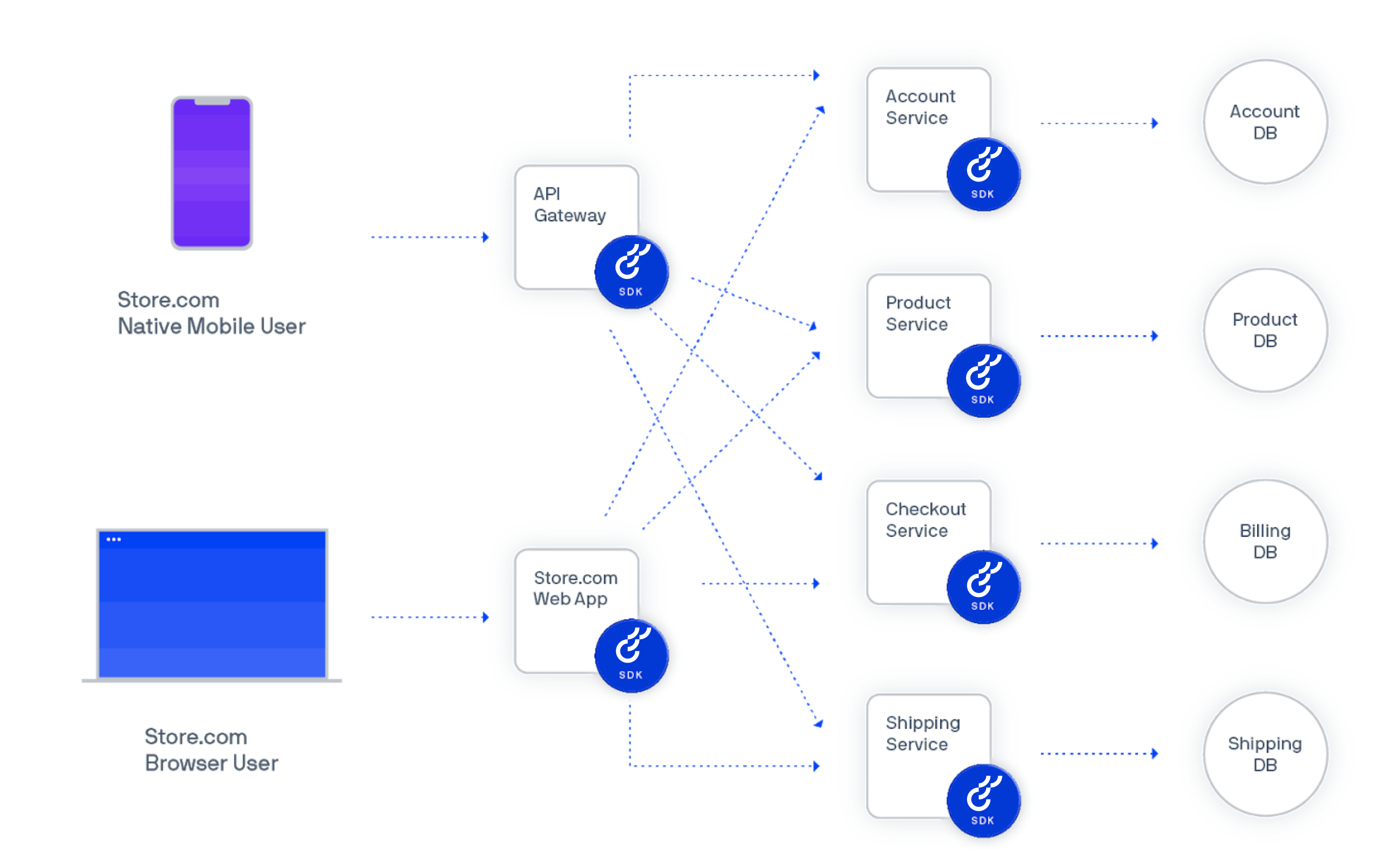

The following images compare implementation styles in a service-oriented architecture.

The first shows the architecture without Optimizely Agent, with six embedded SDK instances.

A diagram showing the use of SDKs installed on each service in a service-oriented architecture


The second shows the architecture with Optimizely Agent. Instead of installing the SDKs six times, you create just one Optimizely instance: an HTTP API that every service can access as needed.

A diagram showing the use of Optimizely Agent in a single service


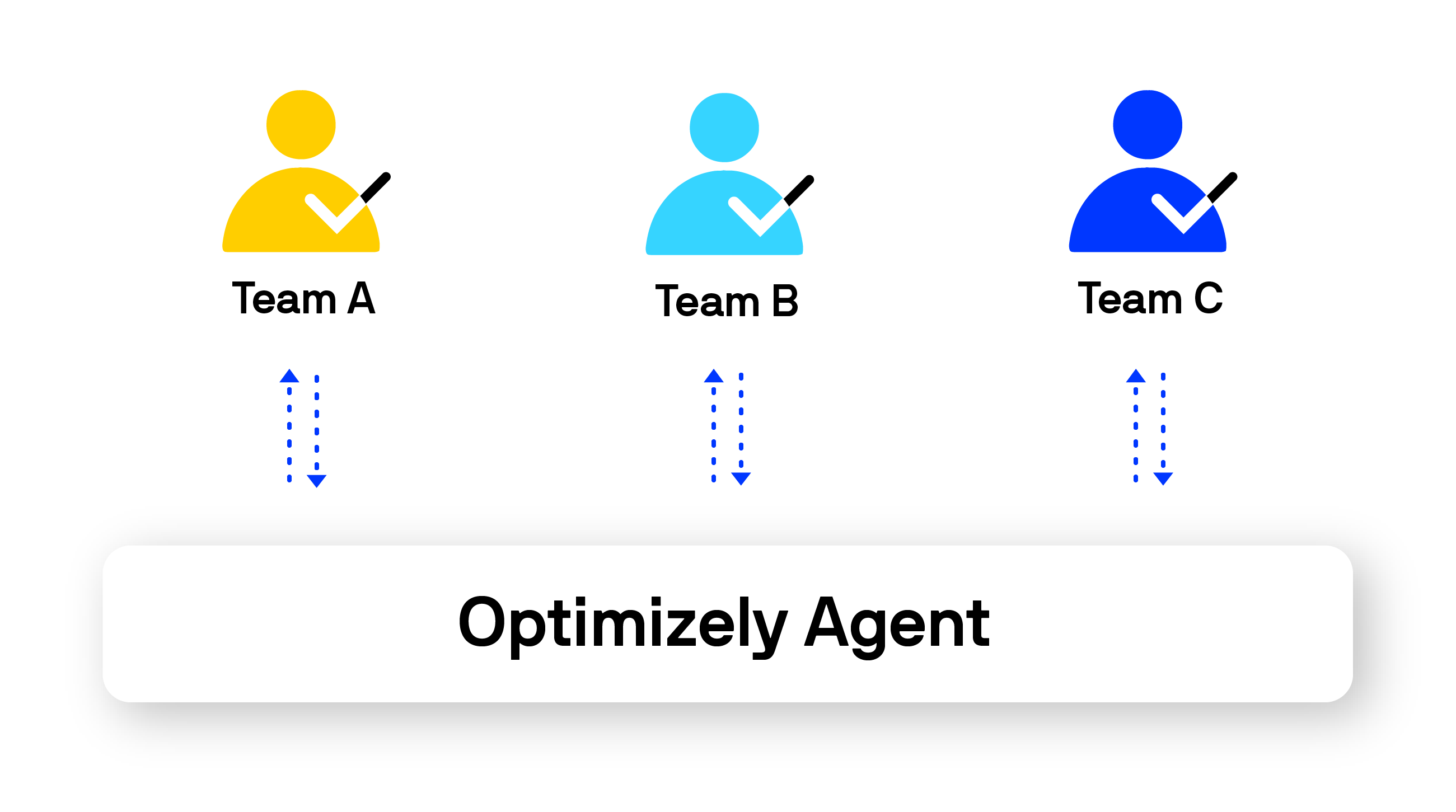
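
As a rough illustration of what "an HTTP API that every service can access" can look like from a calling service, here is a minimal sketch that builds a decision request using only the Python standard library. The address `http://localhost:8080`, the `/v1/decide` path, the `X-Optimizely-SDK-Key` header, and the request body shape are assumptions for illustration and should be checked against the Agent REST API reference for your version. The helper names `build_decide_request` and `decide` are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumptions for illustration: adjust to your own deployment.
AGENT_URL = "http://localhost:8080"  # address of Agent (or its load balancer)
SDK_KEY = "YOUR_SDK_KEY"             # placeholder, not a real key


def build_decide_request(user_id, flag_key, attributes=None):
    """Build (but do not send) a POST request asking Agent for a flag decision."""
    body = json.dumps(
        {"userId": user_id, "userAttributes": attributes or {}}
    ).encode("utf-8")
    query = urllib.parse.urlencode({"keys": flag_key})
    return urllib.request.Request(
        url=f"{AGENT_URL}/v1/decide?{query}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Optimizely-SDK-Key": SDK_KEY,
        },
    )


def decide(user_id, flag_key, attributes=None, timeout=2.0):
    """Send the request to a running Agent and return the parsed JSON response."""
    request = build_decide_request(user_id, flag_key, attributes)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Any service that can make an HTTP call can use the same pattern, whatever language it is written in; only `decide` needs a reachable Agent instance.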

Standardized access across teams

Optimizely Agent is a good solution if you want to deploy Optimizely Feature Experimentation once and roll out the single implementation across many teams.

By standardizing your teams' access to the Optimizely service, you can better enforce processes and implement governance around feature management and experimentation as a practice.

A diagram showing the central and standardized access to the Optimizely Agent service across an arbitrary number of teams.


Network centralization

You might not want many SDK instances connecting to Optimizely's cloud service from every node in your application. Optimizely Agent centralizes your network connection: only one cluster of Agent instances connects to Optimizely for tasks like updating datafiles and dispatching events.

Preferred programming language is not offered as a native SDK

If you use a programming language that is not supported by a native SDK (for example, Elixir, Scala, or Perl), Optimizely Agent is a good fit. While you can create your own service using an Optimizely Feature Experimentation SDK of your choice, you could also customize the open-source Optimizely Agent to your needs without building the service layer on your own.

Considerations for Optimizely Agent

Before implementing Optimizely Agent, evaluate your configuration to ensure Agent aligns with your technical and business requirements. The following are aspects to consider rather than outright reasons not to use Optimizely Agent. If you decide not to use Optimizely Agent, you can implement one of Optimizely's open-source SDKs instead.

Latency

If virtually instant performance is critical, you should use an embedded Optimizely Feature Experimentation SDK instead of Optimizely Agent.

- Feature Experimentation SDK – Microseconds

- Optimizely Agent – Milliseconds

Architecture

If your app is constructed as a monolith, embedded SDKs might be easier to install and a more natural fit for your application and development practices.

Speed of getting started

If you are looking for the fastest way to get a single team up and running with deploying feature management and experimentation, embedding an SDK is the best option for you at first. You can always start using Optimizely Agent later, and it can even be used alongside Optimizely Feature Experimentation SDKs running in another part of your stack.

Important information about Agent

Scaling

Optimizely Agent can scale to large decision and event tracking volumes with relatively low CPU and memory specs. For example, at Optimizely, we scaled our deployment to 740 clients with a cluster of 12 Agent instances, using 6 vCPUs and 12 GB of RAM. You will likely need to focus more on network bandwidth than compute power.

Load balancer

Any standard load balancer should let you route traffic across your Agent cluster. At Optimizely, we used an AWS Elastic Load Balancer (ELB) for our internal deployment. This let us easily scale our Agent cluster as internal demands increased.

Datafile synchronization across Agent instances

Agent offers eventual consistency, rather than strong consistency, across datafiles.

Each Agent instance maintains a dedicated, separate cache and keeps a persistent SDK instance for each SDK key your team uses. Agent instances automatically keep the datafile up to date for each SDK key instance, so you will eventually have consistency across the cluster. You can set the datafile update rate with the configuration value OPTIMIZELY_CLIENT_POLLINGINTERVAL (the default is 1 minute).
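
To make the eventual-consistency behavior concrete, the following is a small simulation sketch, not Agent's actual implementation. It assumes each instance polls on its own schedule at a fixed interval (60 seconds, matching the default above). The class `AgentInstance`, the per-instance poll offsets, and the revision numbers are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

POLLING_INTERVAL_S = 60  # mirrors the 1-minute default polling interval


@dataclass
class AgentInstance:
    name: str
    poll_offset_s: int        # hypothetical: second at which this instance first polls
    cached_revision: int = 1  # datafile revision currently held in this instance's cache

    def tick(self, now_s: int, published_revision: int) -> None:
        # An instance refreshes its cache only on its own polling schedule.
        due = now_s >= self.poll_offset_s and (
            (now_s - self.poll_offset_s) % POLLING_INTERVAL_S == 0
        )
        if due:
            self.cached_revision = published_revision


def simulate(instances, publish_at_s, duration_s):
    """Return the first second at which every instance holds revision 2, else None."""
    for now_s in range(duration_s + 1):
        published = 2 if now_s >= publish_at_s else 1
        for instance in instances:
            instance.tick(now_s, published)
        if all(i.cached_revision == 2 for i in instances):
            return now_s
    return None


cluster = [
    AgentInstance("agent-a", poll_offset_s=0),
    AgentInstance("agent-b", poll_offset_s=20),
    AgentInstance("agent-c", poll_offset_s=45),
]
converged_at = simulate(cluster, publish_at_s=70, duration_s=300)
print(converged_at)  # 120
```

In this run the new datafile is published at second 70. Instances pick it up at seconds 80, 105, and 120, so for part of that window different instances can return decisions based on different revisions. Under these assumptions the whole cluster converges within one polling interval of the publish.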

If you require strong consistency across datafiles, you should use an active/passive deployment where all requests are made to a single vertically scaled host, with a passive, standby cluster available for high availability in the event of a failure.
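
The routing rule behind an active/passive deployment can be sketched as follows. In practice this decision usually lives in a load balancer or DNS failover configuration rather than in application code. The host names and the `is_healthy` callback are hypothetical and stand in for whatever health check your infrastructure provides.

```python
from typing import Callable, Optional, Sequence


def pick_host(
    active: str,
    standbys: Sequence[str],
    is_healthy: Callable[[str], bool],
) -> Optional[str]:
    """Send all traffic to the active host; use a standby only if the active host is down."""
    if is_healthy(active):
        return active
    for host in standbys:
        if is_healthy(host):
            return host
    return None  # nothing is healthy; the caller decides how to degrade


# Example with a stubbed health check (hypothetical host names).
healthy_hosts = {"agent-standby-1"}
target = pick_host(
    active="agent-active",
    standbys=["agent-standby-1", "agent-standby-2"],
    is_healthy=lambda host: host in healthy_hosts,
)
print(target)  # agent-standby-1
```

Because every request goes to one host at a time, all decisions are served from a single datafile cache, which is what gives this layout stronger consistency than a cluster of independently polling instances.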
