Implementation checklist

Important configuration details and best practices to employ while using Optimizely Feature Experimentation.

Before implementing Optimizely Feature Experimentation in a production environment, review the configuration details and best practices checklist to simplify implementation.

Architectural diagrams

The following diagrams demonstrate how you, your users, and Optimizely Feature Experimentation interact. Understanding these interactions is crucial for implementing Feature Experimentation effectively.

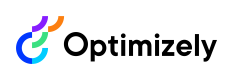

Overall architectural diagram

The overall architectural diagram provides a detailed view of Feature Experimentation's components and their interactions, illustrating how data flows between key entities. The diagram contains the following three main sections: Your implementation, Optimizely UI, and the Optimizely servers. It shows how these sections work together to support feature flagging and experimentation. It highlights interactions between components using Crow's foot relationship notation, a standard entity-relationship (ER) modeling technique that visually represents the types of relationships, such as 'one' or 'many,' between different parts of the application and event processing. See the key following the diagram.

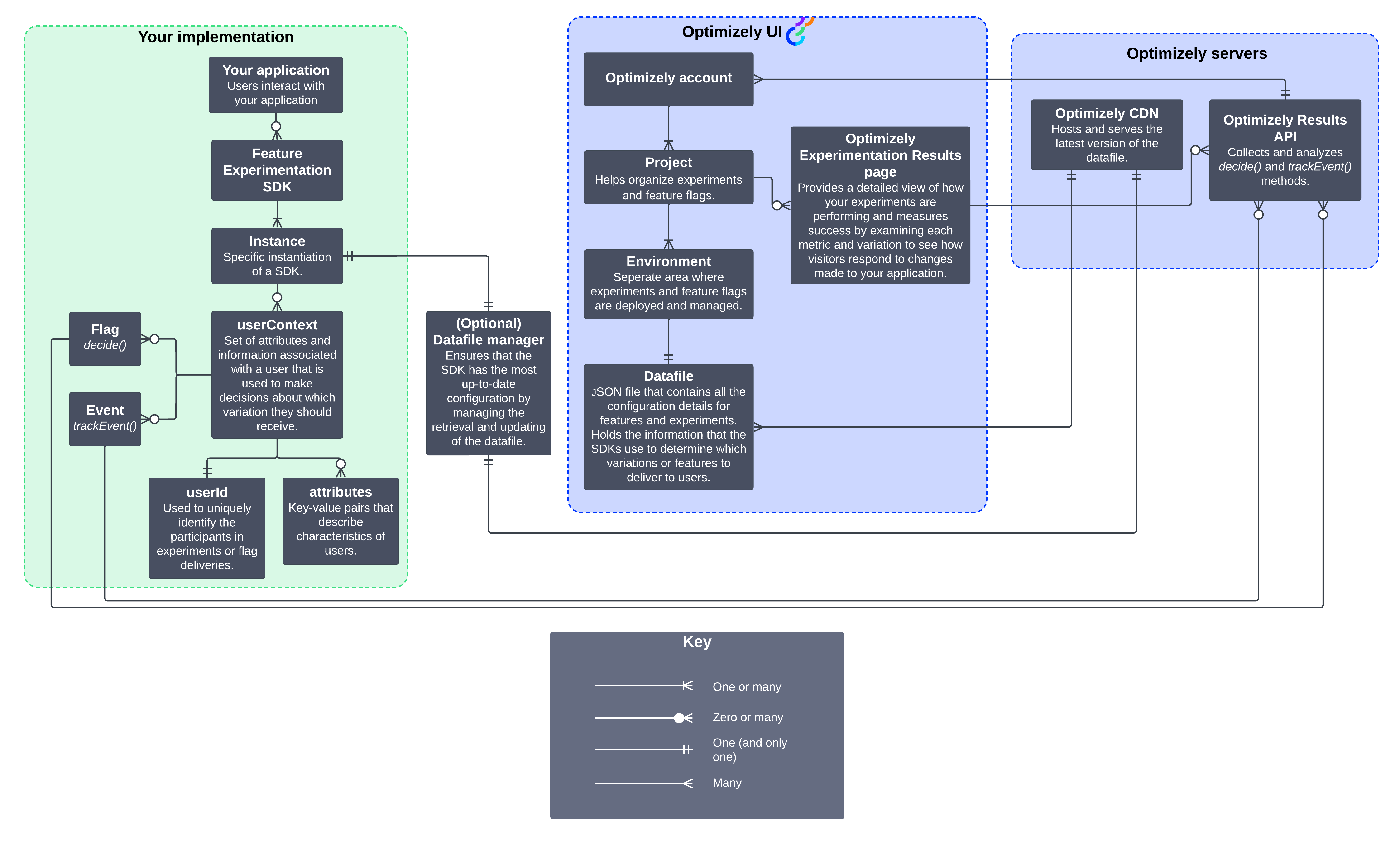

System context diagram

The system context diagram provides a broad overview of how the system interacts with external entities, including your users and third-party analytics systems. The diagram highlights key interactions, such as how applications send event data to Optimizely, how decision data flows back to Optimizely, and how integrations with external third-party analytics platforms enhance experimentation insights. This high-level overview explains the system's boundaries and its role within the broader experimentation and feature management ecosystem.

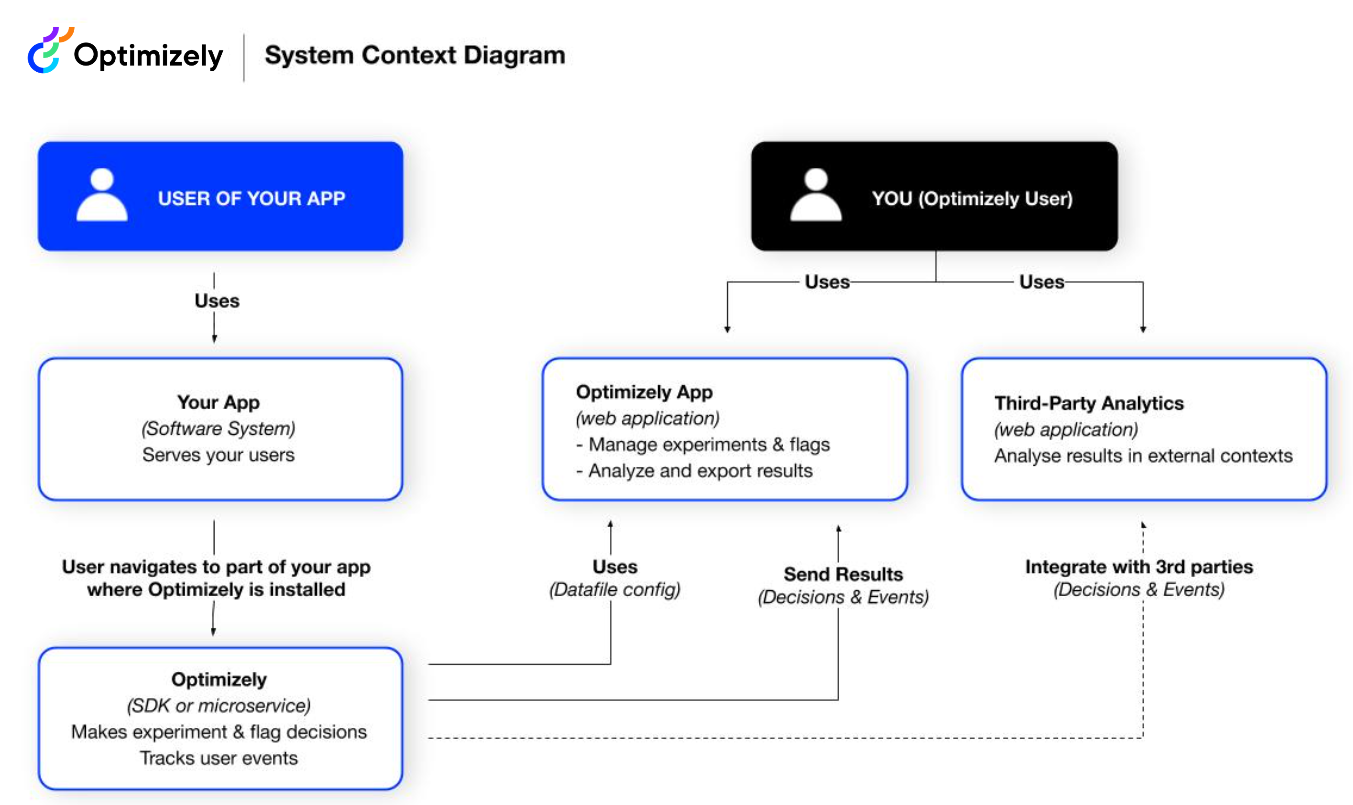

Feature Experimentation SDK and app container

The SDK and app container diagram shows how the Feature Experimentation SDKs integrate within an application to evaluate feature flags and experiments. It details the data flow between the SDK, the app container, and you, highlighting how you manage feature configurations, decision-making processes, and event tracking. The diagram annotates each connection with its format: network requests use JSON over HTTPS, and internal data exchanges use JSON. These annotations clarify how information flows between components.

Datafile management

The datafile is a JSON representation of the OptimizelyConfig. It contains the data needed to deliver and track your experiments and flag deliveries for an environment in your Optimizely Feature Experimentation project.

You have the following options for syncing the datafile between your Feature Experimentation project and your application:

- Pull method (recommended) – The Feature Experimentation SDKs automatically fetch the datafile at a configurable polling interval.

- Push method – Webhooks fetch and manage datafiles based on application changes. Use this method alone or in combination with polling if you need faster updates.

- Custom method – Fetch the datafile using the Optimizely CDN datafile URL.

Other important considerations for datafile management include the following:

- Caching and persistence.

- Synchronization between SDK instances.

- Network availability.
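The caching and network availability considerations above can be sketched as follows. This is a minimal illustration, not SDK code: `CachedDatafileLoader` and the `fetch` callable are hypothetical names, and `fetch` stands in for whatever retrieves the datafile JSON text in your application (polling, a webhook-triggered download, or a request to the CDN datafile URL).

```python
import json
from typing import Callable, Optional


class CachedDatafileLoader:
    """Hypothetical helper: returns the newest datafile, or the last good copy."""

    def __init__(self, fetch: Callable[[], str]) -> None:
        self._fetch = fetch  # assumed to return the datafile as JSON text
        self._last_good: Optional[dict] = None

    def load(self) -> Optional[dict]:
        try:
            datafile = json.loads(self._fetch())
        except (OSError, ValueError):
            # Network failure or malformed JSON: keep serving the cached copy.
            return self._last_good
        self._last_good = datafile
        return datafile
```

If the first fetch fails, `load()` returns `None`, so the application still needs a default behavior for the period before any datafile is available.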

Note: To ensure webhook requests originate from Optimizely, secure your webhook using a token in the request header.
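One common way to check such a header is to recompute a keyed hash of the raw request body and compare it with the value received. The sketch below assumes the header carries `sha1=` followed by a hex HMAC-SHA1 digest computed with a shared secret; that scheme, and the function name `is_valid_webhook`, are assumptions for illustration, so confirm the actual header name and format in the webhook documentation.

```python
import hashlib
import hmac


def is_valid_webhook(secret: str, body: bytes, signature_header: str) -> bool:
    """Return True when the header matches an HMAC-SHA1 of the raw body.

    Assumption: the header value looks like "sha1=<hex digest>".
    """
    digest = hmac.new(secret.encode("utf-8"), body, hashlib.sha1).hexdigest()
    expected = ("sha1=" + digest).encode("utf-8")
    # compare_digest runs in constant time to avoid leaking the digest.
    return hmac.compare_digest(expected, signature_header.encode("utf-8"))
```

Compute the digest over the raw request bytes, before any JSON parsing, because re-serializing the payload can change its byte representation.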

SDK configuration

The Feature Experimentation SDKs are configurable to meet the needs of any production environment. However, adequate scaling may require overriding some default behavior to better align with your application's needs.

Logger

Enable verbose logging. By default, each SDK uses a no-operation logger, which is intentionally non-functional out of the box. The SDKs provide a logger framework that is fully customizable and can support use cases like writing logs to an internal logging service or vendor. Create a logger that suits your needs and pass it to the Optimizely client.

For more information, see the documentation for the logger and the SimpleLogger reference implementation.
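As a rough sketch of a custom logger, the example below forwards messages to Python's standard `logging` module. `ServiceLogger`, its `log(level, message)` method, and `ListHandler` are hypothetical names chosen for illustration; the interface each SDK expects is described in its logger documentation. `ListHandler` stands in for a handler that ships records to an internal logging service.

```python
import logging
from typing import List


class ListHandler(logging.Handler):
    """Stand-in for a handler that forwards records to a logging service."""

    def __init__(self) -> None:
        super().__init__()
        self.messages: List[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(f"{record.levelname} {record.getMessage()}")


class ServiceLogger:
    """Hypothetical adapter that routes SDK log calls to stdlib logging."""

    def __init__(self, name: str, handler: logging.Handler) -> None:
        self._logger = logging.getLogger(name)
        self._logger.setLevel(logging.DEBUG)  # verbose: keep every level
        self._logger.addHandler(handler)

    def log(self, level: int, message: str) -> None:
        self._logger.log(level, message)
```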

Error handler

In a production environment, you must handle errors consistently across the application. The Optimizely Feature Experimentation SDKs let you provide a custom error handler to catch configuration issues like an unknown experiment key or unknown event key. This handler should let the application fail gracefully, maintaining a normal user experience. It should also send alerts to an external service, such as Sentry, to notify the team of an issue.

Important: If you do not provide a handler, errors do not surface in your application.
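A handler with these two properties (report the problem, never interrupt the user) might look like the sketch below. `ReportingErrorHandler`, `handle_error`, and the `report` callable are hypothetical names; `report` stands in for a call to your alerting service, and the exact handler interface depends on the SDK you use.

```python
from typing import Callable


class ReportingErrorHandler:
    """Hypothetical handler: reports the error and lets the app continue."""

    def __init__(self, report: Callable[[Exception], None]) -> None:
        self._report = report  # for example, a function that alerts the team

    def handle_error(self, error: Exception) -> None:
        try:
            self._report(error)
        except Exception:
            # A failure in the alerting path must not affect the user.
            pass
```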

User profile service

Building a user profile service (UPS) helps keep each user's variation assignment consistent when test configuration settings change.

The Optimizely Feature Experimentation SDKs bucket users using a deterministic hashing function. This means that the user evaluates to the same variation as long as the datafile and user ID are consistent. However, when test configuration settings are updated (such as adding a new variation or changing traffic allocation), the user's variation may change and alter the user experience. See How bucketing works in Optimizely Feature Experimentation.
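The effect described above can be illustrated with a simplified bucketing sketch. It is not the SDK's algorithm: it uses SHA-256 from the standard library as a stand-in hash, and the bucket range of 10,000, the function names, and the allocation format are assumptions made for the example.

```python
import hashlib
from typing import List, Optional, Tuple

MAX_BUCKETS = 10_000  # assumed bucket range for this sketch


def bucket_value(user_id: str, experiment_id: str) -> int:
    """Map a user and experiment to a stable number in [0, MAX_BUCKETS)."""
    key = f"{user_id}{experiment_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()  # stand-in for the SDK's hash
    return int.from_bytes(digest[:4], "big") % MAX_BUCKETS


def assign(user_id: str, experiment_id: str,
           allocation: List[Tuple[str, int]]) -> Optional[str]:
    """allocation: (variation, end_of_range) pairs in ascending order."""
    value = bucket_value(user_id, experiment_id)
    for variation, end_of_range in allocation:
        if value < end_of_range:
            return variation
    return None  # user falls outside the allocated traffic


two_way = [("a", 5000), ("b", 10000)]
three_way = [("a", 3333), ("b", 6666), ("c", 10000)]
before = assign("user_123", "exp_1", two_way)
after = assign("user_123", "exp_1", three_way)
```

The bucket value for `user_123` never changes, but the ranges it is compared against do, so `before` and `after` can differ once a third variation is added.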

A UPS solves this by persisting information about the user in a datastore. At a minimum, your UPS should create a mapping of user ID to variation assignment. Implementing a UPS requires exposing a lookup and save function that returns or persists a user profile dictionary. This service relies on consistent user IDs across all use cases and sessions.

Note: You should cache the user information after the first lookup to speed up future lookups.

For example, using Redis or Cassandra for the cache, you can store user profiles in a key-value pair mapping. You can use a hashed email address mapping to a variation assignment. To keep sticky bucketing for six hours, set a time to live (TTL) on each record. As Optimizely buckets each user, the UPS interfaces with this cache and makes reads and writes to check assignments before bucketing normally.
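A minimal sketch of such a service follows, with an in-memory dictionary standing in for Redis or Cassandra. The class name, the injectable `clock`, and the profile layout (a `user_id` plus an `experiment_bucket_map` of experiment ID to variation ID) are assumptions for illustration; check the UPS documentation for your SDK for the exact dictionary shape it expects.

```python
import time
from typing import Callable, Dict, Optional, Tuple

SIX_HOURS = 6 * 60 * 60


class InMemoryUserProfileService:
    """Hypothetical UPS: lookup and save with a per-record TTL."""

    def __init__(self, ttl_seconds: float = SIX_HOURS,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, dict]] = {}

    def lookup(self, user_id: str) -> Optional[dict]:
        entry = self._store.get(user_id)
        if entry is None:
            return None
        expires_at, profile = entry
        if self._clock() >= expires_at:
            del self._store[user_id]  # expired: bucket normally again
            return None
        return profile

    def save(self, user_profile: dict) -> None:
        expires_at = self._clock() + self._ttl
        self._store[user_profile["user_id"]] = (expires_at, user_profile)


ups = InMemoryUserProfileService()
ups.save({
    "user_id": "user_123",
    "experiment_bucket_map": {"exp_1": {"variation_id": "var_a"}},
})
```

With a real datastore, the TTL would normally be set on the record itself (for example, a key expiry) rather than checked in application code.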

Build an SDK wrapper

Many developers use wrappers to encapsulate SDK functionality and simplify maintenance. You can do this for the previous configuration options.
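One possible shape for such a wrapper is sketched below. It deliberately avoids naming any SDK method: `evaluate` is a hypothetical callable that you would implement with your SDK's decision call, and `on_error` is a hypothetical hook for your error handler. The point of the sketch is that application code depends only on `FlagService`, so SDK configuration and failure behavior live in one place.

```python
from typing import Callable, Optional


class FlagService:
    """Hypothetical wrapper: one entry point with a safe default."""

    def __init__(self,
                 evaluate: Callable[[str, str, dict], bool],
                 on_error: Callable[[Exception], None]) -> None:
        self._evaluate = evaluate  # assumed to call the SDK internally
        self._on_error = on_error

    def is_enabled(self, flag_key: str, user_id: str,
                   attributes: Optional[dict] = None,
                   default: bool = False) -> bool:
        try:
            return bool(self._evaluate(flag_key, user_id, attributes or {}))
        except Exception as error:
            # Report the problem and fall back to the default experience.
            self._on_error(error)
            return default
```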

Environments

Optimizely Feature Experimentation's environments let you confirm behavior and run tests in isolated environments, like development or staging. This makes it easier to deploy tests in production safely. Environments are customizable and should mimic your team's workflow. Most customers use two environments, development and production. This lets engineering and QA teams inspect tests safely in an isolated setting while site visitors are exposed to tests running in the production environment.

View production as your real-world workload. A staging environment should mimic all aspects of production so you can test before deployment. In these environments, all aspects of the SDK, including the dispatcher and logger, should be production-grade. In local environments like test or development, it is okay to use the out-of-the-box implementations instead.

Environments are kept separate and isolated from each other with their own datafiles. For added security, Optimizely Feature Experimentation lets you create secure environments, which require authentication for datafile requests. The Feature Experimentation server-side SDKs support initialization with these authenticated datafiles. You should use these secure environments only in projects that exclusively use server-side SDKs and implementation. If you fetch the datafiles in a client-side environment, they may become accessible to end users.

User IDs and attributes

User IDs identify the unique users in your tests. In a production setting, it is especially important to carefully choose the type of user ID and set a broader strategy for maintaining consistent IDs across channels. See Handle user IDs.

Attributes let you target users based on specific properties. In Feature Experimentation, you can define which attributes should be included in a test. Then, in the code itself, you can pass an attribute dictionary on a per-user basis to the SDK, which will determine which variation a user sees.

Note: Attribute fields and user IDs are always sent to Optimizely’s backend through impression and conversion events. You must responsibly handle fields (for example, email addresses) that may contain personally identifiable information (PII). Many customers use standard hash functions to obfuscate PII.
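As an illustration of hashing PII before it reaches the SDK, the sketch below replaces selected attribute values with SHA-256 digests. The function names, the optional `salt`, and the sample attribute keys are assumptions for the example, not part of any SDK.

```python
import hashlib
from typing import Dict, Set


def hash_pii(value: str, salt: str = "") -> str:
    """Return a SHA-256 hex digest of a normalized value."""
    normalized = value.strip().lower()
    return hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()


def sanitize_attributes(attributes: Dict[str, object],
                        pii_keys: Set[str]) -> Dict[str, object]:
    """Hash string values for the listed keys; leave other values as-is."""
    return {
        key: hash_pii(value) if key in pii_keys and isinstance(value, str) else value
        for key, value in attributes.items()
    }


safe = sanitize_attributes(
    {"email": "Person@Example.com", "plan": "premium"},
    pii_keys={"email"},
)
```

Normalizing before hashing keeps the resulting ID consistent when the same email arrives with different casing or whitespace. An unsalted hash of a guessable value such as an email address can still be reversed by brute force, so treat hashing as obfuscation rather than anonymization.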

Integrations

Build custom integrations with Optimizely Feature Experimentation using a notification listener. Use notification listeners to programmatically observe and act on various events within the SDK and enable integrations by passing data to external services.

The following are a few examples:

- Send data to an analytics service and report that user_123 was assigned to variation A.

- Send alerts to data monitoring tools like New Relic and Datadog with SDK events to better visualize and understand how A/B tests can affect service-level metrics.

- Pass all events to an external data tier, like a data warehouse, for additional processing and to leverage business intelligence tools.
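The first example above could be implemented with a callback like the one sketched here. The callback's argument list (`decision_type`, `user_id`, `attributes`, `decision_info`) and the `variation_key` field are assumptions about what a decision notification carries, and `send` is a hypothetical function that forwards a payload to your analytics service. Registering the callback with the SDK is not shown; see the notification listener documentation for your SDK.

```python
from typing import Callable, Optional


def make_decision_listener(send: Callable[[dict], None]):
    """Build a callback that forwards decision details to an external service."""

    def on_decision(decision_type: str, user_id: str,
                    attributes: Optional[dict], decision_info: dict) -> None:
        send({
            "type": decision_type,
            "user_id": user_id,
            "variation": decision_info.get("variation_key"),  # assumed field
        })

    return on_decision


# Simulate a notification by calling the listener directly.
sent = []
listener = make_decision_listener(sent.append)
listener("flag", "user_123", {"plan": "premium"}, {"variation_key": "variation_a"})
```

Keep the work inside a listener small, or hand the payload to a queue, so that a slow analytics call does not delay the code path that triggered the notification.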

QA and test

Before you go live with your experiment, review the following documentation:

- QA and troubleshoot

- QA checklist

- Choose QA tests

- Allowlist

- Use a QA audience

- Use forced bucketing

- Troubleshoot

- History

If you have questions, contact Support. If you think you have found a bug, file an issue in the SDK’s GitHub repository.
