Updated Feature Experimentation application UI

Explains the differences in the Optimizely application user interface between Full Stack Experimentation (legacy) and Feature Experimentation.

Note: For a full side-by-side comparison of Full Stack Experimentation (legacy) and Feature Experimentation, refer to our user documentation page on migration examples.

Experiments and Features in one tab

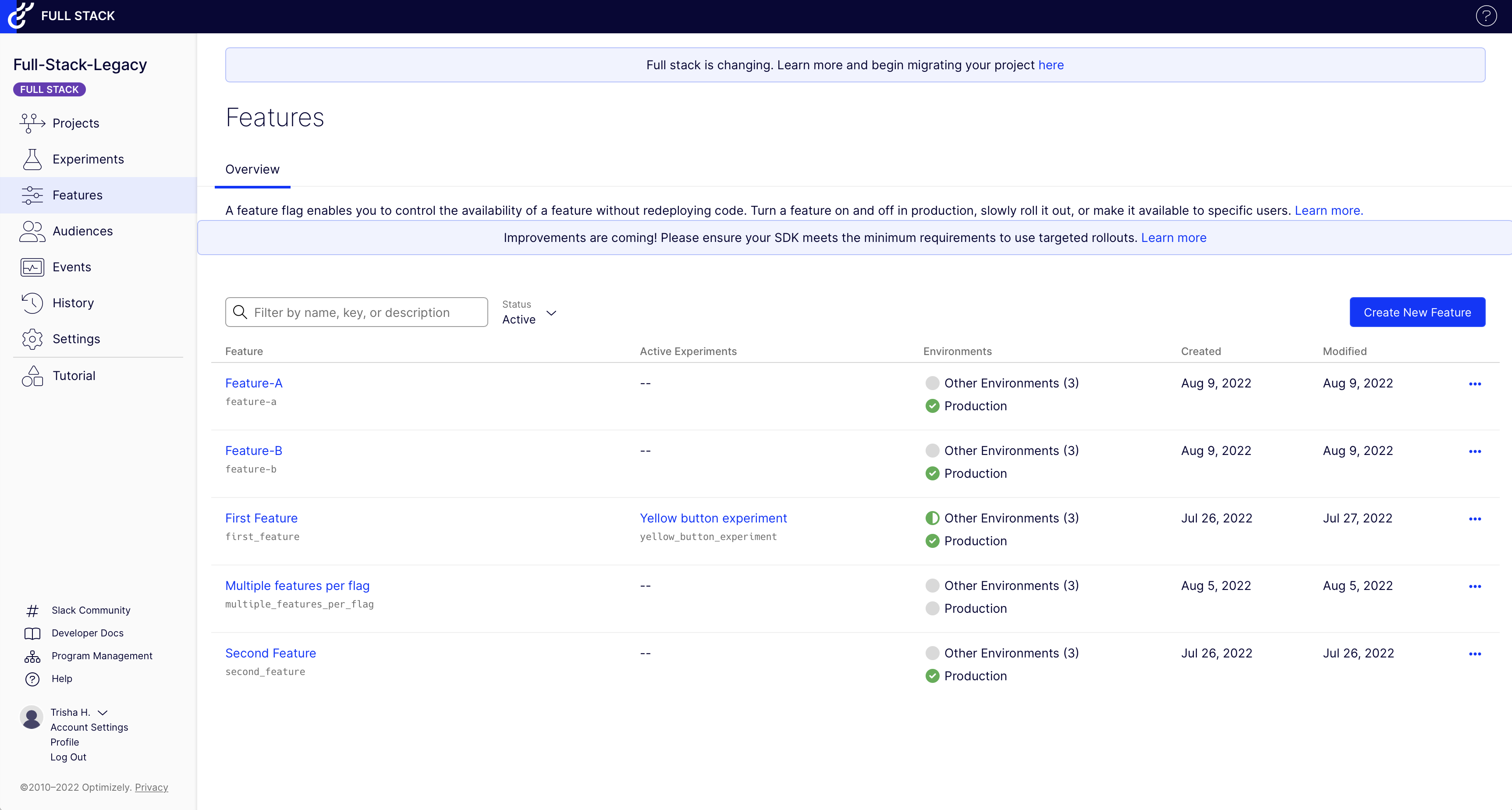

The Experiments and Features tabs have been replaced with a single Flags tab, which is the central dashboard for your flags and their rules. Everything starts with a flag, whether you are running an experiment or rolling out a feature. See Custom Flags Dashboard.

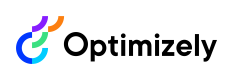

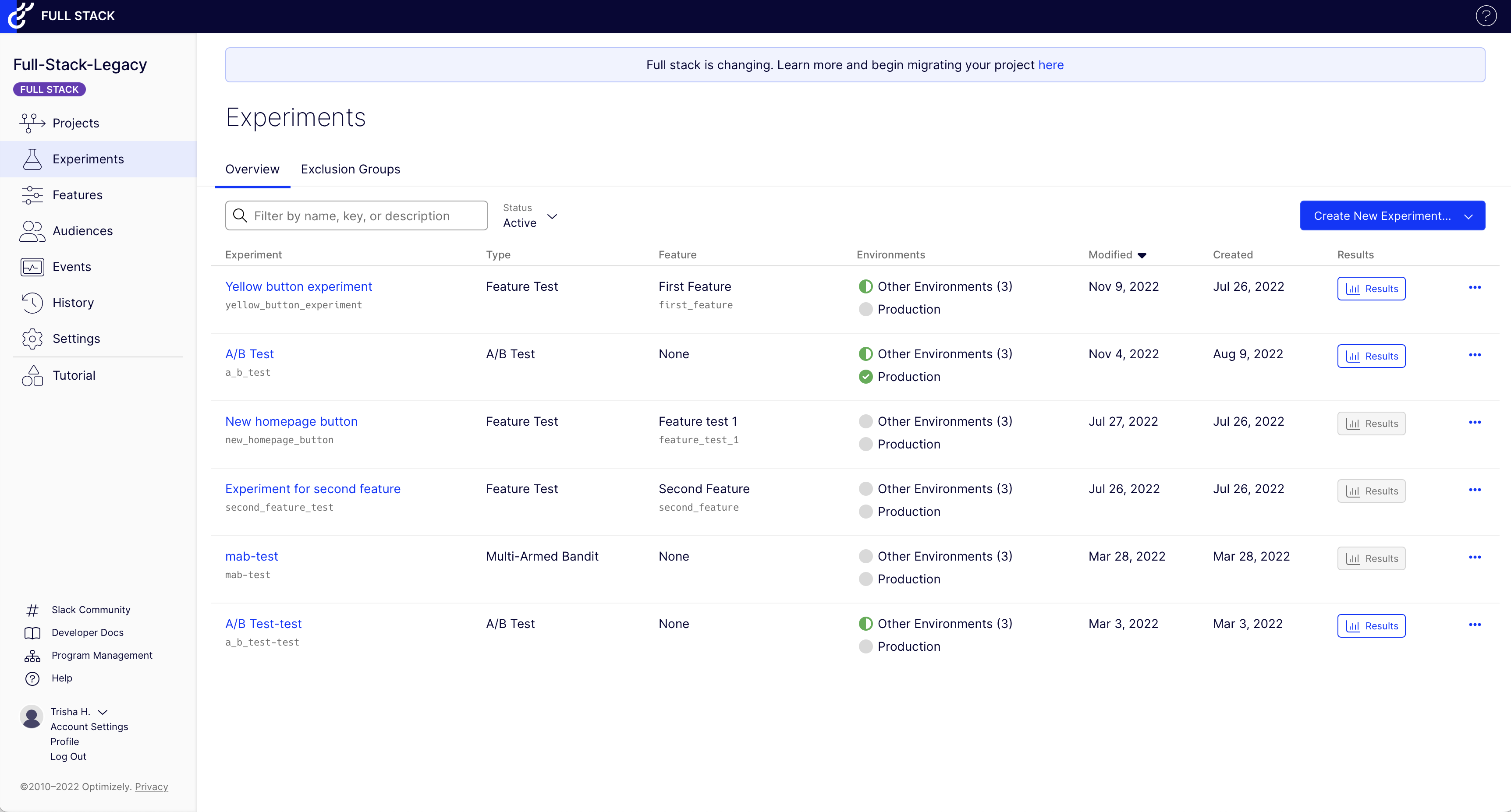

Full Stack Experimentation (legacy): Experiments page and Features page

Feature Experimentation: Custom Flags Dashboard

Flag details

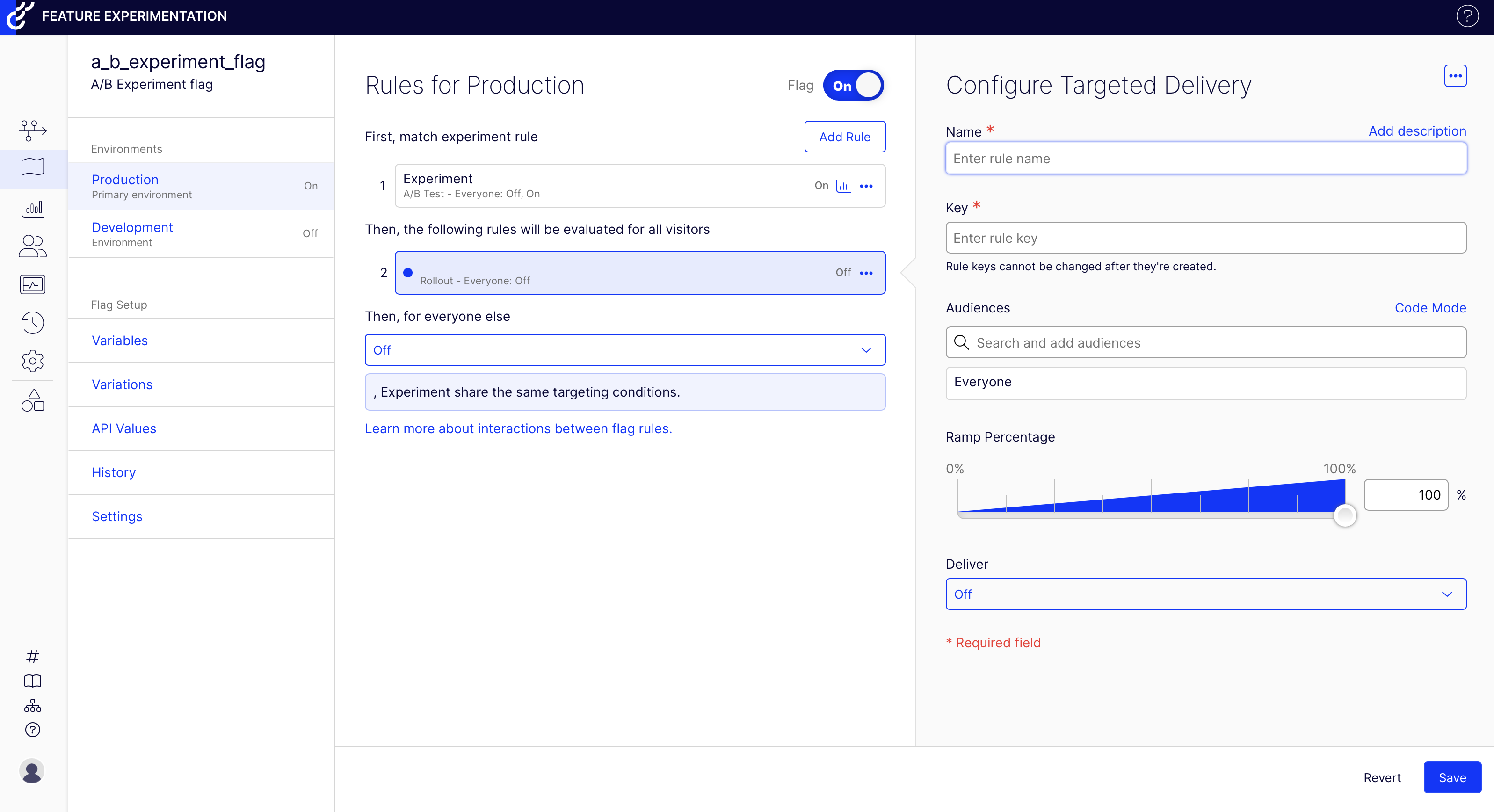

The new Flag Details page is where you manage a flag's ruleset. The ruleset evaluates its rules in order to serve your targeted deliveries and experiments. Apart from every experiment now being tied to a flag, the other components for creating experiments (audiences, metrics, and variations) are the same as in the Full Stack (Legacy) experience.

You can create multiple rules for the same flag, which gives you flexibility in the experiences you deliver to users. Because rules are evaluated sequentially, you can order them to reflect your priorities for the customer experience.

For example, you can send the qualifying traffic for a flag to an experiment and route non-qualifying traffic to a default experience or a targeted delivery. Because every experiment is based on a flag, you can roll out a winning variation in Feature Experimentation without going through your entire software development lifecycle or an extra deployment.
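To make sequential rule evaluation concrete, the following Python sketch walks a flag's ruleset in order and serves the first rule a user qualifies for, falling back to the flag's default when no rule matches. It is a minimal illustration under stated assumptions, not Optimizely's implementation or SDK API: the `Rule` class, `bucket`, `evaluate_flag`, and the `"off"` default are hypothetical names, and SHA-256 stands in for whatever hashing the real bucketing uses.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Attributes = Dict[str, str]


@dataclass
class Rule:
    """Hypothetical model of one rule in a flag's ruleset."""
    key: str
    kind: str  # "experiment" or "targeted_delivery" (informational only here)
    audience: Callable[[Attributes], bool]  # audience condition for this rule
    traffic_percent: int  # share of qualifying users admitted (0-100)
    variations: List[str]  # keys of the flag's shared variations this rule serves


def bucket(user_id: str, salt: str) -> int:
    """Deterministically map a user to a number in 0-99.

    SHA-256 is a stand-in for illustration, not Optimizely's bucketing hash.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def evaluate_flag(rules: List[Rule], user_id: str, attrs: Attributes,
                  default_variation: str = "off") -> Tuple[Optional[str], str]:
    """Walk the ruleset in order; return (rule_key, variation) for the first match."""
    for rule in rules:
        if not rule.audience(attrs):
            continue  # user is not in this rule's audience; try the next rule
        if bucket(user_id, rule.key) >= rule.traffic_percent:
            continue  # qualified, but outside this rule's traffic allocation
        # Choose a variation with a second hash so the choice is stable per user.
        index = bucket(user_id, rule.key + ":variation") % len(rule.variations)
        return rule.key, rule.variations[index]
    return None, default_variation  # no rule matched: serve the flag's default


# Hypothetical ruleset: an experiment for beta users, then a delivery for everyone else.
rules = [
    Rule("beta_experiment", "experiment",
         audience=lambda a: a.get("plan") == "beta",
         traffic_percent=100, variations=["control", "treatment"]),
    Rule("everyone_else", "targeted_delivery",
         audience=lambda a: True,
         traffic_percent=100, variations=["on"]),
]

print(evaluate_flag(rules, "user-1", {"plan": "beta"}))  # beta_experiment with control or treatment
print(evaluate_flag(rules, "user-2", {"plan": "free"}))  # ('everyone_else', 'on')
```

In this sketch a beta user is bucketed into the experiment, while every other user falls through to the targeted delivery, mirroring the qualifying and non-qualifying split described above. Reordering the `rules` list changes which rule takes priority.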

New flag details page

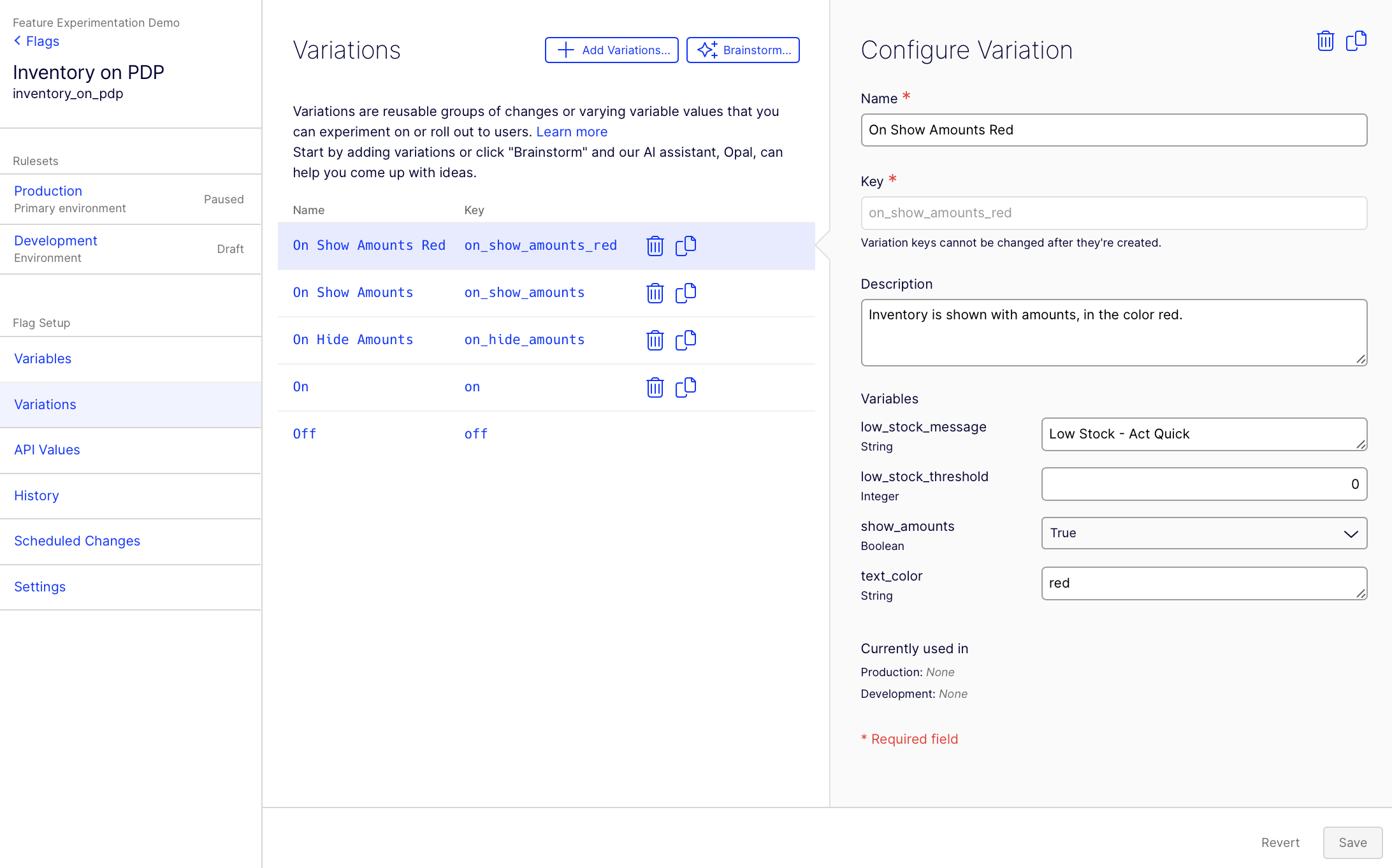

Shared variations

Variations and variables are now shared across all rules in a flag, so you can reuse the same variations in any of the flag's rules.

Feature Experimentation Variations page

Different results per environment

In Full Stack (Legacy), a single experiment exists across all environments and shares one results page. When you run quality assurance (QA) on an experiment, those events get mixed with the live production results. In Feature Experimentation, experiment rules are scoped to an environment, so their results are also scoped to that environment. This removes a common pain point: QA events no longer mix with live production results, and you no longer need to reset results before running the experiment in your primary environment. For more information, refer to Migrate existing experiment results.
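As a rough illustration of environment scoping, the Python sketch below keys results by environment and rule, so QA events recorded in a development environment never appear in the production totals. The `ResultsStore` class, its methods, and the environment and rule names are hypothetical and are not meant to reflect how Optimizely actually stores results.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Tuple

# Hypothetical key: (environment, rule_key). A single legacy-style experiment
# would instead share one set of counts across every environment.
ResultKey = Tuple[str, str]


class ResultsStore:
    """Illustrative store that keeps event counts separate per environment."""

    def __init__(self) -> None:
        # counts[(environment, rule_key)][variation] -> number of events
        self.counts: DefaultDict[ResultKey, Dict[str, int]] = defaultdict(dict)

    def record_event(self, environment: str, rule_key: str, variation: str) -> None:
        per_rule = self.counts[(environment, rule_key)]
        per_rule[variation] = per_rule.get(variation, 0) + 1

    def results(self, environment: str, rule_key: str) -> Dict[str, int]:
        # Return a copy of one environment's results for one rule.
        return dict(self.counts.get((environment, rule_key), {}))


store = ResultsStore()
store.record_event("development", "checkout_test", "treatment")  # QA traffic
store.record_event("production", "checkout_test", "control")
store.record_event("production", "checkout_test", "treatment")

print(store.results("production", "checkout_test"))   # {'control': 1, 'treatment': 1}
print(store.results("development", "checkout_test"))  # {'treatment': 1}
```

Because the environment is part of the key, the QA event stays out of the production results without any reset step.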

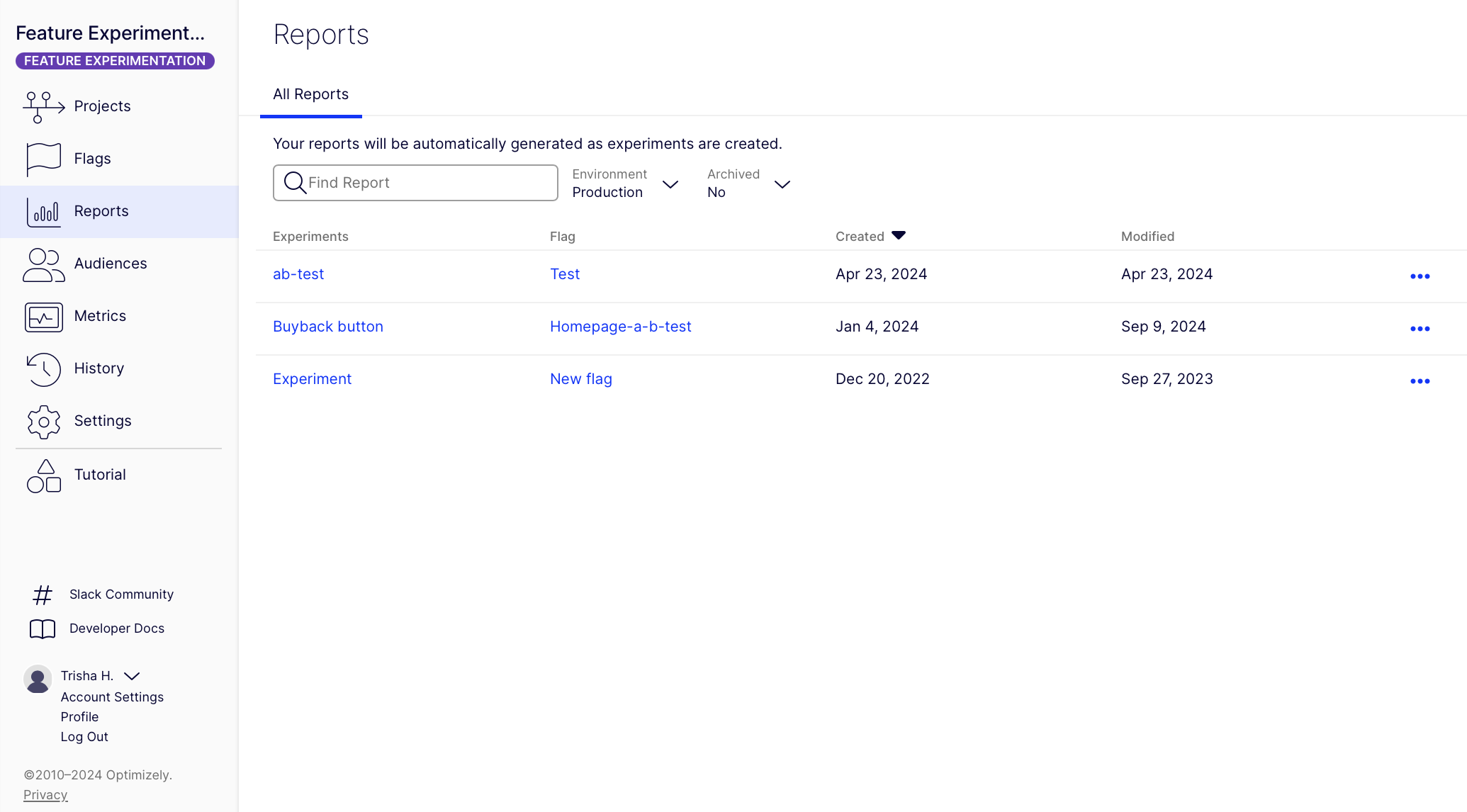

Reports page

In Full Stack Experimentation (Legacy), you can view experiment results only at the experiment level: you must click View Results for each experiment, and there is no centralized view of your results.

Feature Experimentation introduces a new Reports tab, where you can access results for all your experiments from one view. Reports are displayed for one environment at a time, and you can switch environments with the filter options.

Feature Experimentation Reports page
