How bucketing works

Overview of how Optimizely Feature Experimentation assigns users to a flag variation.

Bucketing is the process of assigning users to a flag variation according to the flag rules. The Optimizely Feature Experimentation SDKs evaluate each user's ID and attributes to determine which variation that user should see.

Bucketing is:

- Deterministic – A user sees the same variation across all devices they use every time they see your experiment, thanks to how we hash user IDs. In other words, a returning user is not reassigned to a new variation.

- Sticky unless reconfigured – If you reconfigure a "live," running flag rule, for example by decreasing and then increasing traffic, a user may get rebucketed into a different variation. If you are using Stats Accelerator with Optimizely Feature Experimentation, you can ensure sticky user variation assignments by implementing a user profile service.

How users are bucketed

Optimizely relies on the MurmurHash function during bucketing to hash the user ID and experiment ID to an integer that maps to a bucket range representing a variation. MurmurHash is deterministic, so a user ID always maps to the same variation as long as the experiment conditions do not change.

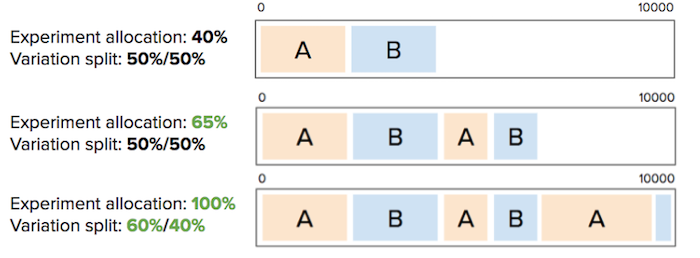
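
The hashing step can be sketched in a few lines of Python. This is an illustrative sketch, not the SDK's actual code: `murmur3_32` is a plain-Python version of the 32-bit MurmurHash3 function, while the seed value, the way the user ID and experiment ID are concatenated, and the helper name `bucket_for` are assumptions made for the example.

```python
MAX_TRAFFIC_VALUE = 10000  # number of buckets the hash is scaled into
HASH_SEED = 1              # assumed seed, chosen for illustration only


def _rotl32(x: int, r: int) -> int:
    """Rotate a 32-bit integer left by r bits."""
    return ((x << r) | (x >> (32 - r))) & 0xFFFFFFFF


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """32-bit MurmurHash3 of data, returned as an unsigned integer."""
    c1, c2 = 0xCC9E2D51, 0x1B873593
    h = seed & 0xFFFFFFFF
    full = len(data) & ~3  # length rounded down to a multiple of 4

    def mix(k: int) -> int:
        k = (k * c1) & 0xFFFFFFFF
        k = _rotl32(k, 15)
        return (k * c2) & 0xFFFFFFFF

    # Body: process the input four bytes at a time (little-endian).
    for i in range(0, full, 4):
        h ^= mix(int.from_bytes(data[i:i + 4], "little"))
        h = _rotl32(h, 13)
        h = (h * 5 + 0xE6546B64) & 0xFFFFFFFF

    # Tail: the remaining one to three bytes, if any.
    if len(data) > full:
        h ^= mix(int.from_bytes(data[full:], "little"))

    # Finalization: force all bits of the hash to avalanche.
    h ^= len(data)
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & 0xFFFFFFFF
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & 0xFFFFFFFF
    h ^= h >> 16
    return h


def bucket_for(user_id: str, experiment_id: str) -> int:
    """Map a user and experiment to a bucket from 0 to 9999 (assumed key format)."""
    key = (user_id + experiment_id).encode("utf-8")
    return murmur3_32(key, HASH_SEED) * MAX_TRAFFIC_VALUE // 2**32


# The same inputs always produce the same bucket.
print(bucket_for("user-123", "exp-456") == bucket_for("user-123", "exp-456"))  # True
```

Because the bucket depends only on the two IDs, any SDK in any language that applies the same function arrives at the same number without contacting a server.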

For example, imagine you run an experiment with two variations (A and B), with an experiment traffic allocation of 40% and a 50/50 distribution between the two variations. Optimizely assigns each user a number between 0 and 10000 to determine if they qualify for the experiment and which variation they see. If they are in buckets 0 to 1999, they see variation A; if they are in buckets 2000 to 3999, they see variation B. If they are in buckets 4000 to 10000, they do not participate in the experiment at all. These bucket ranges are deterministic: if a user falls in bucket 1083, they are always in bucket 1083.
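
The arithmetic in this example can be checked with a short sketch. The helper names `build_ranges` and `variation_for_bucket` are hypothetical, and each range is stored as an exclusive upper bound, so variation A covers buckets 0 to 1999 and variation B covers buckets 2000 to 3999.

```python
from typing import List, Optional, Tuple

TOTAL_BUCKETS = 10000


def build_ranges(traffic_pct: int, weights: List[Tuple[str, int]]) -> List[Tuple[int, str]]:
    """Return (exclusive_end, variation) pairs for the allocated share of buckets."""
    ranges = []
    end = 0
    for name, weight_pct in weights:
        # Share of all buckets = experiment traffic % x variation weight %.
        end += TOTAL_BUCKETS * traffic_pct * weight_pct // 10000
        ranges.append((end, name))
    return ranges


def variation_for_bucket(bucket: int, ranges: List[Tuple[int, str]]) -> Optional[str]:
    """Return the variation whose range contains bucket, or None if outside the experiment."""
    for end, name in ranges:
        if bucket < end:
            return name
    return None


ranges = build_ranges(40, [("A", 50), ("B", 50)])
print(ranges)                              # [(2000, 'A'), (4000, 'B')]
print(variation_for_bucket(1083, ranges))  # A
print(variation_for_bucket(2500, ranges))  # B
print(variation_for_bucket(7000, ranges))  # None
```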

This operation is highly efficient because it occurs in memory, and there is no need to block a request to an external service. It also permits bucketing across channels and multiple languages and experimenting without strong network connectivity.

Changing traffic can rebucket users

Increasing "live" traffic for an experiment is the most common way to change it. In this scenario, you can increase overall traffic without rebucketing users.

However, if you decrease then increase traffic, your users are rebucketed. You should avoid decreasing then increasing traffic in a running experiment because it can result in statistically invalid metrics. If you need to change "live" experiment traffic, do so at the beginning of the experiment.

Example

Here is a detailed example of Optimizely's attempt to preserve bucketing if you reconfigure a running experiment.

Imagine you are running an experiment with two variations (A and B), with a total experiment traffic allocation of 40% and a 50/50 distribution between the two variations. If you change the experiment allocation to any percentage except 0%, Optimizely ensures that all variation bucket ranges are preserved whenever possible so that users are not rebucketed into other variations. If you add variations and increase the overall traffic, Optimizely tries to put the new users into the new variation without rebucketing existing users.

If you change the experiment traffic allocation from 40% to 0%, Optimizely does not preserve your variation bucket ranges. If you then change the allocation from 0% to 50%, Optimizely starts the assignment process for each user from scratch and does not preserve the variation bucket ranges from the 40% setting.
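
The difference between the two scenarios can be illustrated with a simplified model. This sketch is not Optimizely's actual algorithm: it assumes that an increase appends new bucket ranges after the existing ones, and that a reset to 0% discards the old ranges so the next allocation is built from an even split. The function names are hypothetical.

```python
from typing import List, Optional, Tuple

# Each entry is (exclusive end of bucket range, variation name).
Allocation = List[Tuple[int, str]]

BUCKETS_PER_PERCENT = 100  # 10000 buckets / 100 percent


def lookup(allocation: Allocation, bucket: int) -> Optional[str]:
    """Return the variation for a bucket, or None if it is outside all ranges."""
    for end, variation in allocation:
        if bucket < end:
            return variation
    return None


def fresh_allocation(total_pct: int, variations: List[str]) -> Allocation:
    """Build ranges from scratch with an even split (assumed reset behavior)."""
    share = total_pct * BUCKETS_PER_PERCENT // len(variations)
    return [(share * (i + 1), name) for i, name in enumerate(variations)]


def extend_allocation(allocation: Allocation, new_total_pct: int,
                      variations: List[str]) -> Allocation:
    """Increase traffic by appending ranges, leaving existing ranges untouched."""
    current_end = allocation[-1][0] if allocation else 0
    share = (new_total_pct * BUCKETS_PER_PERCENT - current_end) // len(variations)
    extra = [(current_end + share * (i + 1), name) for i, name in enumerate(variations)]
    return allocation + extra


at_40 = fresh_allocation(40, ["A", "B"])          # [(2000, 'A'), (4000, 'B')]
at_60 = extend_allocation(at_40, 60, ["A", "B"])  # adds (5000, 'A') and (6000, 'B')
after_reset = fresh_allocation(50, ["A", "B"])    # [(2500, 'A'), (5000, 'B')]

# A user in bucket 2300 keeps variation B when traffic only increases,
# but lands in variation A after the allocation is rebuilt from scratch.
print(lookup(at_40, 2300), lookup(at_60, 2300), lookup(after_reset, 2300))  # B B A
```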

End-to-end bucketing workflow

The following table highlights how various user-bucketing methods interact with each other:

| User bucketing method | Evaluates after these: | Evaluates before these: |
|---|---|---|
| Forced variation | | |
| User allowlisting | | |
| User profile service | | |
| Exclusion groups | | |
| Traffic allocation | | |

Important: If there is a conflict over how a user should be bucketed, then the first user-bucketing method to be evaluated overrides any conflicting method.

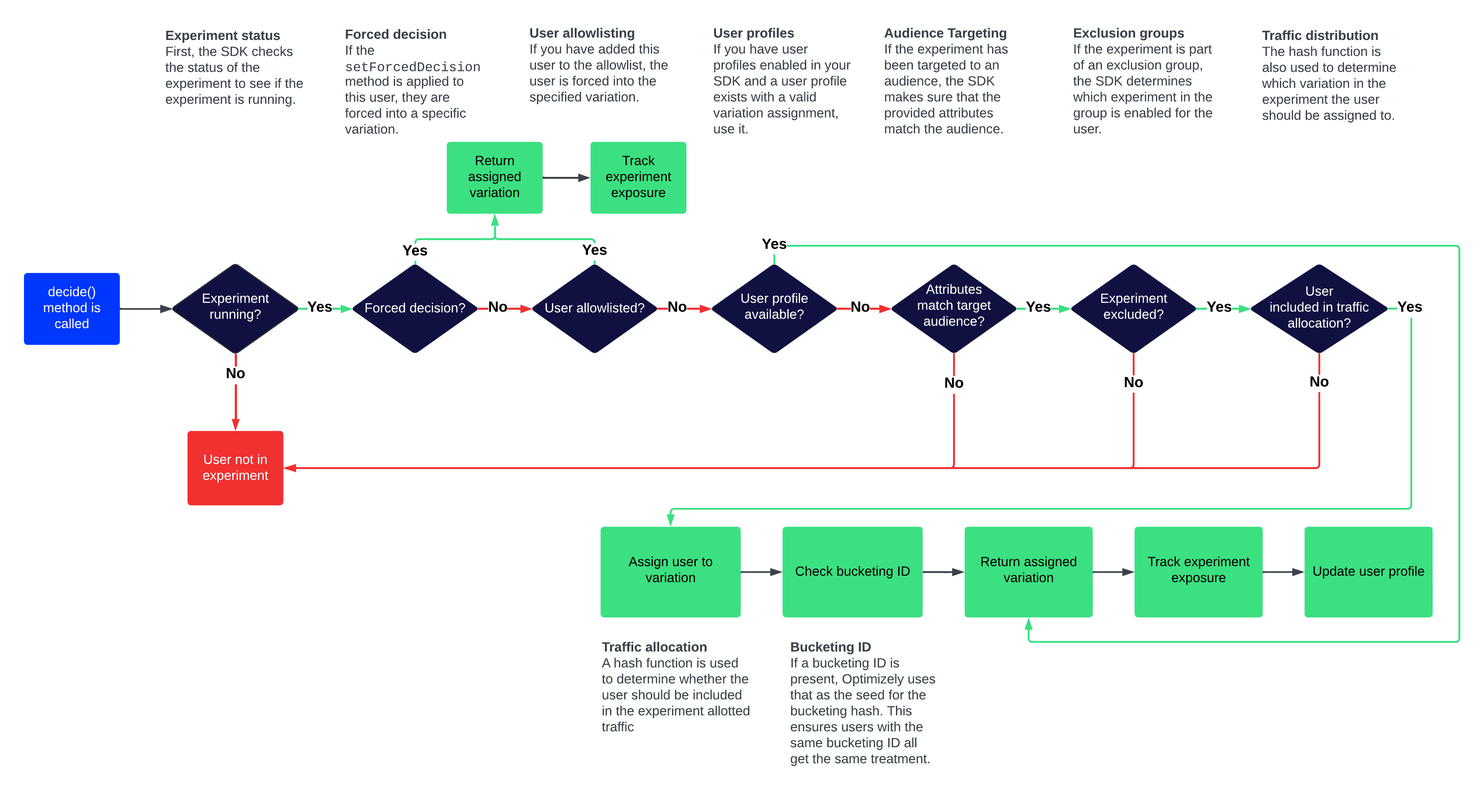

Here is how the SDK evaluates a decision. This chart serves as a comprehensive example that explores all possible factors, including QA tools like specific users and forced decisions.

- The `Decide()` call is executed and the SDK begins its bucketing process.

- The SDK ensures that the flag rule is running.

- The SDK checks to see if a forced decision method is used. If one is used, the user is forced into a variation.

- If the user is in an experiment rule, the SDK compares the user ID to the Allowlist. The specific users in the list are forced into a variation.

- If provided, the SDK checks the User Profile Service implementation to determine whether a profile exists for this user ID. If it does, the variation is immediately returned and the evaluation process ends. Otherwise, proceed.

- The SDK examines audience conditions based on the user attributes provided. If the user meets the criteria for inclusion in the target audience, the SDK will continue with the evaluation; otherwise, the user will no longer be considered eligible for the experiment.

- The SDK examines any exclusion groups.

- The hashing function returns an integer value that maps to a bucket range. The ranges are based on the traffic allocation breakdowns set in the Optimizely dashboard, and each corresponds with a specific variation assignment. If the user's integer value is included in the bucket range, they are added to the experiment and they are assigned a variation.

- If you use a bucketing ID, the SDK will hash the bucketing ID (instead of the user ID) with the experiment ID and return a variation.
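
The evaluation order above can be sketched as a single function. This is a simplified illustration, not the SDK's API: `ExperimentRule`, `decide`, and every field and parameter name here are hypothetical stand-ins, and the exclusion group check and the hashing step are reduced to callables supplied by the caller.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class ExperimentRule:
    """Hypothetical, simplified stand-in for an experiment rule."""
    running: bool
    audience: Callable[[dict], bool]        # True if the attributes match the audience
    assign: Callable[[str], Optional[str]]  # hashes an ID to a variation, or None
    allowlist: Dict[str, str] = field(default_factory=dict)  # user ID -> variation
    exclusion_group_allows: Callable[[str], bool] = lambda _id: True


def decide(user_id: str, attributes: dict, rule: ExperimentRule,
           forced_decision: Optional[str] = None,
           user_profiles: Optional[Dict[str, str]] = None,
           bucketing_id: Optional[str] = None) -> Optional[str]:
    """Return a variation name, or None if the user is not in the experiment."""
    if not rule.running:                       # the rule must be running
        return None
    if forced_decision is not None:            # forced decision wins first
        return forced_decision
    if user_id in rule.allowlist:              # then the allowlist
        return rule.allowlist[user_id]
    if user_profiles and user_id in user_profiles:  # then a stored profile
        return user_profiles[user_id]
    if not rule.audience(attributes):          # then audience conditions
        return None
    key = bucketing_id if bucketing_id is not None else user_id
    if not rule.exclusion_group_allows(key):   # then exclusion groups
        return None
    return rule.assign(key)                    # finally, hash-based bucketing


rule = ExperimentRule(
    running=True,
    audience=lambda attrs: attrs.get("plan") == "pro",
    assign=lambda key: "A",  # placeholder for the hash-to-range lookup
    allowlist={"qa-user": "B"},
)
print(decide("qa-user", {}, rule))                # B (allowlist is checked before audience)
print(decide("user-1", {"plan": "pro"}, rule))    # A
print(decide("user-1", {"plan": "free"}, rule))   # None
```

Each early `return` mirrors the rule that the first method evaluated overrides any later, conflicting one.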
