Run A/B tests

How to set up a simple A/B or ON/OFF test in Optimizely Feature Experimentation.

If you are new to experimentation, you can get a lot done with a simple ON or OFF A/B test. This configuration has one flag with two variations:

- One "flag_on" variation.

- One "flag_off" variation.

Restrictions

If you plan to have multiple experiments and flag deliveries (targeted deliveries) in your flag, your experiment must always be the first rule in the flag's ruleset.
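As a rough illustration of this restriction, the sketch below checks that an A/B test rule comes first in a flag's ruleset. The `Rule` class, its `kind` values, and `experiment_is_first` are hypothetical names for this example, not part of any Optimizely SDK or API.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    """Hypothetical, simplified model of one rule in a flag's ruleset."""
    key: str
    kind: str  # assumed values: "ab_test" or "targeted_delivery"


def experiment_is_first(ruleset: list[Rule]) -> bool:
    """Return True if the ruleset has no A/B test, or if its first rule is an A/B test."""
    kinds = [rule.kind for rule in ruleset]
    if "ab_test" not in kinds:
        return True
    return kinds[0] == "ab_test"


ok = [Rule("checkout_test", "ab_test"), Rule("beta_users", "targeted_delivery")]
bad = [Rule("beta_users", "targeted_delivery"), Rule("checkout_test", "ab_test")]

print(experiment_is_first(ok))   # True
print(experiment_is_first(bad))  # False
```

A check like this could run in a review script before a ruleset is started, assuming you export your rules into a similar structure.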

Setup overview

To configure a basic A/B test:

- (Prerequisite) Create a flag.

- (Prerequisite) Handle user IDs.

- Create and configure an A/B Test rule in the Optimizely app.

- Integrate the example decide code that the Optimizely Feature Experimentation app generates with your application.

- Test your experiment in a non-production environment. See QA and troubleshoot.

- Discard any QA user events and enable your experiment in a production environment.

Create an experiment

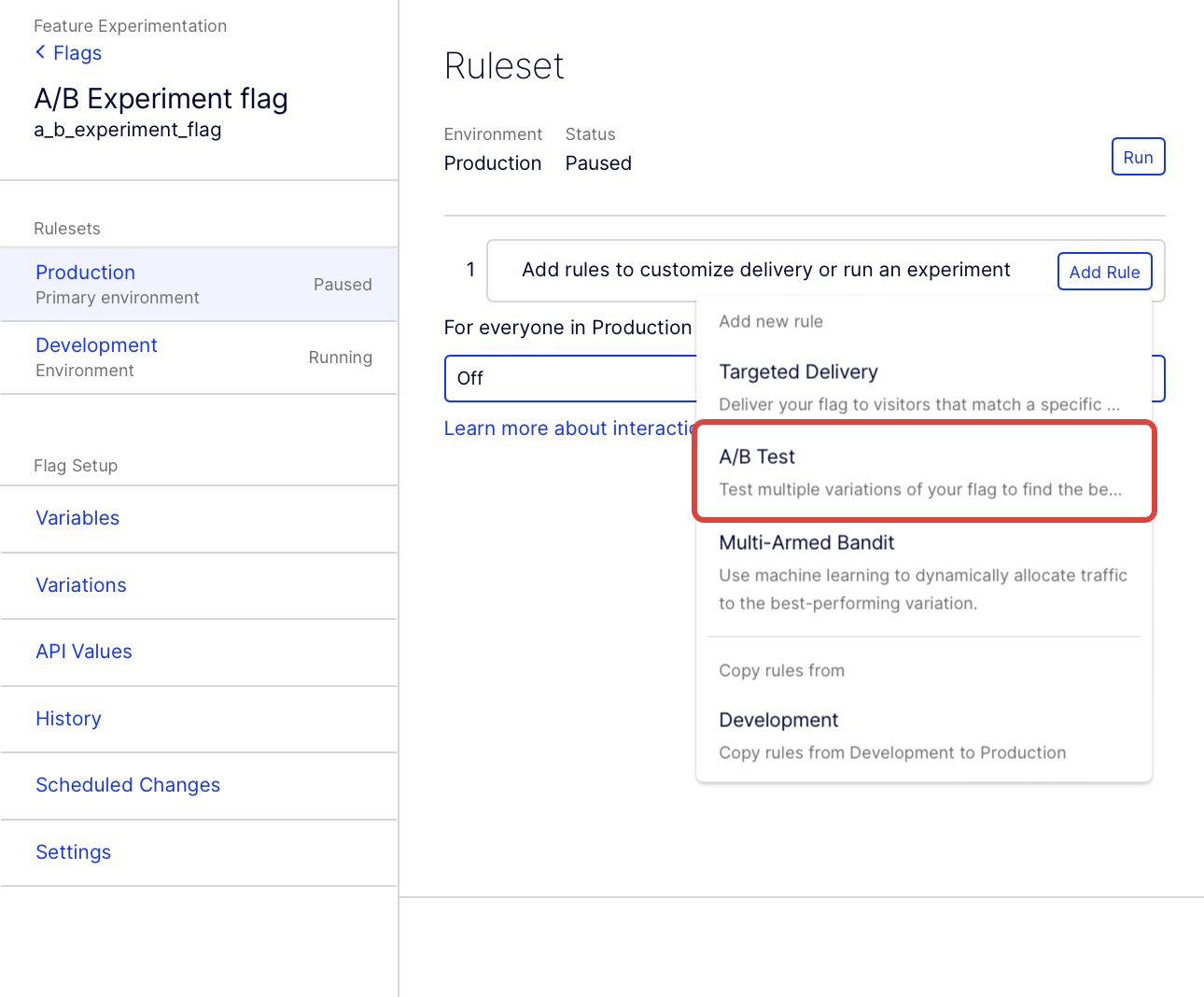

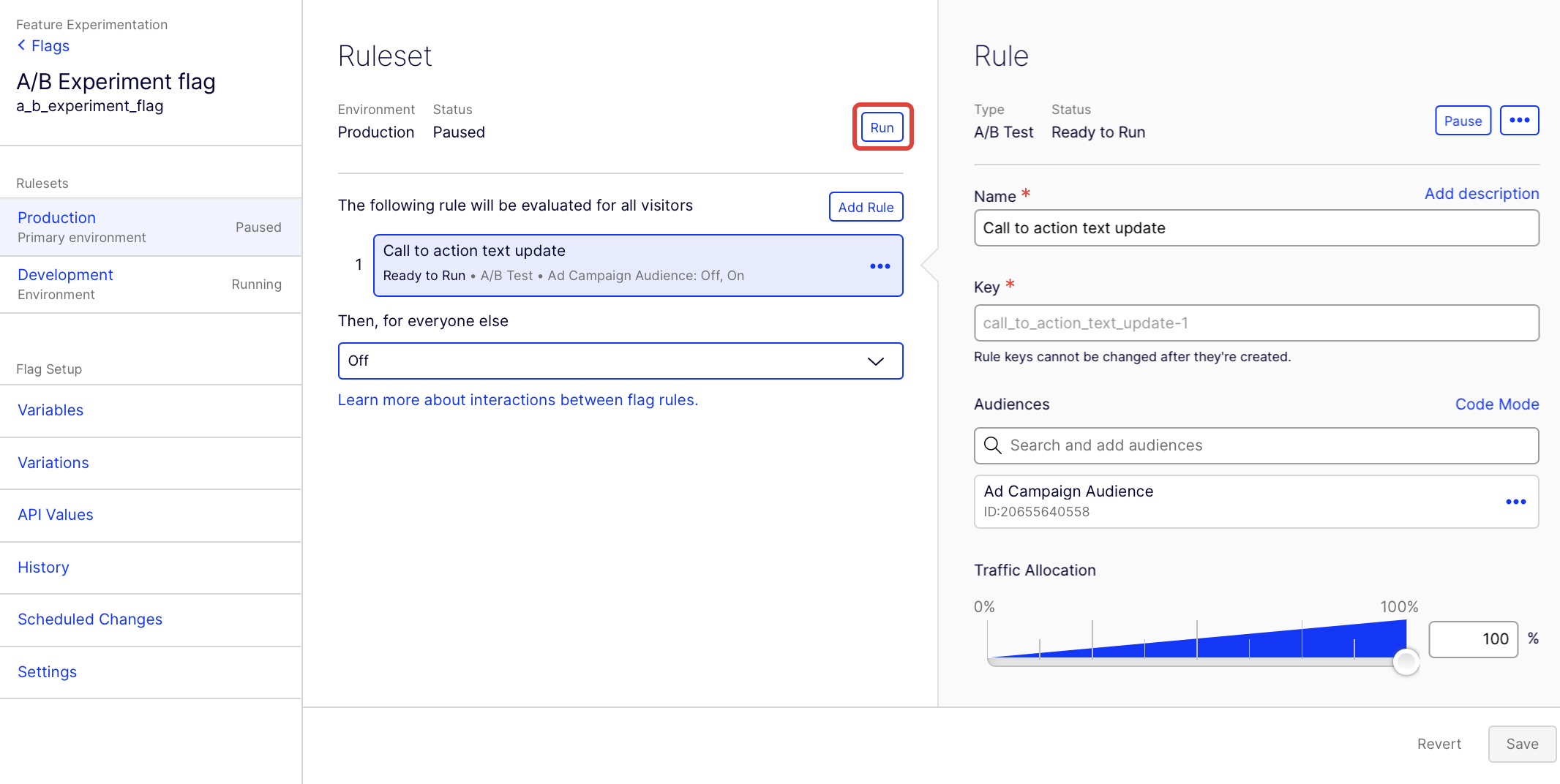

Create A/B test rule

- Select a flag from the Flags list.

- Select the environment you want to target.

- Click Add Rule.

- Select A/B Test.

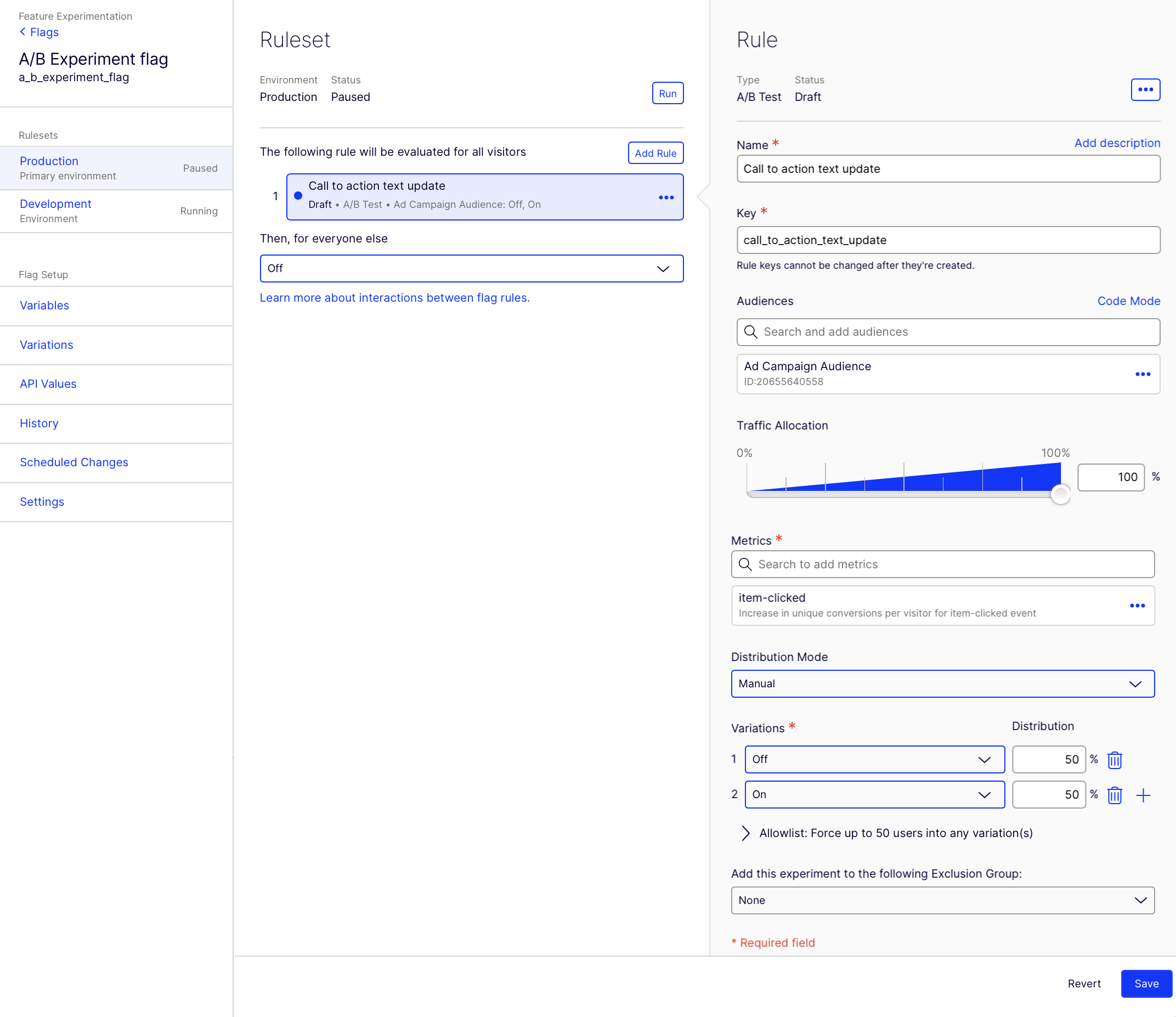

Configure your A/B test rule

- Enter a Name.

- The Key is automatically created based on the Name. You can optionally update it.

- (Optional) Click Add description to add a description. You should add your hypothesis for your A/B test rule as the description.

- (Optional) Search for and add audiences. To create an audience, see Target audiences. Audiences evaluate in the order in which you drag and drop them. You can choose whether to match each user on any or all of the audience conditions.

- Set the Traffic Allocation to assign a percentage of your audience to bucket into the experiment.

Note

If you plan to change the Traffic Allocation after the experiment starts running, or to select Stats Accelerator for the Distribution Mode, implement a user profile service before starting the experiment.

For information, see Ensure consistent user bucketing.

- Add Metrics based on tracked user events. See Create events to create and track events. For information about selecting metrics, see Choose metrics. For instructions on setting up metrics, see Create a metric in Optimizely using the metric builder.

- Choose how your audience is distributed using Distribution Mode. Use the drop-down list to select:

  - Manual – By default, variations are given equal traffic distribution. Customize this value for your experiment's requirements.

  - Stats Accelerator – Automatically adjusts traffic distribution to minimize time to statistical significance. For information, see Stats accelerator.

- Choose the flag variations to compare in the experiment. For a basic experiment, you can include one variation in which your flag is on and one in which your flag is off. For a more advanced A/B/n experiment, create variations with multiple flag variables. No matter how many variations you make, leave one variation with the feature flag off as a control. For information about creating variations, see Create flag variations.

- (Optional) Click Allowlist: Force up to 50 users into any variation(s) and enter the User ID. See Allowlisting.

- (Optional) Add the experiment to an Exclusion Group.

- Click Save.
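To illustrate why the note above recommends a user profile service, the following sketch models traffic allocation with deterministic hashing. It is a simplified stand-in, not the SDK's actual bucketing algorithm: the SHA-256 hash, the 10,000-bucket range, the even split of the allocated range, and the names `bucket_of`, `assign`, and `sticky_assign` are all assumptions made for this example.

```python
import hashlib

BUCKETS = 10_000  # assumed resolution: each bucket represents 0.01% of traffic


def bucket_of(user_id: str, rule_key: str) -> int:
    """Map a user deterministically to a bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{rule_key}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % BUCKETS


def assign(user_id, rule_key, traffic_pct, variations):
    """Return a variation key, or None if the user is outside the allocated traffic."""
    bucket = bucket_of(user_id, rule_key)
    allocated = int(BUCKETS * traffic_pct / 100)
    if bucket >= allocated:
        return None
    # Equal distribution: split the allocated bucket range evenly across variations.
    return variations[bucket * len(variations) // allocated]


def sticky_assign(profiles, user_id, rule_key, traffic_pct, variations):
    """Reuse a stored assignment if one exists; otherwise bucket the user and store it."""
    key = (user_id, rule_key)
    stored = profiles.get(key)
    if stored is not None:
        return stored
    variation = assign(user_id, rule_key, traffic_pct, variations)
    if variation is not None:
        profiles[key] = variation
    return variation


variations = ["flag_on", "flag_off"]
users = [f"user{i}" for i in range(1000)]
profiles = {}  # in-memory stand-in for a user profile store

# Bucket everyone at 50% traffic, then raise the allocation to 100%.
before = {u: sticky_assign(profiles, u, "my_ab_test", 50, variations) for u in users}
moved_plain = sum(
    1 for u in users
    if before[u] is not None and assign(u, "my_ab_test", 100, variations) != before[u]
)
moved_sticky = sum(
    1 for u in users
    if before[u] is not None
    and sticky_assign(profiles, u, "my_ab_test", 100, variations) != before[u]
)
print("changed variation without stored profiles:", moved_plain)
print("changed variation with stored profiles:", moved_sticky)
```

In this model, users who were already bucketed can switch variations when the allocation changes, while the stored assignments keep them where they were. How much rebucketing occurs in practice depends on the real algorithm; see Ensure consistent user bucketing.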

Implement the experiment using the decide method

Flag is implemented in your code

If you have already implemented the flag using a Decide method, you do not need to take further action. Optimizely Feature Experimentation SDKs are designed so that you can reuse the exact flag implementation for different flag rules.

Flag is not implemented in your code

If the flag is not implemented yet, copy the sample flag integration code into your application code and edit it so that your feature code runs or does not run based on the output of the decision received from Optimizely.

Use the Decide method to enable or disable the flag for a user:

Go:

    // Decide if user sees a feature flag variation
    user := optimizely.CreateUserContext("user123", map[string]interface{}{"logged_in": true})
    decision := user.Decide("flag_1", nil)
    enabled := decision.Enabled

C#:

    // Decide if user sees a feature flag variation
    var user = optimizely.CreateUserContext("user123", new UserAttributes { { "logged_in", true } });
    var decision = user.Decide("flag_1");
    var enabled = decision.Enabled;

Flutter:

    // Decide if user sees a feature flag variation
    var user = await flutterSDK.createUserContext(userId: "user123");
    var decideResponse = await user!.decide("product_sort");
    // Read the flag state from the decision carried by the response
    var enabled = decideResponse.decision?.enabled;

Java:

    // Decide if user sees a feature flag variation
    OptimizelyUserContext user = optimizely.createUserContext("user123", new HashMap<String, Object>() { { put("logged_in", true); } });
    OptimizelyDecision decision = user.decide("flag_1");
    Boolean enabled = decision.getEnabled();

JavaScript:

    // Decide if user sees a feature flag variation
    const user = optimizely.createUserContext('user123', { logged_in: true });
    const decision = user.decide('flag_1');
    const enabled = decision.enabled;

PHP:

    // Decide if user sees a feature flag variation
    $user = $optimizely->createUserContext('user123', ['logged_in' => true]);
    $decision = $user->decide('flag_1');
    $enabled = $decision->getEnabled();

Python:

    # Decide if user sees a feature flag variation
    user = optimizely.create_user_context("user123", {"logged_in": True})
    decision = user.decide("flag_1")
    enabled = decision.enabled

React:

    // Decide if user sees a feature flag variation
    // useDecision returns an array whose first element is the decision
    const [decision] = useDecision('flag_1', {}, { overrideUserAttributes: { logged_in: true } });
    const enabled = decision.enabled;

Ruby:

    # Decide if user sees a feature flag variation
    user = optimizely_client.create_user_context('user123', {'logged_in' => true})
    decision = user.decide('flag_1')
    decision.enabled

Swift:

    // Decide if user sees a feature flag variation
    let user = optimizely.createUserContext(userId: "user123", attributes: ["logged_in":true])
    let decision = user.decide(key: "flag_1")
    let enabled = decision.enabled

For more detailed examples of each SDK, see:

- Android SDK example usage

- Go SDK example usage

- C# SDK example usage

- Flutter SDK example usage

- Java SDK example usage

- JavaScript (Browser) SDK example usage

- JavaScript (Node) SDK example usage

- PHP SDK example usage

- Python SDK example usage

- React SDK example usage

- React Native SDK example usage

- Ruby SDK example usage

- Swift SDK example usage

Adapt the integration code in your application. Show or hide the flag's functionality for a given user ID based on the boolean value your application receives.
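A minimal sketch of this pattern in Python follows. To keep the example runnable without an SDK key, `StubUserContext` and `StubDecision` are hypothetical stand-ins that mimic only the `decide` call and the `enabled` attribute used in the Python snippet above. `render_checkout` and its return strings are likewise invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class StubDecision:
    """Hypothetical stand-in exposing only the `enabled` attribute."""
    enabled: bool


class StubUserContext:
    """Hypothetical stand-in for a user context that returns canned decisions."""

    def __init__(self, enabled_flags):
        self._enabled_flags = set(enabled_flags)

    def decide(self, flag_key: str) -> StubDecision:
        return StubDecision(enabled=flag_key in self._enabled_flags)


def render_checkout(user) -> str:
    """Run the new feature code only when the flag decision is enabled."""
    decision = user.decide("flag_1")
    if decision.enabled:
        return "new checkout"    # feature code path
    return "existing checkout"   # control or flag-off path


print(render_checkout(StubUserContext({"flag_1"})))  # new checkout
print(render_checkout(StubUserContext(set())))       # existing checkout
```

Keeping the branch in one small function like this makes it straightforward to remove the flag check later, once the experiment concludes.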

The goal of the Decide method is to separate the process of developing and releasing code from the decision to turn a flag on. The value this method returns is determined by your flag rules. For example, the method returns false if the current user is assigned to a control or "off" variation in an experiment.

Remember, a user is evaluated against each flag rule in the ordered ruleset before being bucketed, or not, into a rule variation. See Interactions between flag rules for information.
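The ordered evaluation described above can be pictured with a simplified model. This is an illustrative sketch, not the SDK's actual decision logic: the `Rule` fields, the `matches` and `in_traffic` callables, the single variation per rule, and the fall-through behavior are all assumptions for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Rule:
    """Hypothetical rule: an audience check, a traffic check, and one variation."""
    key: str
    matches: Callable[[dict], bool]    # audience condition on user attributes
    in_traffic: Callable[[str], bool]  # whether this user ID is in the allocated traffic
    variation: str


def evaluate(ruleset: list[Rule], user_id: str, attributes: dict) -> Optional[tuple[str, str]]:
    """Walk the rules in order and return (rule key, variation) for the first rule that buckets the user."""
    for rule in ruleset:
        if rule.matches(attributes) and rule.in_traffic(user_id):
            return rule.key, rule.variation
    return None  # no rule bucketed the user


ruleset = [
    Rule("ab_test", lambda a: a.get("logged_in", False), lambda uid: True, "flag_on"),
    Rule("delivery", lambda a: True, lambda uid: True, "flag_off"),
]

print(evaluate(ruleset, "user123", {"logged_in": True}))   # ('ab_test', 'flag_on')
print(evaluate(ruleset, "user456", {"logged_in": False}))  # ('delivery', 'flag_off')
```

In this model, a user who does not qualify for the first rule falls through to the next one, which is why rule order matters.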

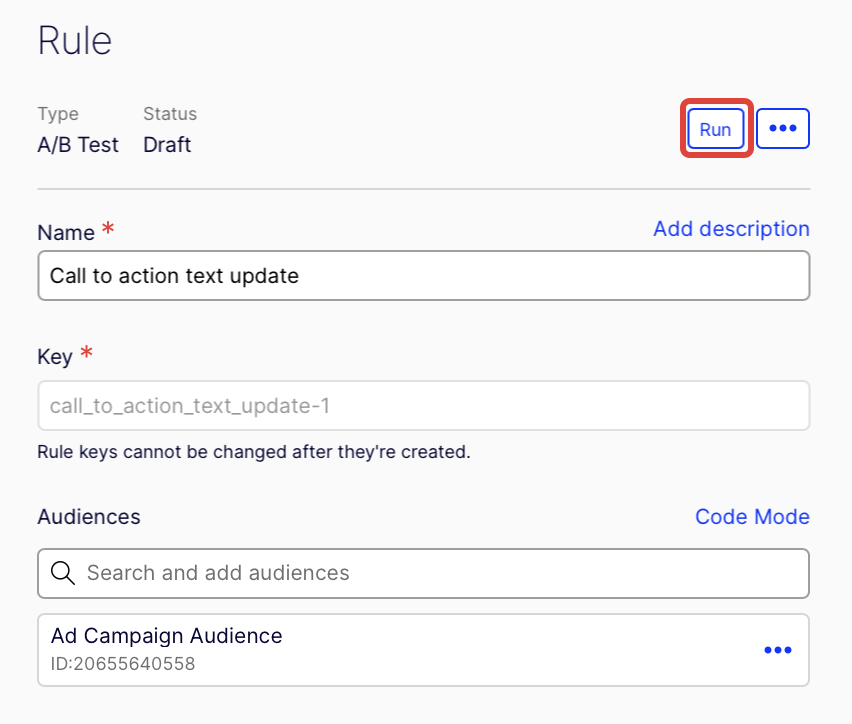

Start your rule and flag (ruleset)

Note

For information about the differences between the old and new flag management UI in Optimizely Feature Experimentation, see New flag and rule lifecycle management FAQs.

After creating your A/B test and implementing the experiment using the decide method, you can start your test.

-

Set your rule as Ready to run. Click Run on your A/B test rule.

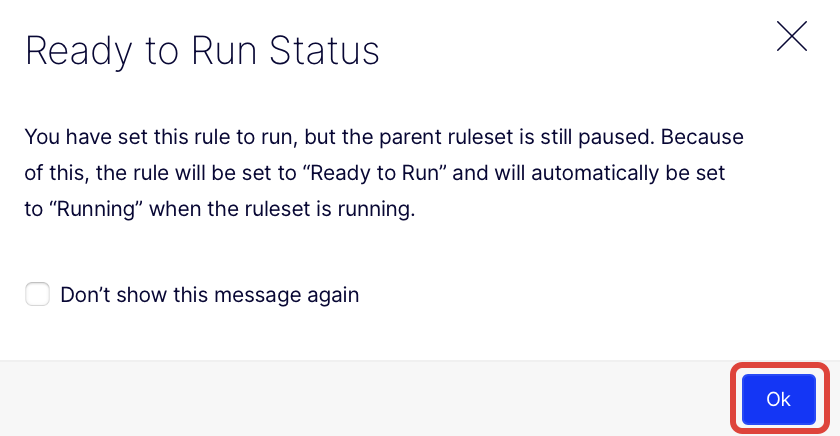

-

Click Ok on the Ready to Run Status page. This alerts you that your rule is set to Ready to run until you start your ruleset (flag).

-

Click Run to start your flag and rule.

Congrats! Your A/B test is running.

Test with flag variables

After you have run a basic "on/off" A/B test, you can increase the power of your experiments by adding remote feature configurations or flag variables.

Flag variations enable you to avoid hard-coding variables in your application. Instead of updating the variables by deploying, you can edit them remotely in the Optimizely Feature Experimentation app. For information about flag variations, see flag variations.
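As a rough sketch of the idea, the code below reads configuration values from a set of variables instead of hard-coding them, falling back to local defaults when a variable is missing. The `variation_variables` dictionary stands in for the variables attached to a decision. The variable names `sort_method` and `page_size` and the helper `read_config` are hypothetical.

```python
# Local defaults used when a variable is not supplied remotely (assumed names).
DEFAULTS = {"sort_method": "alphabetical", "page_size": 20}


def read_config(variables: dict) -> dict:
    """Overlay remotely configured variables on local defaults.

    Keys that are not listed in DEFAULTS are ignored.
    """
    return {name: variables.get(name, default) for name, default in DEFAULTS.items()}


# Values that a flag variation might supply (assumed for illustration).
variation_variables = {"sort_method": "popular_first"}

config = read_config(variation_variables)
print(config)  # {'sort_method': 'popular_first', 'page_size': 20}
```

With this shape, changing `sort_method` for a variation becomes an edit in the Optimizely app, not a code deployment.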

To set up an A/B test with multiple variations:

- Create and configure a basic A/B test. See previous steps.

- Create flag variations containing multiple variables.

- Integrate the example code with your application.
