Run Contextual Multi-Armed Bandit optimizations

How to run Contextual Multi-Armed Bandit optimizations in Optimizely Feature Experimentation.

Beta: This feature is in beta. Apply on the Optimizely beta signup page or contact your Customer Success Manager.

Contextual Multi-Armed Bandits (CMAB) are a machine learning strategy that personalizes user experiences by dynamically selecting the best-performing variation based on user-specific attributes. Traditional multi-armed bandits (MABs) aim to identify a single optimal variation for all users. CMAB, powered by Opal (Optimizely’s embedded AI), instead tailors decisions to each user's context, such as device type, location, or behavioral history, to maximize performance on a defined metric.

The problems that CMAB addresses include the following:

- Personalized experiments are resource-intensive – They can deliver higher uplift but are slow to run, especially for small segments.

- Traditional A/B testing is time-consuming – Teams often abandon tests due to the effort required.

- Manual personalization does not scale – Organizations need automated, scalable personalization to reduce setup and maintenance.

CMAB uses reinforcement learning to optimize which variation Feature Experimentation shows to each user, based on their attributes and past responses. The model balances exploration (testing new variations) and exploitation (using the best-known variation) to maximize uplift. Over time, the system can achieve one-to-one personalization as it learns from more data.
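The exploration and exploitation trade-off can be illustrated with a small epsilon-greedy contextual bandit. This is a minimal sketch for intuition only, not Optimizely's model: the class name, the `exploration_rate` parameter, and the use of a single hashable context value (such as a device type) are all assumptions made for the example.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    """Toy contextual bandit: one running mean reward per (context, variation)."""

    def __init__(self, variations, exploration_rate=0.1, seed=None):
        self.variations = list(variations)
        self.exploration_rate = exploration_rate
        self.rng = random.Random(seed)
        # (context, variation) -> [number of observations, mean reward]
        self.stats = defaultdict(lambda: [0, 0.0])

    def choose(self, context):
        """Pick a variation for a hashable context, such as a device type."""
        if self.rng.random() < self.exploration_rate:
            # Explore: show a random variation to keep learning.
            return self.rng.choice(self.variations)
        # Exploit: show the variation with the best observed mean for this context.
        return max(self.variations, key=lambda v: self.stats[(context, v)][1])

    def update(self, context, variation, reward):
        """Fold an observed reward (for example, 1.0 for a conversion) into the mean."""
        entry = self.stats[(context, variation)]
        entry[0] += 1
        entry[1] += (reward - entry[1]) / entry[0]


# Usage: with exploration disabled, choices follow what has been learned per context.
bandit = EpsilonGreedyContextualBandit(["A", "B"], exploration_rate=0.0)
bandit.update("mobile", "B", 1.0)   # mobile users converted on B
bandit.update("desktop", "A", 1.0)  # desktop users converted on A
bandit.update("desktop", "B", 0.0)
mobile_choice = bandit.choose("mobile")
desktop_choice = bandit.choose("desktop")
```

A production model would generalize across many attributes instead of keeping a separate table per context, but the same two steps apply: choose with some randomness, then learn from the observed outcome.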

Note: Optimizely does not support republishing CMABs that you have archived.

Configuration overview

To configure a CMAB, complete the following:

- (Prerequisite) Create a flag in your Feature Experimentation project.

- (Prerequisite) Handle user IDs.

  Note: If you are using a server-side SDK, you should configure a user profile service to ensure consistent user bucketing.

- Define User Attributes. These are the characteristics you provide to CMAB for personalization.

- Create and configure a CMAB rule.

- If you have not done so yet, implement the flag in your application's codebase using the Optimizely Feature Experimentation SDK's Decide method.

- Test your CMAB rule in a development environment. See Test and troubleshoot.
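For the user profile service prerequisite, the following is a minimal in-memory sketch. It assumes the `lookup(user_id)` and `save(user_profile)` interface and the `user_id` / `experiment_bucket_map` profile shape used by the Python SDK; the class name and sample IDs are hypothetical, so confirm the exact contract in your SDK's documentation. A real deployment would back this with a persistent store instead of a dictionary.

```python
class InMemoryUserProfileService:
    """Keeps user profiles in a dict (assumed lookup/save interface)."""

    def __init__(self):
        self._profiles = {}

    def lookup(self, user_id):
        # Return the stored profile for this user, or None if none exists.
        return self._profiles.get(user_id)

    def save(self, user_profile):
        # Profiles are keyed by their "user_id" field.
        self._profiles[user_profile["user_id"]] = user_profile


# Usage with a hypothetical profile payload.
service = InMemoryUserProfileService()
service.save({
    "user_id": "user123",
    "experiment_bucket_map": {"exp_1": {"variation_id": "var_a"}},
})
stored = service.lookup("user123")
```

An object like this is typically passed to the SDK client when it is constructed, so that bucketing decisions can be read back on later requests.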

Configure a CMAB

-

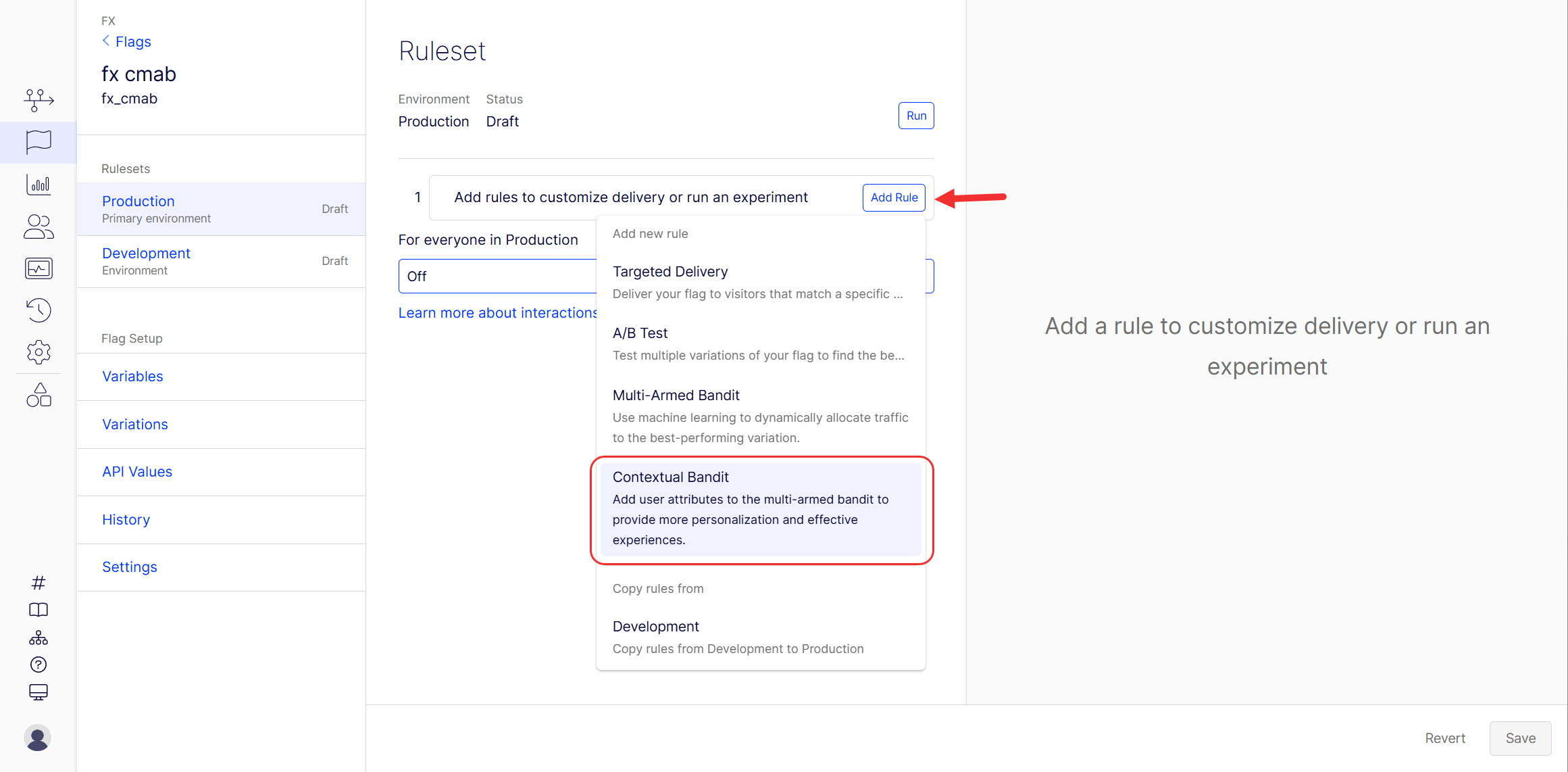

Go to Flags, select your flag, and select your environment.

-

Click Add Rule and select Contextual Bandit.

-

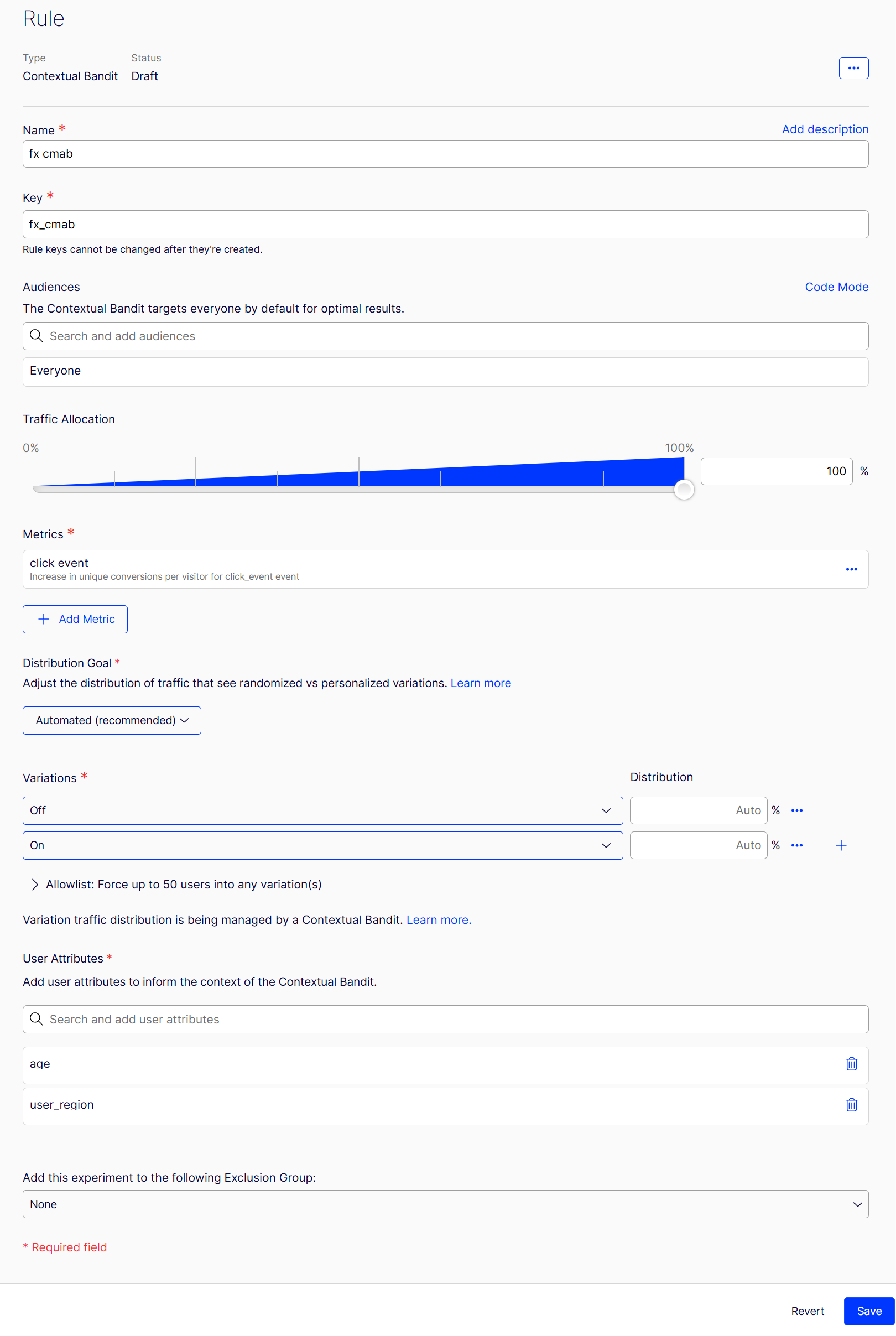

Enter a Name for the rule.

-

The Key is automatically created based on the Name. You can optionally update it.

-

(Optional) Search for and add audiences. To create an audience, see Target audiences. You should keep audiences broad for effective model learning and personalization.

-

Set the Traffic allocation slider to set the percentage of your total traffic to bucket into the experiment.

-

Add metrics based on tracked user events.

- For information on creating and tracking events, see Create events.

- For information about selecting metrics, see Choose metrics.

- For instructions on configuring metrics, see Create a metric in Optimizely using the metric builder.

NoteCMAB optimizes exclusively for the primary metric. However, you can include secondary or monitoring metrics for informational purposes, even though they do not influence the optimization process.

- Select a Distribution Goal. The distribution goal determines how the model decides which variation each visitor sees. Select one of the following modes:

  - Automated – The machine learning model sets the balance between exploration and exploitation dynamically, learning from historical data to optimize for each visitor's attributes and past responses. Exploration means showing random variations to learn more about what works. Exploitation means using the best-known variation based on collected data.

  - Manual – Adjust a slider to control this balance yourself: more exploration means more random testing, while more exploitation means relying on the model’s current best guess for each visitor. Because the goal is to maximize uplift and personalization through continuous learning and adaptation, you should generally keep the setting on Automated. Advanced users can fine-tune the exploration and exploitation ratio if desired.

  - Maximise Personalization – The slider automatically sets the exploration rate to 10% and the exploitation rate to 90%. This lets the model occasionally test new variations while ensuring most users receive the variation predicted to perform best based on their attributes and past interactions.

- Choose the Variations you want to optimize. Unlike A/B experiments, you do not need to compare to a baseline experiment because Feature Experimentation does not calculate statistical significance with CMAB optimizations. The CMAB manages the variation traffic distribution.

- Specify User Attributes for optimization. User attributes are the specific characteristics you provide to the model for personalization, such as age, location, or interests. The model uses these attributes to identify cohorts and optimize which variation to display to each user, learning over time from historical data about how different groups respond. As the model gathers more data, it can segment users into increasingly narrow groups, aiming for highly personalized experiences. To create attributes, see Define user attributes to build audiences.

- (Optional) Add the CMAB to an Exclusion Group.

- Click Save.
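To make the exploration and exploitation rates in the Distribution Goal step concrete, the sketch below estimates how traffic would split for one cohort at a given exploration rate. It assumes exploration picks uniformly among all variations, including the predicted best one. That assumption, along with the function name and variation keys, is illustrative and not a description of how Optimizely allocates traffic internally.

```python
def expected_traffic_shares(variations, predicted_best, exploration_rate):
    """Expected share of traffic per variation for one cohort.

    Assumes uniform random exploration across all variations.
    """
    if predicted_best not in variations:
        raise ValueError("predicted_best must be one of the variations")
    if not 0.0 <= exploration_rate <= 1.0:
        raise ValueError("exploration_rate must be between 0 and 1")

    # Every variation receives an equal slice of the exploration traffic.
    explore_share = exploration_rate / len(variations)
    shares = {variation: explore_share for variation in variations}
    # The predicted best variation also receives all exploitation traffic.
    shares[predicted_best] += 1.0 - exploration_rate
    return shares


# 10% exploration and 90% exploitation across four hypothetical variations.
shares = expected_traffic_shares(["a", "b", "c", "d"], "b", 0.10)
```

Under these assumptions, the predicted best variation receives 92.5% of the cohort's traffic and each of the other three receives 2.5%.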

Configure CMAB in the SDK

See the following docs for information on how to configure CMAB settings in the SDKs and agent, including caching behavior and prediction endpoint customization.

Key features

- AI-enhanced personalization – Use machine learning to understand user data and super-personalize the user experience, making experiences more meaningful and effective.

- Increased engagement and conversion – Make individual experiences more relevant, boost engagement rates, and drive higher conversions.

- Operational efficiency – Reduce the time and resources needed to analyze data and make decisions. This enables more efficient and faster operations.

- Data-driven decision making – Minimize guesswork and enable a more strategic approach to marketing and experimentation.

- Results page – A dedicated results page displays performance metrics, similar to existing reporting for Optimizely Web Experimentation and Personalization campaigns. The results page is currently in development.

Why CMABs do not show statistical significance

Traditional A/B testing aims to identify if a variation performs better or worse than the control, expressed through statistical significance. This helps you see if a change has the desired effect, letting you make iterative improvements. Fixed traffic allocation strategies can expedite the process of achieving statistical significance.

In contrast, Optimizely Experimentation's algorithms use a dynamic balance of exploration and exploitation to optimize user experiences. Exploration involves random testing to gather data, while exploitation uses the model's best guess to enhance personalization and uplift. You can adjust this balance using a slider. This approach focuses on maximizing overall performance by continuously learning and adapting, rather than solely aiming for statistical significance.
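One way to see why adaptive allocation and significance testing pull in different directions is to compare the standard error of the difference between two conversion rates under an even split and under a skewed split. This sketch uses the textbook two-proportion formula with made-up rates and sample sizes. It is not Optimizely's statistics engine.

```python
import math


def standard_error_of_difference(rate_a, n_a, rate_b, n_b):
    """Standard error of (rate_b - rate_a) for two independent proportions."""
    return math.sqrt(
        rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b
    )


# Same 10,000 visitors and the same hypothetical conversion rates in both cases.
even_split = standard_error_of_difference(0.10, 5_000, 0.12, 5_000)
skewed_split = standard_error_of_difference(0.10, 1_000, 0.12, 9_000)
```

With these numbers, the even split gives a standard error of about 0.0063 and the 10/90 split gives about 0.0101. A bandit that shifts traffic toward the better variation collects less data on the weaker one, so the comparison between them stays noisier than in a fixed-allocation test.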

Implement the experiment using the Decide method

Flag is implemented in your code

If you have already implemented the flag using a Decide method, you do not need to take further action. Optimizely Feature Experimentation SDKs are designed so you can reuse the same flag implementation for different flag rules.

Flag is not implemented in your code

If you have not implemented the flag yet, copy the sample flag integration code into your application code and edit it so that your feature code runs or does not run based on the output of the decision received from Optimizely.

Use the following example Decide method code to enable or disable the flag for a user.

Go

    // Decide if user sees a feature flag variation
    user := optimizely.CreateUserContext("user123", map[string]interface{}{"logged_in": true})
    decision := user.Decide("flag_1", nil)
    enabled := decision.Enabled

C#

    // Decide if user sees a feature flag variation
    var user = optimizely.CreateUserContext("user123", new UserAttributes { { "logged_in", true } });
    var decision = user.Decide("flag_1");
    var enabled = decision.Enabled;

Flutter

    // Decide if user sees a feature flag variation
    var user = await flutterSDK.createUserContext(userId: "user123");
    var decideResponse = await user!.decide("product_sort");
    // Read the flag state from the decision carried by the response
    var enabled = decideResponse.decision?.enabled;

Java

    // Decide if user sees a feature flag variation
    OptimizelyUserContext user = optimizely.createUserContext("user123", new HashMap<String, Object>() { { put("logged_in", true); } });
    OptimizelyDecision decision = user.decide("flag_1");
    Boolean enabled = decision.getEnabled();

JavaScript

    // Decide if user sees a feature flag variation
    const user = optimizely.createUserContext('user123', { logged_in: true });
    const decision = user.decide('flag_1');
    const enabled = decision.enabled;

PHP

    // Decide if user sees a feature flag variation
    $user = $optimizely->createUserContext('user123', ['logged_in' => true]);
    $decision = $user->decide('flag_1');
    $enabled = $decision->getEnabled();

Python

    # Decide if user sees a feature flag variation
    user = optimizely.create_user_context("user123", {"logged_in": True})
    decision = user.decide("flag_1")
    enabled = decision.enabled

React

    // Decide if user sees a feature flag variation
    // useDecision returns an array whose first element is the decision
    var [decision] = useDecision('flag_1', null, { overrideUserAttributes: { logged_in: true }});
    var enabled = decision.enabled;

Ruby

    # Decide if user sees a feature flag variation
    user = optimizely_client.create_user_context('user123', {'logged_in' => true})
    decision = user.decide('flag_1')
    enabled = decision.enabled

Swift

    // Decide if user sees a feature flag variation
    let user = optimizely.createUserContext(userId: "user123", attributes: ["logged_in":true])
    let decision = user.decide(key: "flag_1")
    let enabled = decision.enabled

For more detailed examples of each SDK, see:

- Android SDK example usage

- Go SDK example usage

- C# SDK example usage

- Flutter SDK example usage

- Java SDK example usage

- JavaScript SDK example usage – SDK versions 6.0.0 and later.

- JavaScript (Browser) SDK example usage – SDK versions 5.3.5 and earlier.

- JavaScript (Node) SDK example usage – SDK versions 5.3.5 and earlier.

- PHP SDK example usage

- Python SDK example usage

- React SDK example usage

- React Native SDK example usage

- Ruby SDK example usage

- Swift SDK example usage

Adapt the integration code in your application. Show or hide the flag's functionality for a given user ID based on the boolean value your application receives.

The goal of the Decide method is to separate the process of developing and releasing code from the decision to turn a flag on. The value this method returns is determined by your flag rules. For example, the method returns false if the current user is assigned to a control or "off" variation in an experiment.
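As a sketch of how application code might branch on the result, the following function reads only two fields from a decision object. `StubDecision` and `render_banner` are hypothetical names used so the example runs without the SDK. The `variation_key` field is assumed to match the property of that name on the Python SDK's decision object, so verify it against your SDK version.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StubDecision:
    """Hypothetical stand-in exposing the two fields this sketch reads."""
    enabled: bool
    variation_key: Optional[str] = None


def render_banner(decision):
    """Return the banner to show based on a flag decision."""
    if not decision.enabled:
        # Flag is off for this user: fall back to the existing experience.
        return "default banner"
    # Flag is on: branch on whichever variation was selected for this user.
    return f"banner for variation {decision.variation_key}"


off_banner = render_banner(StubDecision(enabled=False))
on_banner = render_banner(StubDecision(enabled=True, variation_key="free_shipping"))
```

Keeping the branching logic in one small function like this means the same code path works regardless of which rule produced the decision.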

Note: A user is evaluated against each rule in the flag's ruleset, in order, before being bucketed into a given rule variation or not.

See Interactions between flag rules for more information.
