Global holdouts

How to apply global holdouts in Optimizely Feature Experimentation.

Beta: This feature is in beta. Apply on the Optimizely beta signup page or contact your Customer Success Manager.

Global holdouts help you accurately measure the true impact of your A/B testing and experimentation efforts. You designate a small percentage of users as a control group, ensuring they do not experience any experiments or new feature rollouts. This lets you directly compare outcomes between users who experience variations identified as winners during A/B tests and those who only see the default "off" variation for feature flags in your project. This approach lets you quantify the cumulative impact of your testing program, answer critical questions from leadership about the value generated, and make informed decisions about future product and marketing strategies.

A/B testing optimizes products and enhances user experiences, but proving its value can be challenging without a clear measurement of the overall uplift generated. Quantifying its revenue impact remains difficult, as measuring contributions over a quarter or year often requires manual calculations and complex workarounds.

Global holdouts solve this problem by letting organizations set aside a small percentage of their user traffic, typically up to 5%, as a control group. These users are not exposed to any A/B tests or feature rollouts, ensuring a clean baseline for comparison. The holdout group only experiences the default product experience as configured in the "off" variation of your feature flags.

How global holdouts work

Configuration – Create a holdout in the platform, select the environment, define the primary metric to track, and choose the percentage of traffic to hold back. The system warns you if the holdout exceeds 5% of total traffic. The more visitors you withhold from experiments, the longer those experiments take to reach statistical significance.

Assignment – Visitors in the holdout group receive the default “off” variation for feature flags, regardless of ongoing A/B tests, targeted deliveries, or multi-armed bandits running on top of your flags.

Visibility – View the holdout and its results in a dedicated dashboard, letting your team monitor and analyze performance.

Results calculation – After experiments conclude and the winning variation for the experiment is manually identified in the application, the platform aggregates data from visitors who saw winning variations to compare against the holdout group. This lets you compare key metrics, such as revenue or engagement, to quantify the uplift generated by experimentation. See how to manually deploy a winning variation.
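The assignment step above can be pictured as deterministic bucketing: the same visitor always lands in the same bucket, and visitors in the holdout range get the "off" variation no matter what a rule would otherwise serve. The following Python sketch is illustrative only and is not the Feature Experimentation SDK's bucketing logic. The function names, the 10,000-bucket range, and the use of SHA-256 are assumptions made for this example.

```python
import hashlib

BUCKETS = 10_000  # assumed bucket range; gives 0.01% granularity


def bucket(user_id: str, holdout_key: str) -> int:
    """Map a user ID to a stable bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{holdout_key}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer and fold into the range.
    return int.from_bytes(digest[:8], "big") % BUCKETS


def in_holdout(user_id: str, holdout_key: str, percent: float) -> bool:
    """Return True if the user falls inside the held-back share of traffic."""
    threshold = round(percent / 100 * BUCKETS)
    return bucket(user_id, holdout_key) < threshold


def decide(user_id: str, holdout_key: str, percent: float, rule_variation: str) -> str:
    """Held-out users always get "off"; everyone else gets the rule's variation."""
    if in_holdout(user_id, holdout_key, percent):
        return "off"
    return rule_variation


if __name__ == "__main__":
    # Hypothetical holdout key and user IDs, for illustration only.
    users = [f"user-{i}" for i in range(10_000)]
    held = sum(in_holdout(u, "global_holdout", 5.0) for u in users)
    print(f"{held} of {len(users)} users held out")
```

Because the bucket depends only on the user ID and the holdout key, a visitor stays in (or out of) the holdout across sessions, which is what keeps the control group persistent.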

Create a holdout

-

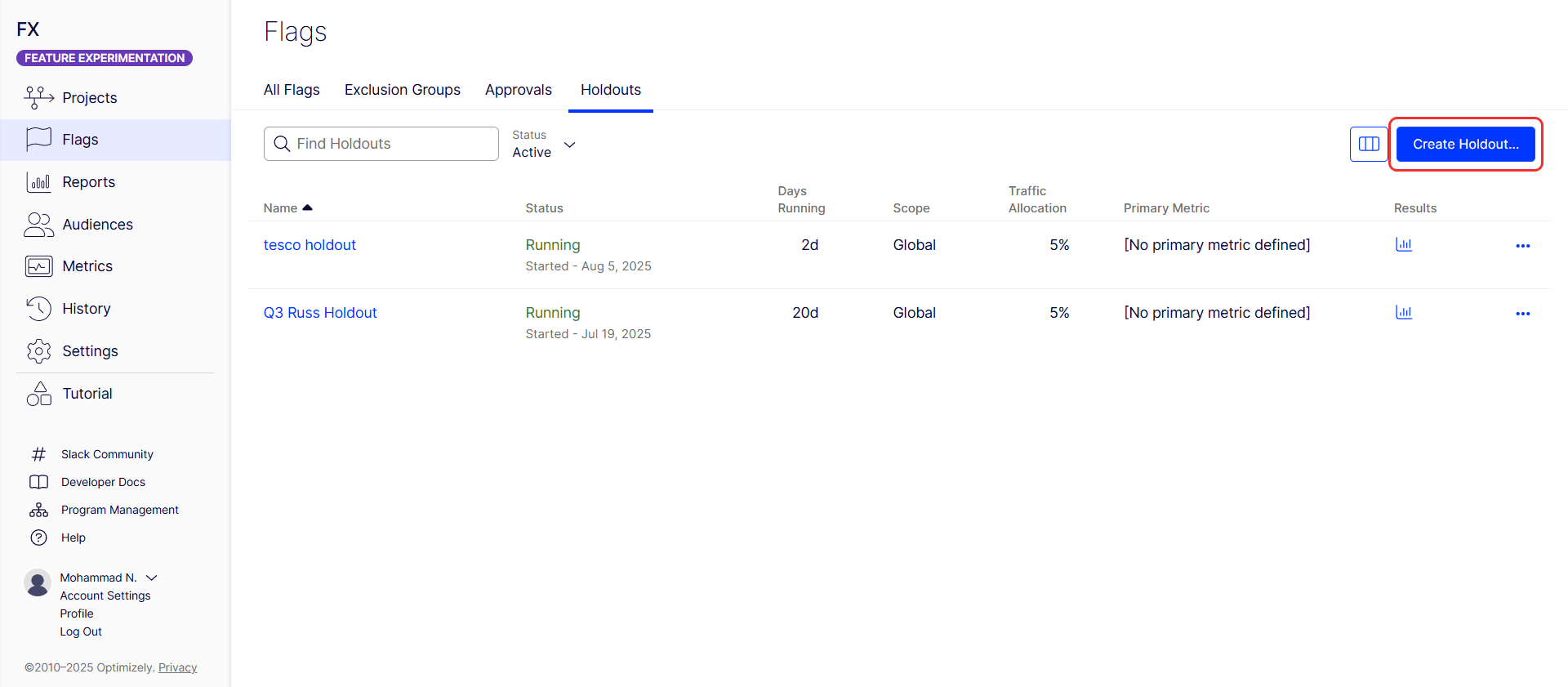

Go to Flags > Holdouts.

-

Click Create Holdout.

-

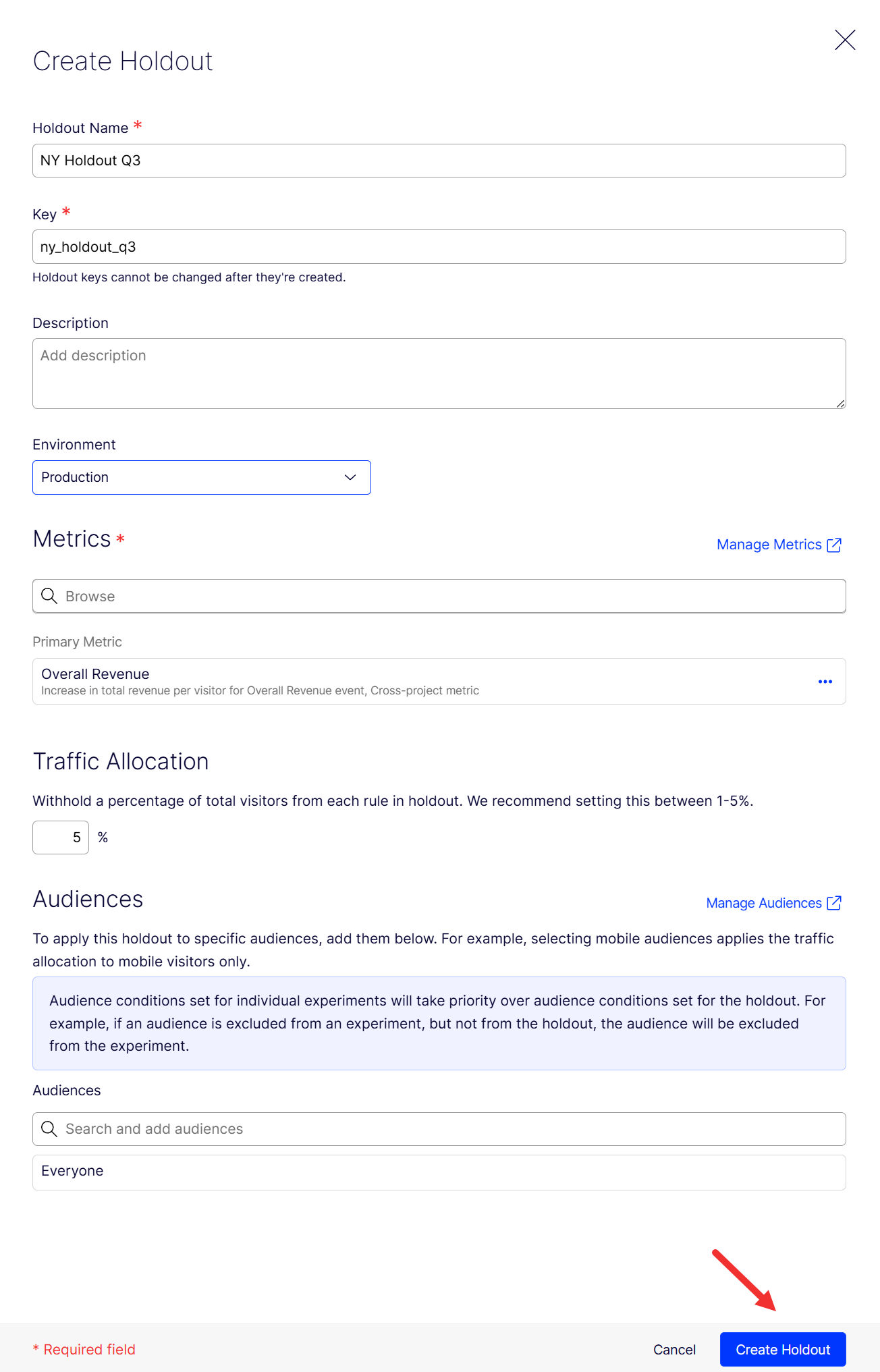

Enter a unique name in the Name field.

-

Edit the Holdout Key and optionally add a Description. Valid keys contain alphanumeric characters, hyphens, and underscores, are limited to 64 characters, and cannot contain spaces. You cannot modify the key after you create the holdout.

-

Select the Environment to scope the holdout. Like A/B tests, holdout results are separated by environment.

-

Choose the primary Metric. This is usually the main company metric to measure results. Optionally, you can choose secondary and monitoring metrics.

-

Set the percentage of traffic to be held back by Feature Experimentation from tests and new features (recommended not to exceed 5%). The system warns you if the holdout percentage exceeds 5%.

-

(Optional) Target a specific audience, although you should apply the holdout to everyone.

-

Click Create Holdout.
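If you generate holdout settings in a script before entering them in the application, you can pre-check them against the rules in the steps above. The sketch below is a hypothetical helper, not part of any Optimizely SDK or API. It assumes "alphanumeric" means ASCII letters and digits, and it mirrors the 5% warning threshold described in the traffic step.

```python
import re

# Assumption: keys are ASCII letters, digits, hyphens, and underscores, 1-64 chars.
KEY_PATTERN = re.compile(r"[A-Za-z0-9_-]{1,64}")
WARN_THRESHOLD_PERCENT = 5.0


def validate_holdout(key: str, traffic_percent: float) -> list[str]:
    """Return a list of error and warning messages; empty means no issues found."""
    messages = []
    if not KEY_PATTERN.fullmatch(key):
        messages.append(
            "error: key must be 1-64 characters of letters, digits, "
            "hyphens, or underscores, with no spaces"
        )
    if not 0 < traffic_percent <= 100:
        messages.append("error: traffic percentage must be above 0 and at most 100")
    elif traffic_percent > WARN_THRESHOLD_PERCENT:
        messages.append("warning: holdout exceeds 5% of traffic")
    return messages


if __name__ == "__main__":
    # Hypothetical key and percentages, for illustration only.
    print(validate_holdout("global_holdout_2025", 5))
    print(validate_holdout("global holdout", 12))
```

A percentage above 5% is reported as a warning rather than an error, matching the application, which warns but still lets you create the holdout.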

Start a holdout

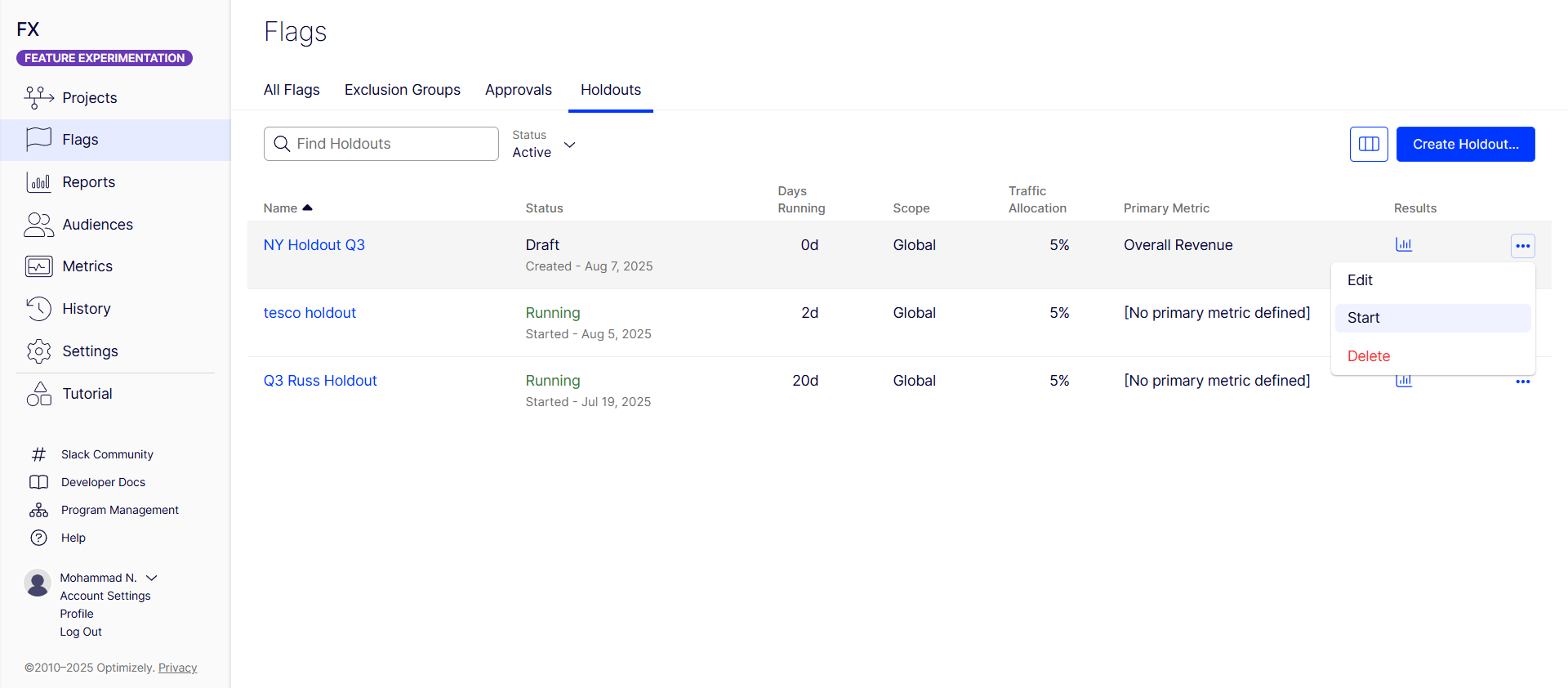

After you create the holdout, it displays in the Holdouts tab, where you can start it.

Click More options (...) for the holdout and click Start.

Visitors assigned to this holdout only see the default "off" variation for any flags within the holdout, regardless of any experiments Feature Experimentation would otherwise include them in. After you start a holdout, you cannot pause it. You can only conclude it permanently.

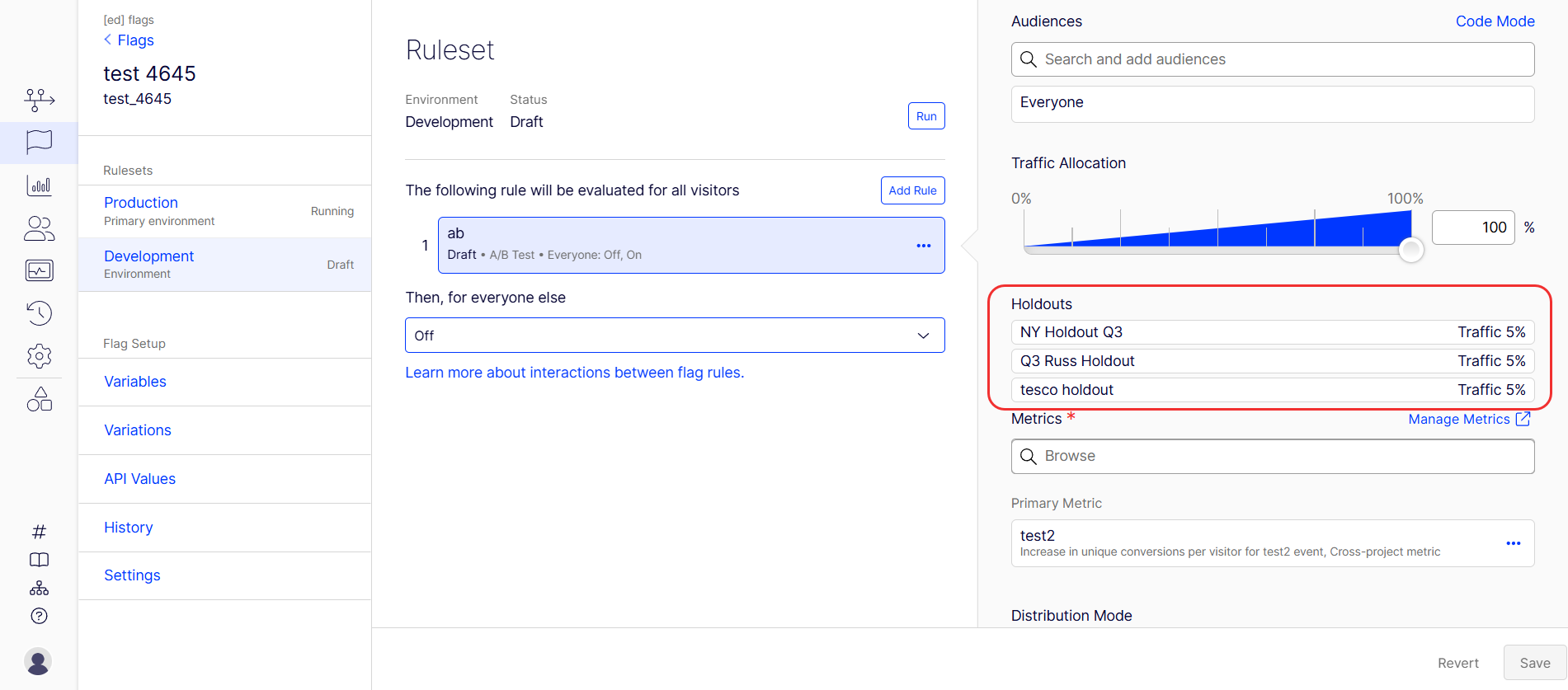

To see the holdouts that Feature Experimentation applied to a rule, go to Flags > Environment > Rule. The rule displays each applied holdout along with its allocated traffic.

Results calculation

After you conclude A/B tests and identify winning variations, data from these variations is aggregated and compared to the holdout group's data.

The comparison provides a clear measurement of uplift and the cumulative impact of experimentation, similar to a large-scale A/B test.

Learn how to conclude your A/B test and deploy the winning variation.

Warning: You must conclude your A/B test and deploy the winning variation so that the holdout analysis accurately reflects your experiment results. This step confirms the variation is part of the exposed group and includes its performance in the holdout results.
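To make the comparison concrete, the sketch below computes a relative uplift from two groups of per-visitor metric values: visitors who saw deployed winning variations, and visitors in the holdout. It is a simplified illustration with made-up numbers, not the platform's results engine, and it does not test for statistical significance. The function name and sample data are assumptions.

```python
from statistics import mean


def relative_uplift(exposed: list[float], holdout: list[float]) -> float:
    """Relative difference between the exposed group's mean and the holdout's mean."""
    baseline = mean(holdout)
    if baseline == 0:
        raise ValueError("holdout mean is zero; relative uplift is undefined")
    return (mean(exposed) - baseline) / baseline


if __name__ == "__main__":
    # Made-up revenue-per-visitor values, for illustration only.
    exposed_revenue = [12.0, 10.0, 11.0]
    holdout_revenue = [10.0, 10.0, 10.0]
    uplift = relative_uplift(exposed_revenue, holdout_revenue)
    print(f"Relative uplift: {uplift:.1%}")
```

With these sample values the exposed group averages 11.0 against a holdout baseline of 10.0, so the relative uplift is 10%.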

Conclusion

Global holdouts help you measure the success of your experimentation programs by maintaining a persistent control group and providing transparent, actionable results. They empower you to maximize the value of your A/B testing efforts and make data-driven decisions for growth. Additionally, they offer a robust methodology for long-term measurement of product changes and feature rollouts, letting you quantify the value of experimentation and justify investment in A/B testing.
