DXP and SEO

Describes search engine optimization in Optimizely.

SEO (Search Engine Optimization) is more than just ranking your website high in search engines such as Google or Bing. It is the art of understanding your website visitors and optimizing your site for their needs, including things like site speed, image optimization, and well-structured navigation. If you succeed, your website is likely to rank higher in search engines such as Google.

SEO is an enormous area that employs many people, and its details are not in the scope of this developer guide. However, this topic contains a checklist with the most important things you, as a developer, can do in Optimizely to improve your site's attractiveness and ranking in search engines. For SEO tips aimed at editors, see the Optimizely User Guide.

Customize your templates

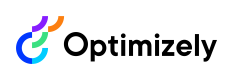

One of the developers' most important site optimization tasks is setting up the site templates to let the editors consistently configure SEO metadata on pages, such as titles, keywords, and page descriptions. This is an example from the Alloy sample site, where SEO-related properties are gathered on an SEO tab:

Metadata

The following metadata tags can be useful to have:

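- Title – The title is typically shown as the clickable headline of your page on the Search Engine Results Page (SERP), so it strongly affects how the page is presented there.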

- Description – The description is often shown as the snippet below the title on the SERP. Google does not use this tag for page ranking, but it is important for the click-through rate from the search results.

- No indexing – Tells search engine robots not to index pages that should not appear in search results.

- No follow – Tells robots not to follow links. You can set the rel attribute to nofollow directly on a link. This asks search engines not to consider that link when indexing your site, so the link does not affect the target page's SEO ranking. You can also add the nofollow directive to the robots meta tag or the X-Robots-Tag HTTP header. This asks search engines not to follow any of the links on the page.
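The following is a minimal sketch of how a template might turn these editor-controlled properties into markup. It renders the title, the description, and the robots meta tag, plus a single nofollow link. Python is used only for brevity; in an Optimizely site, this logic would typically live in a Razor view. `SeoFields`, `render_seo_head`, and `nofollow_link` are hypothetical names, not Optimizely APIs.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class SeoFields:
    """Hypothetical SEO properties an editor fills in on the SEO tab."""
    title: str
    description: str
    no_index: bool = False
    no_follow: bool = False


def render_seo_head(fields: SeoFields) -> str:
    """Render the <title>, description, and robots meta tags for one page."""
    lines = [
        f"<title>{escape(fields.title)}</title>",
        f'<meta name="description" content="{escape(fields.description)}">',
    ]
    # Crawlers default to "index, follow", so a robots meta tag is only
    # emitted when the editor has switched on at least one directive.
    directives = [name for name, on in (("noindex", fields.no_index),
                                        ("nofollow", fields.no_follow)) if on]
    if directives:
        lines.append(f'<meta name="robots" content="{", ".join(directives)}">')
    return "\n".join(lines)


def nofollow_link(href: str, text: str) -> str:
    """Render a single link that asks search engines not to follow it."""
    return f'<a href="{escape(href)}" rel="nofollow">{escape(text)}</a>'
```

The helpers escape all editor input with `html.escape`, so a title such as "Tips & Tricks" cannot break the surrounding markup.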

You can also extend the metadata with:

- Open Graph tags – The Open Graph meta tags are code snippets that control how URLs are displayed when shared on social media platforms like Facebook, LinkedIn, and Twitter. If you do not use Open Graph tags, these platforms may display the wrong image or description when someone shares one of your URLs. Read more on The Open Graph protocol.

- Schema.org information – Schema.org is a semantic vocabulary of tags you can add to your HTML code to improve how search engines read and represent your page in the search results. One way to use the schema markup is to create "rich snippets." Rich snippets enhance the descriptions in the search results by displaying, for example, contact information, event information, product ratings, or videos. Rich snippets do not directly affect Google's ranking of your site, but they can entice more visitors to click your links in the search results.

For example, a recipe page can use schema markup so that its recipe ratings are shown in the search results.

Schema.org differs from Open Graph in purpose: Open Graph is for the relation and typing of content, while schema.org is for the structured representation of content. Schema.org information (as JSON-LD or microdata attributes) is what drives the site search box shown in Google's results and accurate breadcrumbs.

Read more on Schema.org Markup.

- Image alt attributes – The main purpose of alt attributes on images is web accessibility. Screen readers use the alt text to help visually impaired visitors understand the images used on the site, and browsers display it if the image cannot be loaded. Search engines also use alt attributes when crawling a site. Because they cannot reliably interpret image content on their own, they use the alt attribute to better understand the image and how it should be indexed. Editors can add image alt attributes in the TinyMCE editor.

- Alternative language URLs – If you have multiple versions of a page for different languages or regions, you can use hreflang annotations to tell Google which version is the most appropriate for each language or region. Add the alternative language URLs to the head element, for example, as link elements with the hreflang attribute. See Tell Google about localized versions of your page.
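The following Python sketch shows what the markup for these extensions can look like when generated from page data. It produces the Open Graph properties `og:title`, `og:description`, `og:url`, `og:image`, and `og:type`. It also produces a schema.org `BreadcrumbList` in JSON-LD and one hreflang link per language version. All function names are hypothetical, and an Optimizely site would normally render this in its Razor layout. The sample URLs are placeholders.

```python
import json
from html import escape


def render_open_graph(title: str, description: str, url: str, image_url: str) -> str:
    """Render the basic Open Graph properties (title, description, url, image, type)."""
    props = {"og:title": title, "og:description": description,
             "og:url": url, "og:image": image_url, "og:type": "website"}
    return "\n".join(
        f'<meta property="{key}" content="{escape(value)}">' for key, value in props.items()
    )


def render_breadcrumb_jsonld(crumbs: list[tuple[str, str]]) -> str:
    """Render a schema.org BreadcrumbList as a JSON-LD script element.

    crumbs is a list of (name, absolute URL) pairs, from the start page down.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(crumbs, start=1)
        ],
    }
    # Escape "<" so that a value such as "</script>" cannot close the element early.
    payload = json.dumps(data).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


def render_hreflang_links(alternates: dict[str, str]) -> str:
    """Render one <link rel="alternate" hreflang="..."> element per language version."""
    return "\n".join(
        f'<link rel="alternate" hreflang="{lang}" href="{escape(href)}">'
        for lang, href in alternates.items()
    )
```

Each language version of a page should list all versions, including itself. You can add an `x-default` entry to the `alternates` dictionary for visitors whose language has no dedicated version.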

Google PageSpeed Insights is a good tool for testing your pages' performance and basic SEO.

Responsive design

Search engines such as Google favor websites that have a responsive design and are optimized for mobile phones and tablets. It is therefore important to take a mobile-first approach to the design of your website, unless your site is one of the few that are only accessed from desktops. Responsive sites can also load faster on mobile devices, which is another reason to use them.

For responsive designs, use the viewport meta element, which controls the layout of web pages on mobile browsers. Add it to the head section of your web page:

```html
<meta name="viewport" content="width=device-width, initial-scale=1">
```

Optimize website loading times

Another important aspect of good SEO is optimizing site loading times. Search engines reward a faster website over a slower one. Things to take into consideration include:

- Use a highly available redundant network with auto scale-up and scale-out if possible.

- Use a CDN to cache site assets so that they load quickly. Optimizely DXP uses Cloudflare's CDN for this.

- Use responsive design and image size optimizations to decrease loading times.

Ideally, you want to serve visitors high-quality, high-resolution images. Unfortunately, high-resolution images increase your website's loading time, especially for users on smaller devices. This harms their user experience and can lower your SEO ranking. Set up your site to use a set of preconfigured image sizes so that each visitor gets an image quality appropriate for their screen size and a fast loading time. Automate this so that your editors do not need to think about image sizes.

One way to easily generate different image sizes on an Optimizely website is the ImageResizer add-on, which lets you define a srcset in the image tags for breakpoint optimization.

```html
<img class="img-fluid"
     src="/globalassets/pictures/image.jpg?w=800"
     srcset="/globalassets/pictures/image.jpg?w=640 640w,
             /globalassets/pictures/image.jpg?w=750 750w,
             /globalassets/pictures/image.jpg?w=800 800w,
             /globalassets/pictures/image.jpg?w=1080 1080w,
             /globalassets/pictures/image.jpg?w=1440 1440w,
             /globalassets/pictures/image.jpg?w=1600 1600w,
             /globalassets/pictures/image.jpg?w=2400 2400w"
     sizes="(min-width: 800px) 800px, 100vw"
     alt="Image description">
```

Another way to optimize your image handling is the free ImageProcessor add-on. See this blog post: Episerver and ImageProcessor: more choice for developers and designers.
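To automate image sizes as recommended, you can generate the srcset list from one fixed set of breakpoint widths instead of writing it by hand. The Python sketch below makes two assumptions. First, the resizing add-on reads the target width from the `w` query parameter, as in the HTML example above. Second, the image path has no query string yet. `build_srcset`, `build_img_tag`, and `DEFAULT_WIDTHS` are hypothetical names.

```python
from collections.abc import Iterable
from html import escape

# Preconfigured breakpoint widths, copied from the example above, so that
# editors never have to choose image sizes themselves.
DEFAULT_WIDTHS = (640, 750, 800, 1080, 1440, 1600, 2400)


def build_srcset(path: str, widths: Iterable[int]) -> str:
    """Return a srcset value with one "<path>?w=<width> <width>w" candidate per width."""
    # Sorted smallest first; candidates are comma-separated with no trailing comma.
    return ", ".join(f"{path}?w={w} {w}w" for w in sorted(widths))


def build_img_tag(path: str, alt: str, default_width: int = 800,
                  sizes: str = "(min-width: 800px) 800px, 100vw",
                  widths: Iterable[int] = DEFAULT_WIDTHS) -> str:
    """Render a responsive <img> tag; the browser picks a candidate based on sizes."""
    return (
        f'<img class="img-fluid" src="{path}?w={default_width}" '
        f'srcset="{build_srcset(path, widths)}" sizes="{sizes}" '
        f'alt="{escape(alt)}">'
    )
```

Keeping the widths in one constant means that a change to the breakpoints applies to every image on the site. The `src` attribute remains a fallback for browsers that ignore `srcset`.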

Caching of images

Images, like other assets on your website, need to be cached to give good performance. Generally, the expiry time for assets can be longer than for content, as assets are probably not updated as often. You need to balance two risks. If cache times are too short, cached content expires quickly and performance suffers. If cache times are too long, cached content is not refreshed and site visitors are served outdated content.

To avoid serving visitors outdated content from their local cache, you can add a version number or file hash to the URL and set the cache lifetime to at least a month. This technique is known as cache busting. See How To Easily Add Cache Busting On Your Optimizely Website by Jon D Jones for details.
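As a minimal illustration of cache busting, the Python sketch below appends a short content hash to an asset URL. It also returns a long-lived `Cache-Control` header. Because the hash changes whenever the file changes, browsers fetch the new version even though the old URL is cached for a long time. The `v` parameter name, the 12-character hash length, and the one-year lifetime are assumptions. One year is well above the one-month minimum.

```python
import hashlib

ONE_YEAR_SECONDS = 365 * 24 * 60 * 60


def versioned_url(path: str, content: bytes) -> str:
    """Append a short hash of the file content so the URL changes when the file does."""
    digest = hashlib.sha256(content).hexdigest()[:12]
    # Keep any existing query string (for example, ?w=800 for resized images).
    separator = "&" if "?" in path else "?"
    return f"{path}{separator}v={digest}"


def cache_headers(max_age_seconds: int = ONE_YEAR_SECONDS) -> dict[str, str]:
    """Long-lived caching is safe for versioned URLs because a changed file gets a new URL."""
    return {"Cache-Control": f"public, max-age={max_age_seconds}, immutable"}
```

The `immutable` directive tells browsers that support it not to revalidate the file while it is fresh. This is only safe because every change produces a new URL.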

URLs

A URL is a human-readable address for a resource on the web. Its domain name stands in for the IP address that computers use to communicate with servers, and its path tells users and search engines about the structure of the website.

Using good, SEO-optimized URLs has several benefits. A good URL improves the user experience, which both search engines and visitors reward, and a clear and concise URL can increase the click-through rate.

Things to consider when optimizing URLs:

- Keep them short. Avoid unnecessary words, but make sure the URL still makes sense.

- Ensure the URLs contain your keywords (that is, the terms people use when trying to find your content).

- Use absolute links and not relative links, if possible.

- Use simple addresses for campaigns.

- Avoid dynamic URL parameters where possible. (If you must use them, make sure search engines disregard the parameters when indexing the site, for example by pointing to the parameter-free URL with a canonical tag.)

- Do not allow special characters in your links (like &%*<> or blank spaces). These characters make the URL less legible, and search engines may be unable to crawl them.

- Use only lowercase, as search engines might see example.com/products and example.com/Products as two different pages.

- Make sure your links are valid. See the link validation scheduled job.

- Make sure obsolete content is removed or expired so that it does not confuse search engines.

- For duplicate content whose URL you do not want to remove, create a 301 (permanent) redirect to the preferred URL.

- You should use a canonical tag if two URLs serve similar content.

The canonical tag is an HTML link element with the attribute rel="canonical". You add it to the HTML of a duplicated page and point its href at the main page. This tells search engines that the main page's URL is the preferred one and that they should not index the duplicated pages.
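The Python sketch below applies several of the URL guidelines above. `slugify` turns a page name into a short, lowercase URL segment without special characters or blank spaces. `canonical_link` renders a canonical link element for an absolute URL. Both names are hypothetical. Optimizely generates URL segments itself, so treat this as an illustration of the rules rather than of the platform's behavior. The sketch also makes some assumptions. Dropping non-ASCII characters only suits Latin-script languages. Stripping the whole query string assumes that parameters never change a page's main content.

```python
import re
import unicodedata
from html import escape
from urllib.parse import urlsplit, urlunsplit


def slugify(name: str) -> str:
    """Turn a page name into a short, lowercase, hyphen-separated URL segment."""
    # Split accented letters into base letter plus combining accent, then
    # drop everything that is not ASCII (so "é" becomes "e").
    ascii_name = unicodedata.normalize("NFKD", name).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of characters other than a-z and 0-9 (spaces, &, %, *,
    # <, > and so on) into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_name.lower())
    return slug.strip("-")


def canonical_link(url: str) -> str:
    """Render a canonical link element: lowercase host and path, no query or fragment."""
    parts = urlsplit(url)
    canonical = urlunsplit((parts.scheme, parts.netloc.lower(), parts.path.lower(), "", ""))
    return f'<link rel="canonical" href="{escape(canonical)}">'
```

For example, `slugify("Products & Services 2024")` gives `products-services-2024`. Tracked campaign links such as `https://Example.com/Products/?utm_source=x` all point search engines at `https://example.com/products/`.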

For more technical information on rewriting URLs to a more friendly format, see Routing in the Content Cloud Developer Guide.

Sitemaps and robots files

Sitemaps and robots.txt are two types of text files placed in the website's root folder. They help ensure that the right content is indexed and duplicated content is excluded.

Sitemaps

A sitemap is an XML file that follows the defined format for sitemaps. It lists the URLs on your site and their relative priority within the site, and search engines use it to crawl and index your site correctly. The sitemap does not directly affect your site's ranking in search engines, but a properly organized sitemap can influence it indirectly. This is especially true for websites that contain many pages in a deep structure below the start page.

The sitemap should not be a static file but dynamically generated, preferably at least daily. To automate this, see the add-ons found under SEO automation add-ons: Sitemaps and robots files.
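The following is a minimal Python sketch of the generation step. It serializes a list of pages to sitemap XML in the sitemaps.org 0.9 format, with `loc`, `lastmod`, and `priority` for each URL. `SitemapEntry` and `build_sitemap` are hypothetical names. The sketch leaves out how the entries are collected, for example by a daily scheduled job that walks the content tree, and how the file is served at the site root.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class SitemapEntry:
    loc: str               # absolute URL of the page
    lastmod: date          # date the page was last changed
    priority: float = 0.5  # 0.0 to 1.0, relative to other pages on the same site


def build_sitemap(entries: list[SitemapEntry]) -> bytes:
    """Serialize entries to sitemap XML, including the XML declaration."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry.loc
        ET.SubElement(url, "lastmod").text = entry.lastmod.isoformat()
        ET.SubElement(url, "priority").text = f"{entry.priority:.1f}"
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

ElementTree escapes characters such as `&` in URLs automatically, which hand-built XML strings often get wrong.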

Robots.txt

The robots.txt text file is placed at the root of your domain, and search engines check it for instructions on how to crawl your site. It is recommended to have a robots.txt file, even an empty one, because most search engines look for it. If you do not have one, search engines can crawl and index your entire site.
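As a sketch of what a generated robots.txt can contain, the Python function below writes one rule group for all crawlers. It adds one `Disallow` line per blocked path, or an empty `Disallow:` line that blocks nothing, and an optional `Sitemap` line with the absolute sitemap URL. `build_robots_txt` and the example paths are hypothetical. These rules only control crawling, not indexing.

```python
from collections.abc import Iterable
from typing import Optional


def build_robots_txt(disallow: Iterable[str] = (),
                     sitemap_url: Optional[str] = None) -> str:
    """Build a robots.txt that applies the same crawl rules to every crawler."""
    lines = ["User-agent: *"]
    # An empty "Disallow:" line means that nothing is disallowed.
    lines += [f"Disallow: {path}" for path in disallow] or ["Disallow:"]
    if sitemap_url:
        # Tell crawlers where the sitemap is; the URL should be absolute.
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"
```

For example, `build_robots_txt(["/search/"], "https://example.com/sitemap.xml")` returns four lines: `User-agent: *`, `Disallow: /search/`, and the `Sitemap` line, followed by a final newline.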

Crawling and indexing are not the same. A URL that search engines do not crawl can still be indexed if internal or external links point to it. Crawling is when search engines look through each URL's content. Indexing is when search engines store and organize the information they find when crawling the internet.

Do not use robots.txt to stop indexing. URLs blocked in robots.txt can still be indexed, and both search engines and malicious bots, such as harvesting bots, may disregard robots.txt.

To stop indexing, you should instead consider:

- Meta noindex; see Google's: Robots meta tag, data-nosnippet, and X-Robots-Tag specifications.

- X-Robots-Tag HTTP header; see Google's: Robots meta tag, data-nosnippet, and X-Robots-Tag specifications.

- Protect sensitive information behind password login.
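To make the X-Robots-Tag option concrete, the following Python sketch builds the header for a response. Unlike a meta tag, the header also works for non-HTML files such as PDFs. The second helper reflects a related pitfall. A crawler can only see a noindex directive on a URL it is allowed to crawl, so a page you want kept out of the index should not also be blocked in robots.txt. `robots_header` and `noindex_is_visible` are hypothetical helpers. The prefix matching is a simplification, because real robots.txt rules also support wildcards.

```python
def robots_header(no_index: bool, no_follow: bool = False) -> dict[str, str]:
    """Return an X-Robots-Tag header for the response, or no header at all."""
    directives = []
    if no_index:
        directives.append("noindex")
    if no_follow:
        directives.append("nofollow")
    return {"X-Robots-Tag": ", ".join(directives)} if directives else {}


def noindex_is_visible(path: str, disallowed_prefixes: list[str]) -> bool:
    """Crawlers only see noindex on URLs they may crawl, so the path must not
    also match a Disallow prefix in robots.txt (simplified prefix matching)."""
    return not any(path.startswith(prefix) for prefix in disallowed_prefixes)
```

For example, a report served at `/reports/q1.pdf` can get `robots_header(True)` added to its response headers. `noindex_is_visible("/reports/q1.pdf", ["/search/"])` confirms that crawlers can still reach the file and read the directive.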

Automatic landing pages

An "automatic landing page" is content (for example, a page type instance) that renders differently depending on user input. It is a form of personalized content. For example, a Google search result can point to an automatic landing page that shows content related to the visitor's Google search.

Optimizely Content Recommendations

Optimizely Content Recommendations is part of the Optimizely Digital Experience Platform (DXP). It analyzes website visitors' behavior and personalizes content to match their interests. Personalized content can make the site more attractive to visitors and may improve its SEO ranking. It can also be used to fine-tune your Google Ads campaigns by providing AI insights into specific KPIs.

SEO automation add-ons

SEO automation tools increase your ROI with three main features:

Here are some useful add-ons for analytics and automating SEO tasks.

Google Analytics for Optimizely

Install the Optimizely Google Analytics add-on to get a Google Analytics dashboard directly in the Optimizely user interface.

ContentKing

The third-party tool ContentKing uses real-time auditing and content tracking to monitor your website and assign an SEO score to each page.

Using the ContentKing CMS API, you can trigger priority page auditing. This tells ContentKing that a page has changed and requests a re-evaluation of its SEO score. See this blog post by Mark Prins on how to integrate ContentKing with Optimizely.

Siteimprove

The Siteimprove Website Optimizer is another free add-on found on the Optimizely Marketplace.

It streamlines workflows for your web teams and lets them fix errors and optimize content directly within the Optimizely editing environment. The Siteimprove add-on also has features like:

- Alerts editors of misspellings and broken links.

- Provides information on readability levels and accessibility issues (A, AA, AAA conformance level).

- Provides insights on different SEO aspects, such as technical, content, UX, and mobile.

- Displays information on page visits, page views, feedback rating, and comments.

404 handler

The 404 handler is a free add-on on the Optimizely NuGet feed. It gives you better control over your 404 pages and allows redirects for old URLs that no longer work.

See the GitHub repository documentation.
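The core idea of redirecting old URLs can be sketched in a few lines of Python. Before returning a 404, the site looks up the requested path in a map of retired URLs and answers with a 301 (permanent) redirect when it finds a match. This is not the 404 handler add-on's API or configuration format. `REDIRECTS` and `resolve_not_found` are hypothetical, and the add-on stores and manages its redirects in its own way.

```python
from typing import Optional

# Hypothetical map of retired URLs to their replacements. Keys are stored
# lowercase and without a trailing slash so that lookups are forgiving.
REDIRECTS = {
    "/old-products": "/products/",
    "/about-us/history": "/about/",
}


def resolve_not_found(path: str) -> tuple[int, Optional[str]]:
    """Decide the response for a request that matched no content.

    A known retired URL gets a 301 (permanent) redirect so that visitors and
    search engines move to the new URL; anything else stays a 404.
    """
    key = path.lower().rstrip("/") or "/"
    target = REDIRECTS.get(key)
    return (301, target) if target is not None else (404, None)
```

Using 301 rather than a temporary 302 signals that the move is permanent, so search engines replace the old URL with the new one in their index.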

Sitemaps and robots files

- POSSIBLE.RobotsTxtHandler is a free add-on found on the Optimizely NuGet feed. It handles the delivery and modification of the robots.txt file.

- The SIRO Sitemap and Robots Generator is a free add-on on the Optimizely Marketplace. It lets you generate configurable and scalable dynamic sitemap.xml and robots.txt files with support for multi-site, multilingual Optimizely solutions.

Related topics

- Search Engine Optimization (SEO) Starter Guide by Google

- How Search Engines Work: Crawling, Indexing, and Ranking by Moz

- Search Quality Evaluators Guidelines by Google

- The Web Developer's SEO Cheat Sheet by Moz

- SEO Cheat Sheet: Anatomy of a URL by Moz

- Responsive images with Episerver and ImageResizer by Creuna
