Use whitelisting

Note

The QA approaches here are used to put yourself into a specific variation of an A/B Test or Feature Test. They aren't supported for rollouts yet.

In Full Stack projects, you can use whitelisting to QA your experiments. Publish your experiment live and enable whitelists to show specified variations to a few select users. When you activate the experiment for these users, they bypass audience targeting and traffic allocation and always see the variation you specify for them. Users who aren't whitelisted must pass audience targeting and traffic allocation to see the live experiment and variations.

For example, imagine that you create an experiment that compares Variation A and Variation B. You want to QA the experiment's live behavior and show the variations to a few key stakeholders. Create a whitelist that includes the user IDs for the people who should see the live experiment.

To ensure that only your whitelisted users can see the experiment, create an audience targeted to an attribute no user will have or set the experiment's traffic allocation to 0%. After QA is complete, establish your production settings for audience targeting and traffic allocation.

Optimizely allows you to whitelist up to 10 users per experiment.

Whitelists are included in your datafile in the forcedVariations field. You don't need to do anything differently in the SDK; if you've set up a whitelist, experiment activation returns the variation that the whitelist specifies for that user. Whitelisting overrides audience targeting and traffic allocation. Whitelisting does not work if the experiment is not running, but you can start an experiment at 0% traffic or in a staging environment to test with whitelisting.
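
The precedence described above can be sketched as follows. This is a simplified illustration, not the SDK's actual implementation: the decide function, its passes_targeting and bucketed_variation parameters, and the experiment key are hypothetical, and the "Running" status value is an assumption about how the datafile marks a running experiment. A real datafile entry contains more fields than shown here.

```python
# Simplified, hypothetical model of how a whitelist takes precedence.
# Only `forcedVariations` is named in the text above; the other fields
# and values are illustrative assumptions.

experiment = {
    "key": "my_experiment",           # hypothetical experiment key
    "status": "Running",              # assumed status value
    "forcedVariations": {             # user ID -> variation key
        "qa_user_1": "variation_1",
        "qa_user_2": "variation_2",
    },
}


def decide(experiment, user_id, passes_targeting=False, bucketed_variation=None):
    """Return the variation key a user would see, or None."""
    # Whitelisting does not work if the experiment is not running.
    if experiment["status"] != "Running":
        return None
    # A whitelisted user bypasses audience targeting and traffic allocation.
    forced = experiment["forcedVariations"].get(user_id)
    if forced is not None:
        return forced
    # Everyone else must pass targeting and be allocated traffic.
    if passes_targeting:
        return bucketed_variation
    return None
```

With traffic allocation at 0%, no non-whitelisted user is bucketed, so in this model only qa_user_1 and qa_user_2 receive a variation.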

Note

In general, we recommend setting up forced bucketing rather than using whitelisting because you can set the variation in real time. In contrast, whitelisting requires waiting for your app to synchronize the datafile.

When to use whitelisting

Use whitelisting only for preview, experimenting, and QA. You can also use whitelisting when you're unit testing your experiments in code with a mock datafile.

You can whitelist up to 10 user IDs per experiment. The limit exists because:

- Forcing variations with a large number of user IDs will bias your experiment results.

- Whitelisting increases the size of the datafile.

To target an experiment to a larger group of users for QA, such as all employees in your organization or a staging environment, use audiences instead. Create an attribute that every user in the group will share, and target the experiment to an audience that contains that attribute.
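
As a minimal sketch of the audience approach, the snippet below models the simplest case: an audience that matches users who carry one shared attribute. The matches_audience helper and the is_employee and plan attributes are hypothetical. In practice you define the audience in Optimizely and the SDK evaluates it; your application only supplies the user's attributes when it activates the experiment (for example, as the third argument to activate in the Python SDK).

```python
def matches_audience(attributes, required):
    """Return True if every required attribute is present with the same value."""
    return all(attributes.get(name) == value for name, value in required.items())


# Hypothetical audience: users whose `is_employee` attribute is True.
qa_audience = {"is_employee": True}

# Attributes your application would pass along with the user ID.
employee_attributes = {"is_employee": True, "plan": "free"}
customer_attributes = {"plan": "free"}

employee_in_audience = matches_audience(employee_attributes, qa_audience)
customer_in_audience = matches_audience(customer_attributes, qa_audience)
```

Because membership depends on an attribute rather than on individual user IDs, the group can grow without adding entries to the datafile.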

Create a whitelist

Here's how to create a whitelist for an experiment in a Full Stack project.

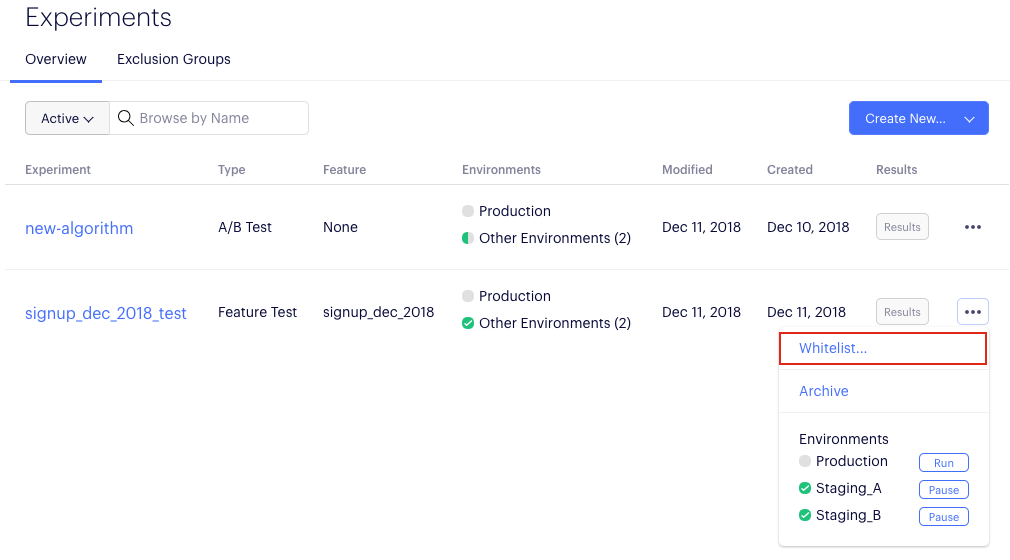

- Navigate to the Experiments dashboard.

- Click the Actions icon (...) for the experiment and select Whitelist.

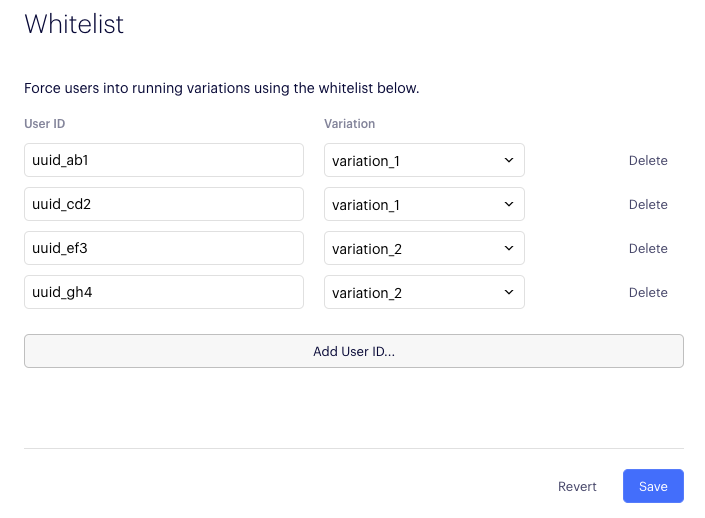

- Specify user IDs and corresponding variations you want to force for those users.

In this example, we forced two visitors into variation_1 and two visitors into variation_2.

- Pass in the whitelisted user IDs to the SDK using the Activate method.

Important

The user IDs used in the whitelist must exactly match the user IDs passed through the SDK in Activate. Otherwise, whitelisting will not work. These user IDs are often anonymous and cryptic (for example, a cookie value), so copy and paste them rather than retyping them.
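
As a rough sketch of this last step, the snippet below passes a user ID to an activate call and shows why an exact match matters. StubClient is a hypothetical stand-in so that the example runs without the SDK; it assumes the real client exposes activate(experiment_key, user_id), as the Python SDK does. The experiment key and user IDs are made up.

```python
class StubClient:
    """Hypothetical stand-in for an SDK client; it models only the whitelist."""

    def __init__(self, whitelist):
        self._whitelist = whitelist  # user ID -> variation key

    def activate(self, experiment_key, user_id):
        # Exact string comparison: no trimming and no case folding.
        # In the real SDK, a user who is not whitelisted would fall through
        # to normal audience targeting and traffic allocation instead.
        return self._whitelist.get(user_id)


client = StubClient({"oeu1383080393924r0.5047": "variation_1"})

# The ID copied exactly from the whitelist is forced into its variation.
exact = client.activate("my_experiment", "oeu1383080393924r0.5047")

# A truncated or mistyped ID is treated as a different user.
mistyped = client.activate("my_experiment", "oeu1383080393924r0.504")
```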
