Datafile versioning and management

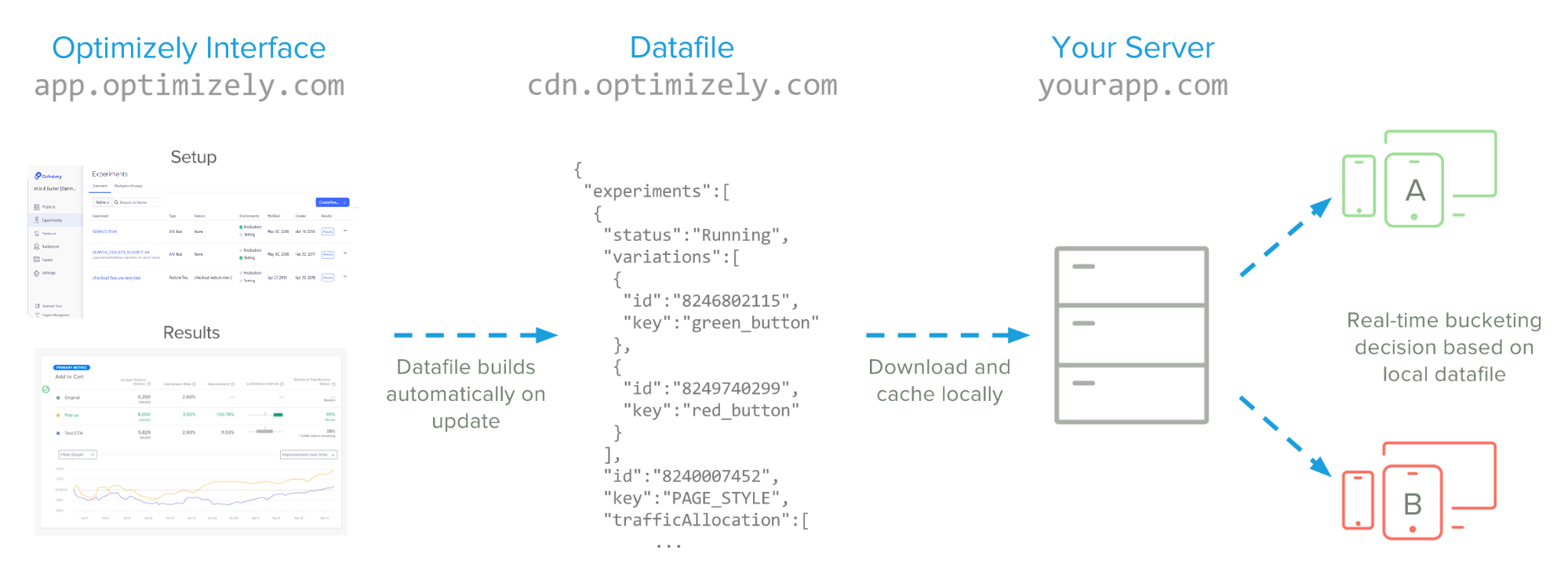

In Full Stack, you configure tests and variations in a web interface hosted on app.optimizely.com. Then, you implement an SDK in your application to bucket users into the right variations based on that configuration. The link between these two systems is called the datafile.

The datafile is a JSON representation of all the tests, audiences, metrics, and other elements that you’ve configured in a project. Here is how to access the datafile for a Full Stack project.

When you make a change in Optimizely's interface, like starting a test or changing its traffic allocation, Optimizely builds a new revision of the datafile and serves it on cdn.optimizely.com. To incorporate this change, your application downloads the new datafile and caches it locally to make decisions quickly. We call this process datafile synchronization.

There are several approaches for synchronizing datafiles, depending on your application’s needs. These approaches involve a trade-off between latency and freshness. Finding the right balance ensures that your datafile stays up-to-date without slowing down your application. This guide walks through the best practices and alternatives for striking this balance.

There is no right answer for managing the datafile because every application has different implementation and performance constraints. We recommend evaluating these options and standardizing your approach to datafile management by building a wrapper around our SDKs. This wrapper can also capture other context-specific options like event dispatching and logging.

Note

This topic focuses on managing datafiles in server-side contexts. For our mobile iOS and Android SDKs, datafile management is implemented out-of-the-box. For more information about using datafiles on mobile platforms, see the documentation for those SDKs.

Datafile versioning for Full Stack projects

To maintain backward compatibility with older SDK versions, Optimizely maintains multiple versions of the datafile. Datafile versioning ensures that apps that have not been upgraded to the latest version of the SDK can still run experiments. For each SDK project, Optimizely uploads the datafile once per datafile version.

The version refers to the datafile schema. This is universal and not tied to a specific account or project. The version number is there for backward compatibility.

For example, for project ID "123", if there are three versions of the datafile, we upload the following three files to the CDN:

<https://cdn.optimizely.com/public/12...tafile_v1.json>

<https://cdn.optimizely.com/public/12...tafile_v2.json>

<https://cdn.optimizely.com/public/12...tafile_v3.json>

The dashboard always shows the path to the latest datafile version. In this case, that would be <https://cdn.optimizely.com/public/12...tafile_v3.json>. The latest version would also be accessible at <https://cdn.optimizely.com/json/123.json>.
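As a rough illustration, the latest-version URL pattern from the example above can be wrapped in a small helper. This is a minimal sketch using only the Python standard library. The names `CDN_URL_TEMPLATE`, `latest_datafile_url`, and `fetch_datafile` are hypothetical and are not part of any Optimizely SDK.

```python
import json
import urllib.request

# URL pattern taken from the example above (project ID "123").
CDN_URL_TEMPLATE = "https://cdn.optimizely.com/json/{project_id}.json"


def latest_datafile_url(project_id: str) -> str:
    """Build the CDN URL that serves the latest datafile for a project."""
    return CDN_URL_TEMPLATE.format(project_id=project_id)


def fetch_datafile(project_id: str, timeout: float = 5.0) -> dict:
    """Download and parse the latest datafile (performs a network request)."""
    url = latest_datafile_url(project_id)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `fetch_datafile("123")` would request the same URL shown in the example.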

If you’re using Full Stack SDKs to manage synchronizing the datafile from Optimizely, you don’t need to interface with different datafile versions directly. The SDK will make sure to use older versions of the datafile for older versions of the SDK.

Understanding the tradeoffs

To understand the tradeoffs in datafile management, it helps to consider the most naive approach.

Imagine that every time a test runs, you fetch the latest datafile, then use it to initialize an Optimizely client and make a decision. This approach guarantees you the latest datafile, but it comes at a major performance cost. Every decision requires a round-trip network request. In asynchronous contexts like SMS or chatbots, this can work.

However, for synchronous use cases like a web server or API, you can cache a local copy of the datafile within your application, then synchronize it periodically instead. This lets you make test decisions immediately, without waiting for a network request, while keeping the configuration up-to-date.

For example, you can set up a timer to re-download the datafile every 5 minutes and store it in memory, then read from there every time you make a request. Or, you could use a webhook to keep a centralized service in sync and make internal HTTP requests for the datafile. As these examples illustrate, there are several choices to consider when implementing datafile synchronization:

- Where to store the datafile: Locally in memory, on the filesystem, or on a separate service.

- When to update it: Via a “pull” model that polls for updates on a regular interval, or by listening for a “push” from a webhook.

The sections below walk through the best practices and trade-offs for each approach.
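The timer-based example described above (re-download on an interval, store in memory, read from memory on each request) could look roughly like the following. This is a sketch, not an SDK feature: `DatafileCache` is a hypothetical class, and the `fetch` callable is an assumption standing in for whatever code downloads the datafile.

```python
import threading
from typing import Callable, Optional


class DatafileCache:
    """Keeps the most recent datafile in memory and refreshes it on a timer."""

    def __init__(self, fetch: Callable[[], str], interval_seconds: float = 300.0):
        self._fetch = fetch                # hypothetical download function
        self._interval = interval_seconds  # 300 s = the 5-minute example
        self._lock = threading.Lock()
        self._datafile: Optional[str] = None
        self._stop = threading.Event()
        self._thread: Optional[threading.Thread] = None

    def refresh(self) -> None:
        """Fetch once; keep the previous copy if the download fails."""
        try:
            fresh = self._fetch()
        except Exception:
            return  # a stale datafile is still usable
        with self._lock:
            self._datafile = fresh

    def get(self) -> Optional[str]:
        """Return the cached datafile without any network access."""
        with self._lock:
            return self._datafile

    def start(self) -> None:
        self.refresh()  # blocking initial load
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self) -> None:
        # Event.wait returns False on timeout and True once stop() is called.
        while not self._stop.wait(self._interval):
            self.refresh()

    def stop(self) -> None:
        self._stop.set()
        if self._thread is not None:
            self._thread.join()
```

Request handlers call `get()` and never wait on the network. Only the background thread pays the download cost.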

Storage options

The most common storage approach is to store the JSON string of the datafile itself. This approach is sufficient in many cases, but parsing JSON can take up to 100 ms depending on the language, load, and datafile size. Even when parsing is much faster, be careful with implementations that require repeated JSON parsing. For example, if you re-instantiate the SDK within a loop, the performance cost can quickly add up.

If you do need to instantiate repeatedly, pass in the already-parsed object or previously-instantiated Optimizely client rather than the raw datafile.
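To make the difference concrete, the sketch below contrasts parsing on every instantiation with parsing once and reusing the result. `StandInClient` is a hypothetical placeholder for an SDK client, not the Optimizely API, and sharing the parsed object assumes that nothing mutates it.

```python
import json


class StandInClient:
    """Hypothetical stand-in for an SDK client; not the Optimizely API."""

    def __init__(self, config: dict):
        self.config = config


def make_clients_reparsing(raw_datafile: str, count: int) -> list:
    # Parses the same JSON string `count` times.
    return [StandInClient(json.loads(raw_datafile)) for _ in range(count)]


def make_clients_parse_once(raw_datafile: str, count: int) -> list:
    # Parses once, then hands the same parsed object to every client.
    parsed = json.loads(raw_datafile)
    return [StandInClient(parsed) for _ in range(count)]
```

The second function does the JSON work a single time regardless of `count`, which is the saving the paragraph above describes.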

Either option gives you the flexibility to choose a format for caching the datafile.

Best performance

We recommend storing the datafile directly in memory. You'll be able to look up the datafile with near-zero latency, so your web service stays performant. In simple applications, you accomplish this by instantiating the Optimizely object directly and passing it around as needed. In more complex applications, you can use tools like Memcached.

Multiple processes need to share the same datafile configuration

We recommend keeping the datafile in local storage. For example, you can keep the JSON file directly on your local file system, which is generally slower than a memory lookup but faster than a network request. Alternatively, you can store the datafile in a distributed store like Redis. In general, we recommend systems that allow fast reads and relatively fast writes.
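If you go the filesystem route, one detail worth handling is that readers should never observe a partially written file. The sketch below shows one way to do that with a temporary file and an atomic rename. The function names and the file path are hypothetical.

```python
import json
import os
import tempfile


def write_datafile(path: str, datafile: dict) -> None:
    """Write atomically so other processes never read a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    # Create the temp file in the target directory so the rename stays
    # on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as tmp:
            json.dump(datafile, tmp)
        os.replace(tmp_path, path)  # atomic on POSIX filesystems
    except BaseException:
        os.unlink(tmp_path)  # clean up the temp file on any failure
        raise


def read_datafile(path: str) -> dict:
    """Load the shared datafile from disk."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)
```

One process (for example, the one that polls the CDN) calls `write_datafile`, and every other process calls `read_datafile` when it needs the current configuration.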

Colocated services

If you have many colocated services that all need to operate off the same datafile, consider hosting the Full Stack SDK as an independent service. This service can expose SDK methods like activate() and track() as HTTP endpoints that other services can hit. Then, you can implement datafile synchronization within the service using any of the methods above. This approach adds a small latency hit from the internal network request, but it makes implementing in a microservice architecture substantially easier, especially if you have many different types of services operating in different languages. The centralized endpoint allows you to implement the logic just once, rather than separately in each service.
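The routing logic for such a service might be sketched as below. It is framework-agnostic on purpose: `handle_request` takes a path and a parsed JSON body and returns a status code and response body, so it can be wired into whatever HTTP server you use. The `DecisionClient` interface, the endpoint paths, and the body field names are all assumptions for illustration.

```python
from typing import Any, Dict, Optional, Protocol, Tuple


class DecisionClient(Protocol):
    """Hypothetical interface; adapt it to the SDK methods you expose."""

    def activate(self, experiment_key: str, user_id: str) -> Optional[str]: ...

    def track(self, event_key: str, user_id: str) -> None: ...


def handle_request(
    client: DecisionClient, path: str, body: Dict[str, Any]
) -> Tuple[int, Dict[str, Any]]:
    """Map an internal HTTP request onto a client method."""
    user_id = body.get("user_id")
    if not user_id:
        return 400, {"error": "user_id is required"}

    if path == "/activate":
        experiment_key = body.get("experiment_key")
        if not experiment_key:
            return 400, {"error": "experiment_key is required"}
        return 200, {"variation": client.activate(experiment_key, user_id)}

    if path == "/track":
        event_key = body.get("event_key")
        if not event_key:
            return 400, {"error": "event_key is required"}
        client.track(event_key, user_id)
        return 200, {"ok": True}

    return 404, {"error": "unknown endpoint"}
```

Because only this service holds a client, datafile synchronization happens in one place and callers in any language just send HTTP requests.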

Update options

The other key consideration is when to update the datafile. In general, you have two choices: pull or push. We recommend using both approaches together, if possible. Use a webhook (push) as the primary means for keeping your datafile up to date, but keep polling (pull) at regular intervals in case the webhook fails.

Pull updates

The “pull” approach consists of polling the Optimizely CDN on a regular interval and updating the stored datafile whenever a new revision is available. Polling is generally easy to implement through a timer or cron job.

Pulling works best if you don’t need instant updates. For example, if you’re comfortable with pressing the “pause” button on an A/B test that’s performing badly and waiting a while for the change to percolate to your users, polling on a 5- or 10-minute interval is fine.
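A single polling step that only replaces the stored datafile when the revision changes might look like this. It is a sketch under two assumptions: that the datafile JSON carries a top-level `revision` field, and that `fetch` is a function you supply that returns the raw datafile string. `RevisionTracker` is a hypothetical name.

```python
import json
from typing import Callable, Optional


class RevisionTracker:
    """Stores a datafile and replaces it only when the revision changes."""

    def __init__(self, fetch: Callable[[], str]):
        self._fetch = fetch  # hypothetical download function
        self.revision: Optional[str] = None
        self.datafile: Optional[dict] = None

    def poll_once(self) -> bool:
        """Fetch the datafile; return True if a new revision was stored."""
        parsed = json.loads(self._fetch())
        # Assumes a top-level "revision" field in the datafile.
        revision = str(parsed["revision"])
        if revision == self.revision:
            return False  # nothing changed, keep the current copy
        self.revision = revision
        self.datafile = parsed
        return True
```

Run `poll_once()` from a timer or cron job at whatever interval you choose. A `True` result is the signal to re-initialize anything that depends on the datafile.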

Push updates

If you need faster updates, such as every time a feature flag is toggled, we recommend “pushing” the changes as soon as you make them. You can configure a webhook to ping your server as soon as a change is made so you can pull the update down immediately. For an example of webhooks in action, see our Python demo app.

This is the preferred approach for server-side contexts with a reliable network connection, but it doesn’t usually apply for web and mobile clients.
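On the receiving side, a webhook handler can be quite small: validate the payload, then trigger the same refresh routine that polling would use. The sketch below is an illustration only. The payload field names (`project_id`, `event`) and the event value `project.datafile_updated` are assumptions, so check them against the payload your webhook actually delivers. `handle_webhook` and `refresh` are hypothetical names.

```python
import json
from typing import Callable


def handle_webhook(
    raw_body: bytes, project_id: str, refresh: Callable[[], None]
) -> bool:
    """Trigger a refresh when the payload announces a datafile update.

    Returns True if a refresh was triggered. Field names are assumptions.
    """
    try:
        payload = json.loads(raw_body)
    except ValueError:
        return False  # not valid JSON, ignore the request
    if not isinstance(payload, dict):
        return False
    if str(payload.get("project_id")) != project_id:
        return False  # update for a different project
    if payload.get("event") != "project.datafile_updated":
        return False  # some other kind of notification
    refresh()  # pull the new datafile immediately
    return True
```

A production handler would typically also authenticate the request before acting on it.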

Fetch the datafile

You can also get the datafile through the REST API. This ensures that you get the most up-to-date changes, but the download itself may take slightly longer, and you need to authenticate with an API token.
