Run basic feature tests

If you're new to experimentation, you can get a lot done with a simple ON/OFF feature test. This test configuration has one feature with two variations:

- One variation configured to "toggle=ON"

- One variation configured to "toggle=OFF"

This basic setup looks similar to a feature rollout, but it lets you compare how the new feature affects operational and product metrics for your application.

For more advanced feature tests that include remote feature configuration, see Run tests with feature configurations.

Restrictions

You can run only one feature test at a time on each feature flag.

Setup overview

Each feature test runs on a feature flag.

- Create a feature test in the Optimizely app. See Create a feature test.

- Integrate the example code that the Optimizely app generates with your application. See Implement the feature test.

- (Optional) Configure and add an audience, or group of users you will target. See Add an audience.

- (Optional) Configure traffic. See Configure experiment traffic.

- Implement track events. See Track events.

- Configure events into experiment metrics. See Choose metrics.

- QA your experiment in a non-production environment. See Choose QA tests.

- Discard any QA user events, and enable your experiment in a production environment.

Create a feature test

To create a new feature test in the Optimizely web app:

- Navigate to Experiments > Create New...

- From the menu, select Feature Test.

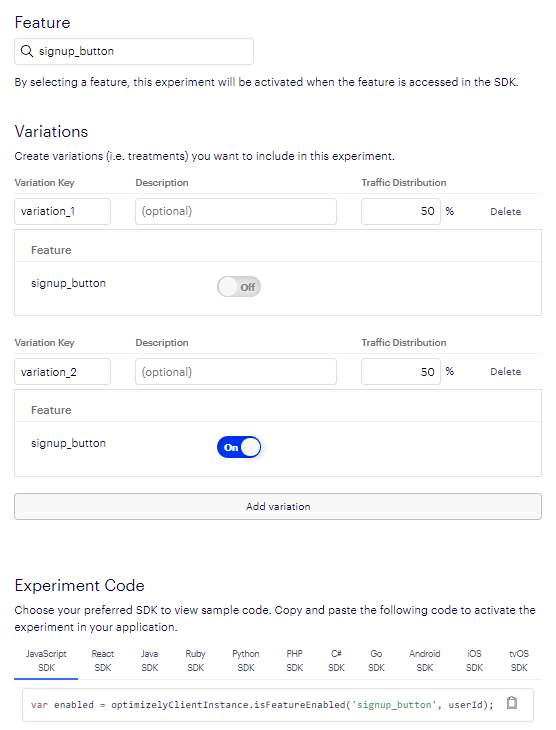

- Select an existing feature or select Create New Feature to add a new feature. Optimizely will automatically create:

  - An experiment key by appending “_test” to the end of the feature key for the feature you selected

  - Two variations (variation_1 and variation_2). One is enabled, and one is disabled.

  - Example integration code

For a basic test, you can leave the defaults as they are. For example:

Example of a basic feature test

Implement the feature test

You need to integrate the feature test with your application code, so that Optimizely can tell your application which experience to run for a given user. Copy the sample integration code into your application code and edit it so that:

- Your feature code runs or does not run, based on the output of Is Feature Enabled that it receives from Optimizely.

- The userID variable is set according to how you handle user IDs. See Handle user IDs.
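As a rough illustration of the gating pattern described above, the following Python sketch branches on the result of an Is Feature Enabled call. It is not the code the Optimizely app generates: `StubFeatureClient`, the feature key `new_checkout`, and `render_checkout` are hypothetical names, and the stub only stands in for a real SDK client so that the example runs on its own. Check the SDK reference for the exact method signature in your language.

```python
from typing import Optional


class StubFeatureClient:
    """Hypothetical stand-in for an SDK client; not the real Optimizely SDK."""

    def __init__(self, enabled_users: set):
        self._enabled_users = enabled_users

    def is_feature_enabled(self, feature_key: str, user_id: str,
                           attributes: Optional[dict] = None) -> bool:
        # A real client would bucket the user into a variation here.
        # This stub simply looks the user up in a fixed set.
        return user_id in self._enabled_users


def render_checkout(client, user_id: str) -> str:
    """Run the new code path only when the feature is enabled for this user."""
    # "new_checkout" is an assumed feature key, used for illustration only.
    if client.is_feature_enabled("new_checkout", user_id):
        return "new checkout"
    return "old checkout"


client = StubFeatureClient(enabled_users={"user_123"})
print(render_checkout(client, "user_123"))  # prints "new checkout"
print(render_checkout(client, "user_456"))  # prints "old checkout"
```

Keeping the branch inside one function means that removing the test later only requires deleting the losing code path.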

Add an audience

You add an audience to your experiment in the Optimizely app:

- Create an audience. See Target audiences.

- Navigate to Experiments and select your feature test.

- In the sidebar, navigate to Audiences and add the audience to your experiment.

Configure experiment traffic

You can configure traffic for your experiment in two places in the Optimizely app (you don't need to write any code to integrate with your application):

- To manage traffic distribution (in other words, the split of traffic across the variations of your feature test), navigate to Experiments > Variations. Optimizely sets even traffic distribution across all variations in an experiment by default.

- To manage traffic allocation (in other words, the fraction of an audience that is eligible for the experiment), navigate to Experiments > Traffic Allocation.

If you plan to change the traffic after you start running the experiment, implement a user profile service before you start the experiment. For more information, see Ensure consistent visitor bucketing.
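To make the idea of a user profile service concrete, here is a minimal in-memory sketch in Python. It assumes the service exposes a `lookup` method and a `save` method and that a profile is a dictionary holding a `user_id` and an `experiment_bucket_map`; treat that shape, the class name, and the IDs used below as assumptions and confirm them against the SDK documentation. A production service would persist profiles in a durable store rather than in process memory.

```python
class InMemoryUserProfileService:
    """Illustrative only: remembers each user's variation assignments."""

    def __init__(self):
        self._profiles = {}

    def lookup(self, user_id):
        # Return the stored profile, or None if the user has not been seen.
        return self._profiles.get(user_id)

    def save(self, user_profile):
        # Store the profile under its user ID, replacing any earlier copy.
        self._profiles[user_profile["user_id"]] = user_profile


service = InMemoryUserProfileService()
service.save({
    "user_id": "user_123",
    # Assumed shape: experiment ID mapped to the assigned variation ID.
    "experiment_bucket_map": {"exp_1": {"variation_id": "var_1"}},
})
print(service.lookup("user_123")["experiment_bucket_map"]["exp_1"]["variation_id"])
```

Because the stored assignment is consulted before any new bucketing, a user keeps the same variation even if the traffic split changes later.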

Note: Feature tests evaluate before rollouts

Optimizely evaluates traffic in the context of the feature test first and the feature flag second. If a feature you created has both a feature flag and a feature test turned on, the feature test is evaluated first.

For example, imagine that you configure a feature test so that 80% of traffic is allocated to the feature test and 20% is not. The feature flag underneath is rolled out to 50% of traffic.

- 80% of eligible traffic will enter your feature test, and will be randomly assigned to a variation.

- 50% of the remaining traffic (the 20% not bucketed into the feature test) will be allotted to the rollout, so 10% of your total eligible traffic will see the feature through the rollout.
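The arithmetic in this example can be checked with a short Python sketch. The function name `exposure_breakdown` and its parameters are made up for illustration; the sketch only reproduces the percentages above and does not model how Optimizely buckets individual users.

```python
from fractions import Fraction


def exposure_breakdown(test_allocation, rollout_percentage):
    """Return (share in the feature test, share reached by the rollout)."""
    in_test = test_allocation
    # Only traffic that did not enter the feature test reaches the rollout.
    remaining = 1 - in_test
    via_rollout = remaining * rollout_percentage
    return in_test, via_rollout


in_test, via_rollout = exposure_breakdown(Fraction(80, 100), Fraction(50, 100))
print(f"{float(in_test):.0%} in test, {float(via_rollout):.0%} via rollout")
# prints "80% in test, 10% via rollout"
```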

For more information, see Interactions between feature tests and rollouts.
