Run A/B tests

Explains the steps necessary to run an A/B test with Optimizely Full Stack.

Not all experiments are tied to specific features you have already flagged in your code. Sometimes, you will want to run a standalone test to answer a specific question: which of two (or more) variations performs best? For example, is sorting the products on a category page by price or category more effective?

These one-off experiments are called A/B tests, as opposed to feature tests that run on features you have already flagged. With A/B tests, you define two or more variation keys and then implement a different code path for each variation. From the Optimizely interface, you can determine which users are eligible for the experiment and how to split traffic between the variations, as well as the metrics you will use to measure each variation's performance.

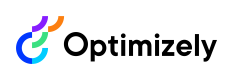

1. Select A/B Test in your project

In the Experiments tab, click Create New Experiment and select A/B Test.

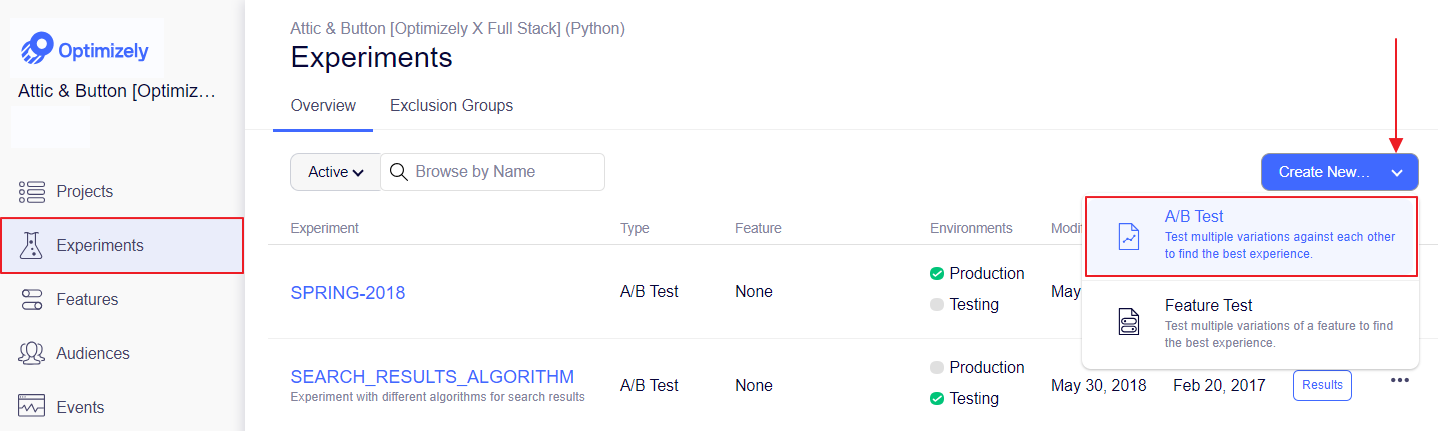

2. Set an experiment key

Specify an experiment key.

Your experiment key must contain only alphanumeric characters, hyphens, and underscores. The key must also be unique for your Optimizely project so you can correctly disambiguate experiments in your application.
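The key rules above can be checked before you create an experiment. The following is a minimal sketch, not part of any Optimizely SDK: the helper name `is_valid_experiment_key` is hypothetical, and it assumes "alphanumeric" means ASCII letters and digits.

```python
import re

# Hypothetical helper, not part of the Optimizely SDK.
# Assumption: "alphanumeric" means ASCII letters and digits only.
_KEY_PATTERN = re.compile(r"[A-Za-z0-9_-]+")


def is_valid_experiment_key(key: str, existing_keys: set) -> bool:
    """Return True if the key uses only allowed characters and is unused in the project."""
    return bool(_KEY_PATTERN.fullmatch(key)) and key not in existing_keys


print(is_valid_experiment_key("app_redesign", {"search_ranking"}))
print(is_valid_experiment_key("app redesign", set()))
```

A check like this could run wherever experiment keys are defined in your own code, so that a typo is caught before it reaches the application.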

Important: Do not change the experiment key without making the corresponding change in your code.

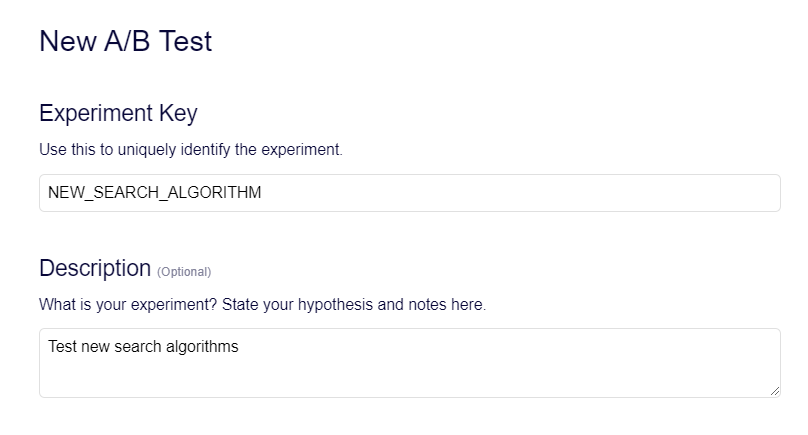

3. Set experiment traffic allocation

The traffic allocation is the fraction of your total traffic to include in the experiment, specified as a percentage.

For example, suppose you set an allocation of 50% for an experiment that is triggered when a user does a search. This means:

- The experiment is triggered only when a user does a search, and not for every such user: 50% of users who do a search are in the experiment, and the other 50% are not.

- Users who do not do a search are never in the experiment. In other words, the traffic allocation percentage applies only to users who reach the experiment, which may not be all traffic for your application.
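The 50% example above can be sketched as a deterministic hash of the user ID compared against the allocation. This is an illustrative stand-in, not Optimizely's actual bucketing algorithm: the function names, the use of SHA-256, and the 10,000-bucket range are all assumptions made for the sketch.

```python
import hashlib


def bucket_value(user_id: str, experiment_key: str) -> int:
    """Map a user deterministically to an integer in [0, 10000)."""
    # Assumption: SHA-256 is used here only because it ships with Python.
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 10000


def is_in_experiment(user_id: str, experiment_key: str, allocation_percent: float) -> bool:
    """Return True if the user falls inside the allocated share of traffic."""
    # 50% allocation admits buckets 0..4999; 100% admits every bucket.
    return bucket_value(user_id, experiment_key) < allocation_percent * 100


# Only users who actually run a search would reach this check.
users = [f"user_{i}" for i in range(10000)]
included = sum(is_in_experiment(u, "search_test", 50) for u in users)
print(f"{included} of {len(users)} searching users are in the experiment")
```

Because the hash depends only on the user ID and the experiment key, the same user gets the same answer on every call.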

You can keep the default 50%/50% split that Optimizely provides, or you can increase the traffic allocation to reach statistical significance faster.

For more information, see our help center article Changing traffic allocation and distribution in Optimizely.

Optimizely determines the traffic allocation at the point where you call the Activate method in the SDK.

You can also add your experiment to an exclusion group at this point.

4. Set variation keys and traffic distribution

Variations are the different code paths you want to experiment on. Enter a unique variation key to identify the variation in the experiment and optionally a short, human-readable description for reporting purposes.

You must specify at least one variation. There is no limit to how many variations you can create.

Use the Distribution Mode dropdown to select how you distribute traffic between your variations:

- Manual – By default, variations are given equal traffic distribution. Customize this value for your experiment's requirements.

- Stats Accelerator – To get to statistical significance faster or to maximize the return of the experiment, use Optimizely’s machine learning engine, the Stats Accelerator. For more information, see Get to statistical significance faster with Stats Accelerator. For information about when to use Stats Accelerator versus running a multi-armed bandit optimization, see Multi-armed bandits vs Stats Accelerator.
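To make the Manual mode concrete, the sketch below maps a user to a variation from a percentage distribution. It assumes each user has already been assigned an integer bucket in [0, 10000); the function name `pick_variation` and the bucket range are hypothetical and do not describe Optimizely's internal implementation.

```python
from bisect import bisect_right
from itertools import accumulate


def pick_variation(bucket: int, distribution: dict) -> str:
    """Pick a variation key for a bucket in [0, 10000).

    `distribution` maps variation key -> percentage; percentages must sum to 100.
    """
    if not 0 <= bucket < 10000:
        raise ValueError("bucket must be in [0, 10000)")
    keys = list(distribution)
    # Cumulative range end points in hundredths of a percent, e.g. [5000, 10000].
    ends = list(accumulate(round(distribution[k] * 100) for k in keys))
    if ends[-1] != 10000:
        raise ValueError("distribution must sum to 100%")
    return keys[bisect_right(ends, bucket)]


equal_split = {"control": 50, "treatment": 50}
print(pick_variation(1234, equal_split))  # control
print(pick_variation(5000, equal_split))  # treatment
```

Changing the percentages in the dictionary shifts the range boundaries, which is the effect of customizing the distribution in Manual mode.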


5. (Optional) Add an audience

You can opt to define audiences if you want to show your experiment only to certain groups of users. See Define attributes and Target audiences.
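As a rough illustration of audience targeting, the sketch below uses a stub client in which users whose attributes do not match the audience receive no variation. `AudienceStubClient`, the `device` attribute, and the fixed `"control"` result are hypothetical; a real SDK evaluates the audiences configured in the Optimizely interface and buckets the user itself.

```python
from typing import Callable, Dict, Optional


class AudienceStubClient:
    """Hypothetical stand-in for an Optimizely client; it only mimics audience gating."""

    def __init__(self, audience: Callable[[Dict[str, object]], bool]):
        self._audience = audience

    def activate(self, experiment_key: str, user_id: str,
                 attributes: Optional[Dict[str, object]] = None) -> Optional[str]:
        # Users whose attributes fail the audience check get no variation.
        if not self._audience(attributes or {}):
            return None
        # A real SDK would bucket the user here; fixed for illustration.
        return "control"


client = AudienceStubClient(audience=lambda attrs: attrs.get("device") == "iphone")
print(client.activate("app_redesign", "user_1", {"device": "iphone"}))   # control
print(client.activate("app_redesign", "user_2", {"device": "android"}))  # None
```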

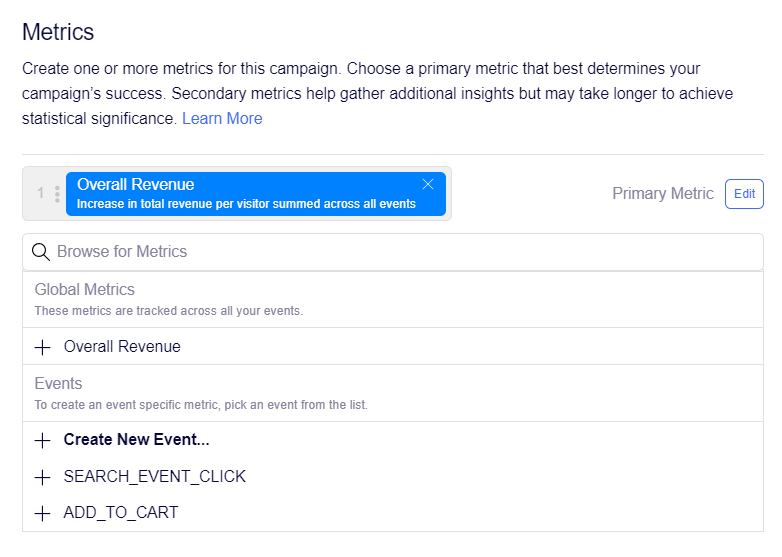

6. Add a metric

Add events that you’re tracking with the Optimizely SDKs as metrics to measure impact. Whether you use existing events or create new events to use as metrics, you must add at least one metric to an experiment. To re-order the metrics, click and drag them into place.

Important: The top metric in an experiment is the primary metric. Stats Engine uses the primary metric to determine whether an A/B test wins or loses overall. Learn about the strategy behind primary and secondary metrics.

7. Complete your experiment setup

Click Create Experiment to complete your experiment setup.

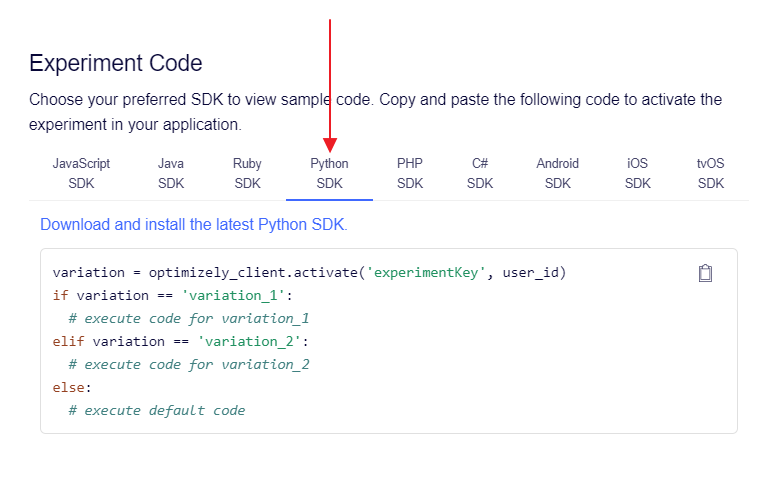

8. Implement the code sample into your application

Once you have defined an A/B test, you will see a code sample for implementing it in your application.

Activate method

For each A/B test, you use the Activate method to decide which variation a user falls into, then use an if statement to apply the code for that variation.

```java
import com.optimizely.ab.android.sdk.OptimizelyClient;
import com.optimizely.ab.config.Variation;

// Activate an A/B test
Variation variation = optimizelyClient.activate("app_redesign", userId);
if (variation != null) {
    if (variation.is("control")) {
        // Execute code for "control" variation
    } else if (variation.is("treatment")) {
        // Execute code for "treatment" variation
    }
} else {
    // Execute code for users who don't qualify for the experiment
}
```

```csharp
using OptimizelySDK;

// Activate an A/B test
string userId = "";
var variation = optimizelyClient.Activate("app_redesign", userId);
if (variation != null && !string.IsNullOrEmpty(variation.Key))
{
    if (variation.Key == "control")
    {
        // Execute code for "control" variation
    }
    else if (variation.Key == "treatment")
    {
        // Execute code for "treatment" variation
    }
}
else
{
    // Execute code for users who don't qualify for the experiment
}
```

```java
import com.optimizely.ab.Optimizely;
import com.optimizely.ab.config.Variation;

// Activate an A/B test
Variation variation = optimizelyClient.activate("app_redesign", userId);
if (variation != null) {
    if (variation.is("control")) {
        // Execute code for "control" variation
    } else if (variation.is("treatment")) {
        // Execute code for "treatment" variation
    }
} else {
    // Execute code for users who don't qualify for the experiment
}
```

```javascript
// Activate an A/B test
var variation = optimizelyClientInstance.activate('app_redesign', userId);
if (variation === 'control') {
  // Execute code for "control" variation
} else if (variation === 'treatment') {
  // Execute code for "treatment" variation
} else {
  // Execute code for users who don't qualify for the experiment
}
```

```javascript
// Activate an A/B test
var variation = optimizelyClient.activate("app_redesign", userId);
console.log(`User ${userId} is in variation: ${variation}`);
if (variation === "control") {
  // Execute code for "control" variation
} else if (variation === "treatment") {
  // Execute code for "treatment" variation
} else {
  // Execute code for users who don't qualify for the experiment
}
```

```objectivec
#import "OPTLYVariation.h"

// Activate an A/B test
NSString *variationKey = [optimizely activateWithExperimentKey:@"app_redesign"
                                                        userId:@"12122"
                                                         error:nil];
if ([variationKey isEqualToString:@"control"]) {
    // Execute code for "control" variation
} else if ([variationKey isEqualToString:@"treatment"]) {
    // Execute code for "treatment" variation
} else {
    // Execute code for users who don't qualify for the experiment
}
```

```php
// Activate an A/B test
$variation = $optimizelyClient->activate('app_redesign', $userId);
if ($variation == 'control') {
    // Execute code for "control" variation
} else if ($variation == 'treatment') {
    // Execute code for "treatment" variation
} else {
    // Execute code for users who don't qualify for the experiment
}
```

```python
# Activate an A/B test
variation = optimizely_client.activate('app_redesign', user_id)
if variation == 'control':
    # Execute code for "control" variation
    pass
elif variation == 'treatment':
    # Execute code for "treatment" variation
    pass
else:
    # Execute code for users who don't qualify for the experiment
    pass
```

```ruby
# Activate an A/B test
variation = optimizely_client.activate('app_redesign', user_id)
if variation == 'control'
  # Execute code for "control" variation
elsif variation == 'treatment'
  # Execute code for "treatment" variation
else
  # Execute code for users who don't qualify for the experiment
end
```

```swift
// Activate an A/B test
if let variationKey = try? optimizely.activate(experimentKey: "app_redesign",
                                               userId: "12122") {
    if variationKey == "control" {
        // Execute code for "control" variation
    } else if variationKey == "treatment" {
        // Execute code for "treatment" variation
    }
} else {
    // Execute code for users who don't qualify for the experiment
}
```

The Activate method:

- Evaluates whether the user is eligible for the experiment and returns a variation key if so. For more on how the variation is chosen, see How bucketing works and the SDK reference guide for the Activate method.

- Sends an event to Optimizely to record that the current user has been exposed to the A/B test. You should call Activate at the point you want to record an A/B test exposure to Optimizely. If you do not want to record an A/B test exposure, use the Get Variation method instead.

Note: If any of the conditions for the experiment are not met, the response is null. Make sure that your code adequately handles this default case. In general, you will want to run the baseline experience.
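The following sketch shows one way to handle the null case by falling back to the baseline, and how Activate differs from Get Variation in recording an exposure. `RecordingStubClient` is a hypothetical stand-in so the example runs without the SDK, and the `category_sort` experiment key and sort orders are assumptions based on the sorting example at the top of this page. In the Python SDK the null response is `None`.

```python
from typing import Dict, List, Optional


class RecordingStubClient:
    """Hypothetical stand-in for an Optimizely client, for illustration only."""

    def __init__(self, assignments: Dict[str, str]):
        self._assignments = assignments   # user_id -> variation key
        self.impressions: List[str] = []  # users whose exposure was recorded

    def get_variation(self, experiment_key: str, user_id: str) -> Optional[str]:
        # Returns the variation without recording an exposure.
        return self._assignments.get(user_id)

    def activate(self, experiment_key: str, user_id: str) -> Optional[str]:
        # Returns the variation and records an exposure for eligible users.
        variation = self.get_variation(experiment_key, user_id)
        if variation is not None:
            self.impressions.append(user_id)
        return variation


def sort_order_for(client, user_id: str) -> str:
    """Choose how to sort products, defaulting to the baseline experience."""
    variation = client.activate("category_sort", user_id)
    if variation == "treatment":
        return "category"
    # Covers "control", None, and any unexpected key: run the baseline.
    return "price"


client = RecordingStubClient({"u1": "treatment", "u2": "control"})
print(sort_order_for(client, "u1"))  # category
print(sort_order_for(client, "u3"))  # price (user is not in the experiment)
print(client.impressions)            # ['u1']
```

Treating the baseline as the final branch, rather than matching `"control"` explicitly, means an ineligible user or an unexpected variation key still gets a working experience.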
